MANY OF THE trends in warfare that this special report has identified, although worrying, are at least within human experience. Great-power competition may be making a comeback. The attempt of revisionist powers to achieve their ends by using hybrid warfare in the grey zone is taking new forms. But there is nothing new about big countries bending smaller neighbours to their will without invading them. The prospect of nascent technologies contributing to instability between nuclear-armed adversaries is not reassuring, but past arms-control agreements suggest possible ways of reducing the risk of escalation.

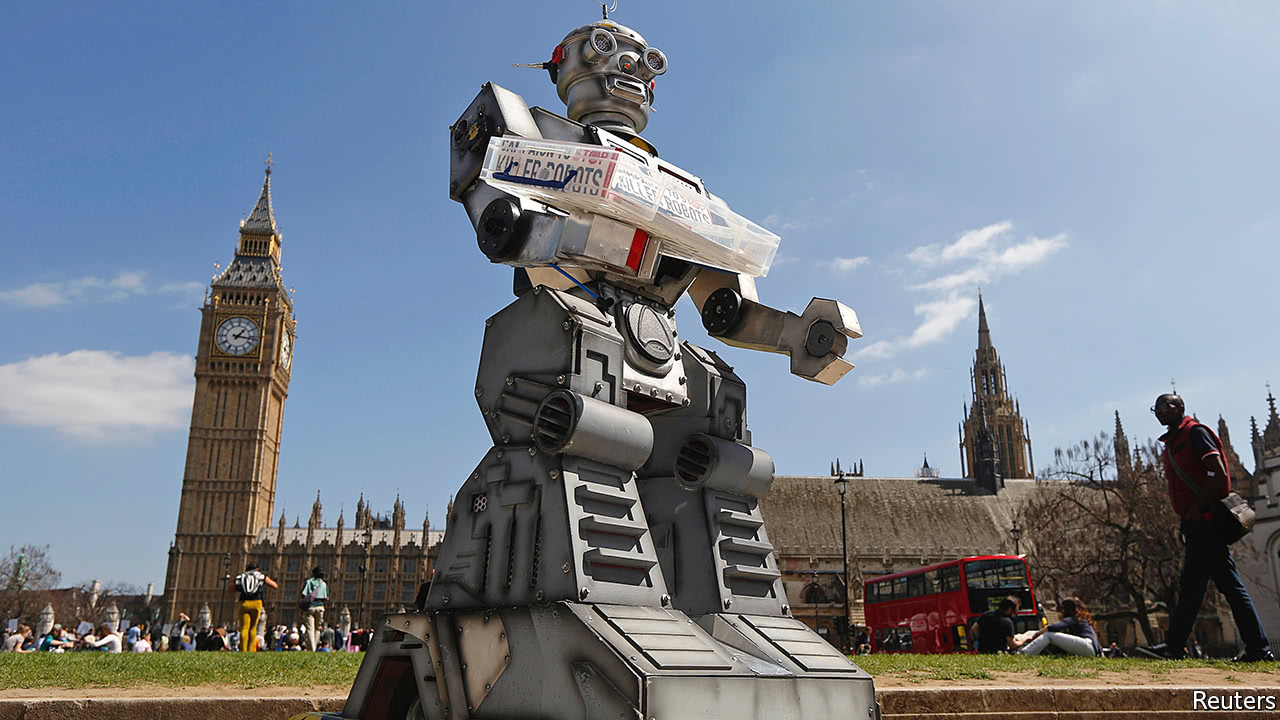

The fast-approaching revolution in military robotics is in a different league. It poses daunting ethical, legal, policy and practical problems, potentially creating dangers of an entirely new and, some think, existential kind. Concern has been growing for some time. Discussions about lethal autonomous weapons (LAWs) have been held at the UN’s Convention on Certain Conventional Weapons (CCW), which prohibits or restricts some weapons deemed to cause unjustifiable suffering. A meeting of the CCW in November brought together a group of government experts and NGOs from the Campaign to Stop Killer Robots, which wants a legally binding international treaty banning LAWs, just as cluster munitions, landmines and blinding lasers have been banned in the past.

The trouble is that autonomous weapons range all the way from missiles capable of selective targeting to learning machines with the cognitive skills to decide whom, when and how to fight. Most people agree that when lethal force is used, humans should be involved in initiating it. But determining what sort of human control might be appropriate is trickier, and the technology is moving so fast that it is leaving international diplomacy behind.

To complicate matters, the most dramatic advances in AI and autonomous machines are being made by private firms with commercial motives. Even if agreement on banning military robots could be reached, the technology enabling autonomous weapons will be both pervasive and easily transferable.

Moreover, governments have a duty to keep their citizens secure. Concluding that they can manage quite well without chemical weapons or cluster bombs is one thing. Allowing potential adversaries a monopoly on technologies that could enable them to launch a crushing attack because some campaign groups have raised concerns is quite another.

As Peter Singer notes, the AI arms race is propelled by unstoppable forces: geopolitical competition, science pushing at the frontiers of knowledge, and profit-seeking technology businesses. So the question is whether and how some of its more disturbing aspects can be constrained. At its simplest, most people are appalled by the idea of thinking machines being allowed to make their own choices about killing human beings. And although the ultimate nightmare of a robot uprising in which machines take a genocidal dislike to the human race is still science fiction, other fears have substance.

Nightmare scenarios

Paul Scharre is concerned that autonomous systems might malfunction, perhaps because of badly written code or because of a cyber attack by an adversary. That could cause fratricidal attacks on their own side’s human forces or escalation so rapid that humans would not be able to respond. Testing autonomous weapons for reliability is tricky. Thinking machines may do things in ways that their human controllers never envisaged.

Much of the discussion about “teaming” with robotic systems revolves around humans’ place in the “observe, orient, decide, act” (OODA) decision-making loop. The operator of a remotely piloted armed Reaper drone is in the OODA loop, deciding where it goes and what it does when it gets there. An on-the-loop system, by contrast, will carry out most of its mission without a human operator, but a human can intercede at any time, for example by aborting the mission if the target has changed. A fully autonomous system, in which the human operator merely presses the start button, has responsibility for carrying through every part of the mission, including target selection, so it is off the loop. By analogy, an on-the-loop driver of an autonomous car would let it do most of the work but would be ready to resume control should the need arise. Yet if the car merely had its destination chosen by the user and travelled there without any further intervention, the human would be off the loop.

For now, Western armed forces are determined to keep humans either in or on the loop. In 2012 the Pentagon issued a policy directive: “These [autonomous] systems shall be designed to allow commanders and operators to exercise appropriate levels of human judgment over the use of force. Persons who authorise the use of, direct the use of, or operate, these systems must do so with appropriate care and in accordance with the law of war, applicable treaties, weapons-systems safety rules and applicable rules of engagement.”

That remains the policy. But James Miller, the former under-secretary of Defence for Policy at the Pentagon, says that although America will try to keep a human in or on the loop, adversaries may not. They might, for example, decide on pre-delegated decision-making at hyper-speed if their command-and-control nodes are attacked. Russia is believed to operate a “dead hand” that will automatically launch its nuclear missiles if its seismic, light, radioactivity and pressure sensors detect a nuclear attack.

Mr Miller thinks that if autonomous systems are operating in highly contested space, the temptation to let the machine take over will become overwhelming: “Someone will cross the line of sensibility and morality.” And when they do, others will surely follow. Nothing is more certain about the future of warfare than that technological possibilities will always shape the struggle for advantage.
