Roger Bradbury

Just as we’ve become used to the idea of cyber warfare, along come the attacks, via social media, on our polity. We’ve watched with growing amazement the brazen efforts by the Russian state to influence the US elections, the UK’s Brexit referendum and other democratic targets. And we’ve tended to conflate them with the seemingly endless cyber hacks and attacks on our businesses, governments, infrastructure and a long-suffering citizenry. But these social media attacks are a different beast altogether: more sinister, more consequential and far more difficult to counter. They are the modern realisation of the Marxist-Leninist idea that information is a weapon in the struggle against Western democracies, and that the war is ongoing. There is no peacetime or wartime; there are no non-combatants. Indeed, the citizenry are the main targets.

A new battlespace for an old war

These subversive attacks on us are not a prelude to war; they are the war itself, what Cold War strategist George Kennan called “political warfare”.

Perversely, as US cyber experts Herb Lin and Jaclyn Kerr note, modern communication attacks exploit the technical virtues of the internet such as “high connectivity” and “democratised access to publishing capabilities”. What the attackers do is, broadly speaking, not illegal.

The battlespace for this warfare is not the physical environment but the cognitive one, inside our brains. It seeks to sow confusion and discord and to reduce our ability to think and reason rationally.

Social media platforms are the perfect theatres in which to wage political warfare. Their vast reach, high tempo, anonymity, directness and low production costs mean that political messages can be distributed quickly, cheaply and anonymously. Those messages can also be tailored to target audiences and amplified to drown out adversary messages.

Simulating dissimulation

We built simulation models (for a forthcoming publication) to test these ideas. We were astonished at how effectively this new cyber warfare can wreak havoc in the models, co-opting filter bubbles and preventing the emergence of democratic discourse.

We used agent-based models to examine how opinions shift in response to the insertion of strong opinions (fake news or propaganda) into the discourse.

Our agents in these simple models were individuals, each with a set of opinions. We represented each opinion as an axis in an opinion space, and located each individual in that space by the values of its opinions. Individuals close to each other in the opinion space hold similar opinions; the difference between their opinions is simply the distance between them.
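To make the geometry concrete, here is a minimal sketch of an opinion space, assuming each individual is a tuple of numbers (one per opinion axis) and that "distance" means ordinary Euclidean distance. The article does not specify the metric, and the names `Opinions` and `opinion_distance` are hypothetical.

```python
import math

# Hypothetical representation: one float per opinion axis, e.g.
# (opinion on issue X, opinion on issue Y).
Opinions = tuple[float, ...]


def opinion_distance(a: Opinions, b: Opinions) -> float:
    """Difference of opinion between two individuals, assumed Euclidean."""
    if len(a) != len(b):
        raise ValueError("individuals must share the same opinion axes")
    return math.dist(a, b)


alice = (0.2, 0.8)
bob = (0.5, 0.4)
print(opinion_distance(alice, bob))
```

The two example individuals differ by 0.3 on issue X and 0.4 on issue Y, so their distance is 0.5 (up to floating-point rounding).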

When an individual links to a neighbour, the two experience a degree of convergence: their opinions are drawn towards each other. An individual’s position is therefore not fixed, but may shift under the influence of the opinions of others.
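The article says only that linked individuals experience "a degree of convergence". One simple way to model that, offered here as an assumption rather than the authors' rule, is for each partner to move a fixed fraction `rate` of the way towards the other. The function name `converge` and the `rate` parameter are hypothetical.

```python
# Hypothetical convergence rule: each linked individual moves a fraction
# `rate` of the way towards the other's position.
def converge(a, b, rate=0.1):
    if not 0.0 <= rate <= 0.5:
        raise ValueError("rate must be in [0, 0.5] so the pair cannot cross over")
    new_a = tuple(x + rate * (y - x) for x, y in zip(a, b))
    new_b = tuple(y + rate * (x - y) for x, y in zip(a, b))
    return new_a, new_b


a, b = converge((0.0, 0.0), (1.0, 1.0), rate=0.25)
print(a, b)  # (0.25, 0.25) (0.75, 0.75)
```

Under this rule the distance between the pair shrinks by a factor of `1 - 2 * rate` each time they interact, so a `rate` of 0.5 would make them meet in the middle.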

The dynamics in these models were driven by two conflicting processes:

Individuals are social: they need to communicate, and they seek to communicate with others with whom they agree, that is, with other individuals nearby in their opinion space.

Individuals can manage only a limited number of communication links at any time (also known as their Dunbar number), and they keep seeking links until they reach this number. Individuals are therefore sometimes forced to communicate with others with whom they disagree in order to reach their Dunbar number. But if they wish to create a new link and have already reached their Dunbar number, they prune an existing link.
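The two processes above can be sketched as a single linking rule. This is a hypothetical reading, not the published model: links are assumed to be one-way (each individual manages its own), an individual below its Dunbar number takes the nearest available partner however far away, and an individual at its Dunbar number prunes its most distant link only when a closer candidate exists. The names `update_links`, `positions`, `links` and `dunbar` are illustrative.

```python
import math


def update_links(me, positions, links, dunbar):
    """One linking attempt by individual `me` under a hypothetical rule.

    positions: list of opinion tuples, indexed by individual.
    links: dict from each individual to the set of individuals it links to.
           Links are assumed to be one-way: each individual manages its own.
    Returns the newly linked individual, or None if nothing changed.
    """
    if dunbar <= 0:
        return None
    mine = links[me]
    candidates = [j for j in range(len(positions)) if j != me and j not in mine]
    if not candidates:
        return None

    def gap(j):
        return math.dist(positions[me], positions[j])

    nearest = min(candidates, key=gap)
    if len(mine) < dunbar:
        # Still short of the Dunbar number: take the nearest available partner,
        # even one we disagree with, to fill the quota.
        mine.add(nearest)
        return nearest
    # At the Dunbar number: a new link means pruning an old one. We assume the
    # most distant link is pruned, and only if the newcomer is closer.
    farthest = max(mine, key=gap)
    if gap(nearest) < gap(farthest):
        mine.remove(farthest)
        mine.add(nearest)
        return nearest
    return None


positions = [(0.0, 0.0), (1.0, 0.0), (0.1, 0.0), (5.0, 0.0)]
links = {0: {3}, 1: set(), 2: set(), 3: set()}
print(update_links(0, positions, links, dunbar=1), links[0])  # 2 {2}
```

In the example, individual 0 is at its Dunbar number of 1 with a distant partner (3), so it drops that link in favour of the much closer individual 2.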

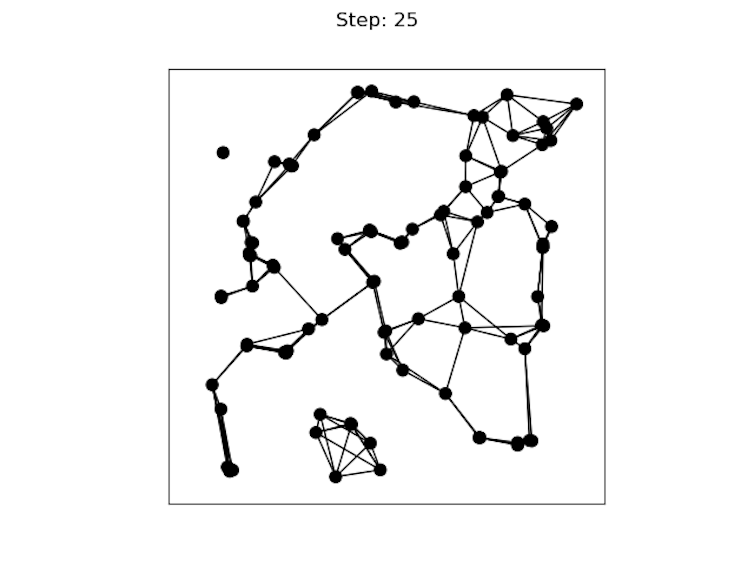

Figure 1: The emergence of filter bubbles, with two dimensions: opinions on issue X and opinions on issue Y. roger.bradbury@anu.edu.au

To begin, 100 individuals, represented as dots, were randomly distributed across the space with no links. At each step, every individual attempts to link with a near neighbour up to its Dunbar number, perhaps breaking earlier links to do so. In doing so, it may change its position in opinion space.
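The baseline experiment can be approximated in a few dozen lines. This is a sketch under stated assumptions, not the authors' code: opinions lie in a unit square, links are one-way, pruning drops the most distant link, and after linking each individual drifts a fraction `rate` towards each partner. The values chosen for `dunbar`, `rate` and `seed`, and the function name `simulate`, are arbitrary and hypothetical; the article does not report them.

```python
import math
import random


def simulate(n=100, dims=2, dunbar=5, rate=0.05, steps=25, seed=1):
    """Hypothetical sketch of the baseline experiment: n individuals start at
    random points in a unit opinion space with no links; at each step every
    individual seeks a near neighbour (pruning if at its Dunbar number) and
    then drifts towards the partners it is linked to."""
    if dunbar < 1:
        raise ValueError("dunbar must be at least 1")
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(dims)] for _ in range(n)]
    links = {i: set() for i in range(n)}

    def gap(i, j):
        return math.dist(pos[i], pos[j])

    for _ in range(steps):
        for me in range(n):
            others = [j for j in range(n) if j != me and j not in links[me]]
            if not others:
                continue
            nearest = min(others, key=lambda j: gap(me, j))
            if len(links[me]) < dunbar:
                links[me].add(nearest)
            else:
                farthest = max(links[me], key=lambda j: gap(me, j))
                if gap(me, nearest) < gap(me, farthest):
                    links[me].remove(farthest)
                    links[me].add(nearest)
            # Assumed convergence: move a fraction `rate` towards each partner.
            for j in links[me]:
                for k in range(dims):
                    pos[me][k] += rate * (pos[j][k] - pos[me][k])
    return pos, links


positions, links = simulate()
print(len(positions), max(len(s) for s in links.values()))  # 100 5
```

Plotting `positions` after a run is one way to look for clusters like those in Figure 1, but with these untuned parameters there is no guarantee the picture will match the published one.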

Over time, individuals draw together into like-minded groups (filter bubbles). But the bubbles are dynamic. They form and dissolve as individuals continue to prune old links and seek newer, closer ones as a result of their shifting positions in the opinion space. Figure 1, above, shows the state of the bubbles in one experiment after 25 steps.

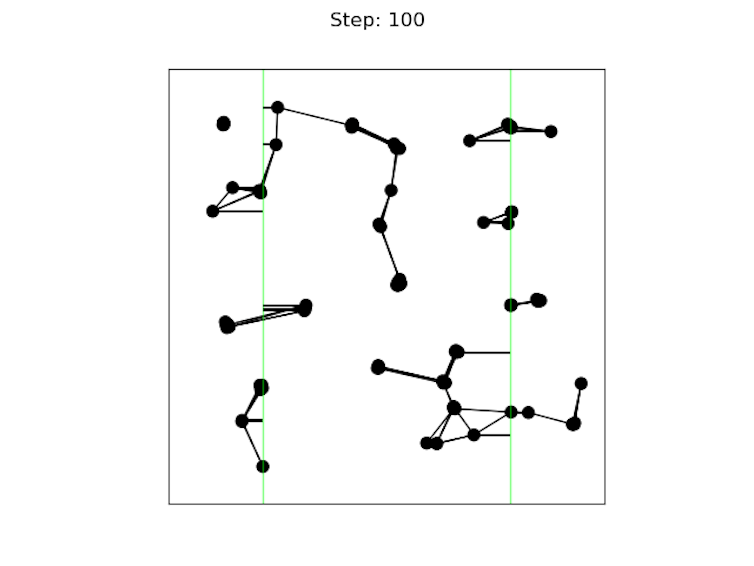

Figure 2: Capturing filter bubbles with fake news

At time step 26, we introduced two pieces of fake news into the model. These were represented as special sorts of individuals that had an opinion in only one dimension of the opinion space and no opinion at all in the other. Further, these “individuals” didn’t seek to connect to other individuals and they never shifted their opinion as a result of ordinary individuals linking to them. They are represented by the two green lines in Figure 2.
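A fake-news "individual" with an opinion on only one axis behaves, in two dimensions, like a line: its distance to an ordinary individual depends only on that axis. The sketch below encodes this, assuming (hypothetically) that linking to fake news pulls an individual towards it on that axis alone and that ordinary individuals choose between real neighbours and fake news purely by distance. `FakeNews`, `pull` and `nearest_partner` are illustrative names, not the authors'.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FakeNews:
    """Hypothetical encoding of a fake-news 'individual': a fixed opinion on one
    axis and no opinion on the other, so in 2-D it is a line (a green line in
    Figure 2). It never seeks links and never moves."""
    axis: int
    value: float

    def distance(self, pos):
        # Distance from a point to the line: only the opinionated axis counts.
        return abs(pos[self.axis] - self.value)

    def pull(self, pos, rate):
        # A linked individual is drawn towards the line on that axis only;
        # its other opinions, and the fake news itself, stay put.
        moved = list(pos)
        moved[self.axis] += rate * (self.value - moved[self.axis])
        return moved


def nearest_partner(pos, individuals, fake_news):
    """Pick the closest potential partner, real individual or fake news."""
    options = [(math.dist(pos, other), other) for other in individuals]
    options += [(item.distance(pos), item) for item in fake_news]
    return min(options, key=lambda option: option[0])[1]


story = FakeNews(axis=0, value=0.8)
print(nearest_partner([0.3, 0.9], [[0.0, 0.0]], [story]))  # FakeNews(axis=0, value=0.8)
```

Because the line extends across the whole of the other axis, it is close to many individuals at once, whatever their opinion on that other issue.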

Over time (the figure shows time step 100), each piece of fake news breaks down the old filter bubbles and reels individuals towards its green line. The result is new, tighter filter bubbles that are very stable over time.

Information warfare is a threat to our Enlightenment foundations

These are the conventional tools of demagogues throughout history, but this agitprop is now packaged in ways perfectly suited to the new environment. Projected against the West, this material seeks to increase political polarisation in our public sphere.

Rather than actually changing an election outcome, it seeks to prevent the creation of any coherent worldview. It encourages the creation of filter bubbles in which emotion is privileged over reason and targets are immunised against real information and rational consideration.

These models confirm Lin and Kerr’s hypothesis. “Traditional” cyber warfare is not an existential threat to Western civilisation. We can rebuild, and have rebuilt, our societies after kinetic attacks. But information warfare in cyberspace is such a threat.

The Enlightenment gave us reason and reality as the foundations of political discourse, but information warfare in cyberspace could replace reason and reality with rage and fantasy. We don’t know how to deal with this yet.