by Sydney J. Freedberg Jr.

WASHINGTON: Behind the bright buzzwords about artificial intelligence, the dreary reality is that machine learning algorithms only work if they’re trained on large sets of data — and your dataset may be too small, mislabeled, inaccurate, or outright falsified by malicious actors. As officials from the NSA, NGA, and Armywarned today, big data is just a big problem if that data is bad.

WASHINGTON: Behind the bright buzzwords about artificial intelligence, the dreary reality is that machine learning algorithms only work if they’re trained on large sets of data — and your dataset may be too small, mislabeled, inaccurate, or outright falsified by malicious actors. As officials from the NSA, NGA, and Armywarned today, big data is just a big problem if that data is bad.

Big bad data is a particularly acute problem in the national security sector, where the chief threat is not mundane cyber-criminals but sophisticated and well-funded nation-states. If a savvy adversary knows what dataset your AI is training on — and because getting enough good data is so difficult, a lot of datasets are widely shared — they at least have a head start in figuring out how to deceive it. At worst, the enemy can feed you false data so your AI learns the version of reality they want it to know.

And it may be hard to figure out why your AI is going wrong. The inner workings of machine learning algorithms are notoriously opaque and unpredictable even to their own designers, so much so that engineers in such can’t-fail fields as flight controls often refuse to use them. Without an obvious disaster — like trolls teaching Microsoft’s Tay chatbot to spew racist bile within hours of coming online, or SkyNet nuking the world — you may never even realize your AI is consistently making mistakes.

Inflatable decoys like this fake Sherman tank deceived Hitler about the real target for D-Day

The Science of Deception

“All warfare is based on deception,” Sun Tzu wrote 2,500 years ago. But as warfare becomes more technologically complex, new kinds of deception become possible. In World War II, a “ghost army” of inflatable tanks that looked real enough in photographs made Hitler think the D-Day invasion was a feint. Serbian troops in 1999 added pans of warm water to their decoys to fool NATO’s infrared sensors as well as visual ones. In 2016, Russian troll factories exploited social media to stoke America’s homegrown political feuds with fake news and artificial furor. Now comes AI, which can sort through masses of data faster than armies of human analysts and find patterns no unaided human mind would see — but which can also make mistakes no human brain would fall for, a phenomenon I’ve started calling artificial stupidity.

“AI security — this is a brand new field,” NSA research director Deborah Frincketold the Accelerated AI conference this morning. “While most of you may not have to deal with that, we and the DoD need to understand that a smart adversary, who also has AI, computational power, and the like, is likely to be scrutinizing what we do.”

The intelligence community can still tap into the vast ferment of innovation in the private sector, Frincke and other officials at the conference said. It can even use things like open-source algorithms for its own software, with suitable modifications.

“If somebody else can do it, I don’t want to be doing it,” said Frincke, whose agency funds research at major universities. “There’s more sharing you might think between the federal government, even sensitive agencies, and (industry and academia), drawing on the kinds of algorithms we find outside.”

But sharing data is “harder” than borrowing algorithms, she emphasized: “The data is precious and is the lifeblood.”

An adversary who has access to the dataset your AI trained on can figure out what its likely blind spots are, said Brian Sadler, a senior scientist at the Army Research Laboratory. “If I know your data, I can create ways to fake out your system,” Sadler told me. There’s even a growing field of “adversarial AI” which researches how different artificial intelligences might try to outwit each other, he said — and that work is underway both in the government and outside.

“There are places where we overlap,” Sadler said. “The driverless car guys are definitely interested in how you might manipulate the environment, (e.g.) by putting tape on a stop sign so it looks like a yield sign” — not to a human, whose brain evolved to read contextual cues, but to a literal-minded AI that can only analyze a few narrow features of complex reality. Utility companies, the financial sector, and other non-government “critical infrastructure” are increasingly alive to the dangers of sophisticated hacking, including by nation-states, and investing in ways to check for malicious falsehoods in their data.

That said, while the military and intelligence community draw on the larger world of commercial innovation whenever they can, they still need to fund their own R&D on their own specific problems with adversarial AI and deceptive data. “It’s definitely something we worry about and it’s definitely something we’re doing research on,” Sadler said. “We are attacking adversarial questions which commercial is not.”

Air Force Cyber Protection Team exercise

Big Data, Smart Humans

You can’t fix these problems just by throwing data at algorithms and hoping AI will solve everything for you without further human intervention. To start with, “even Google doesn’t have enough labeled training data,” Todd Myers, automation lead at the National Geospatial Intelligence Agency (NGA), told me after he spoke to the AI conference. “That’s everybody’s problem.”

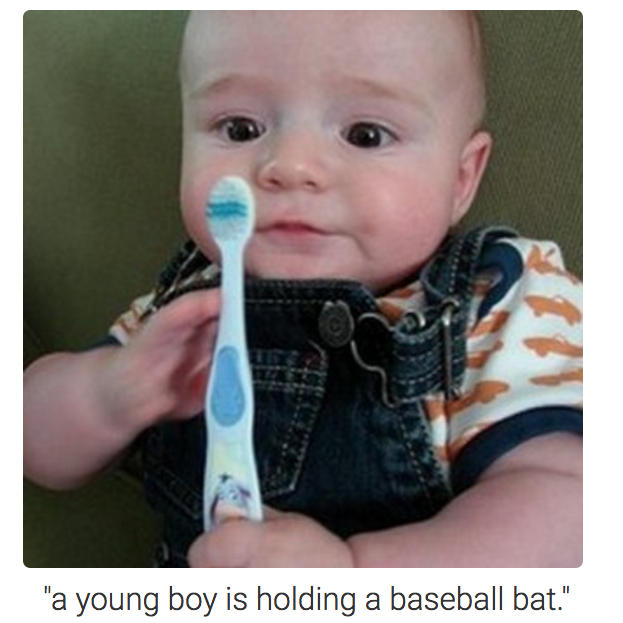

Raw data isn’t a good diet for machine-learning algorithms in their vulnerable training stage. They need data that’s been labeled, so they can check their conclusions against a baseline of truth: Does this video show a terrorist or a civilian? Does this photo show an enemy tank or just a decoy? Is this radio signal a coded transmission or just meaningless noise? Even today, advanced AI can make absurd mistakes, like confusing a toothbrush for a baseball bat (see photo). Yes, ultimately, the goal is to get the AI good enough that you can unleash it on real-world data and classify it correctly, far faster than a legion of human analysts, but while the AI’s still learning, someone has to check its homework to make sure it’s learning the right thing.

So who checks the homework? Who labels the data in the first place? Generally, that has to be humans. Sure, technical solutions like checking different data against each other can help. Using multiple sensors — visual, radar, infrared, etc. — on a single target can catch deceptions that would trick any single system. But, at least for now, there’s still no substitute for an experienced and intelligent human brain.

“If you have a lot of data, but you don’t have smart people who can help you get to ground truth,” Frincke said, “the AI system might give you a mechanical answer, but it’s not necessarily going to give you what you’re looking for in terms of accuracy.”

“Smart people” doesn’t just mean “good with computers,” she emphasized. It’s crucial to get the programmers, big data scientists, and AI experts to work with veteran specialists in a particular subject area — detecting camouflaged military equipment, for example, or decoding enemy radio signals.

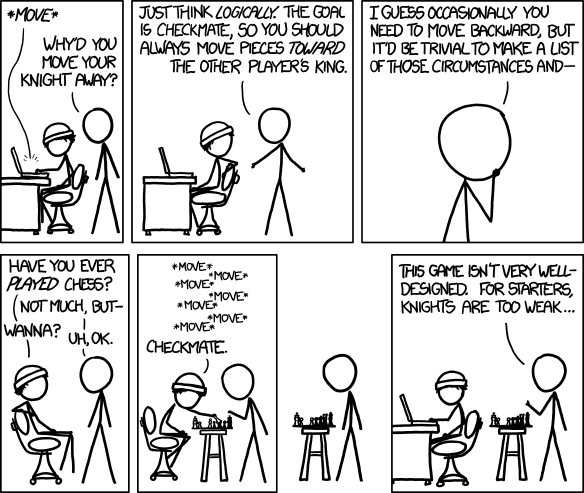

Otherwise you might get digital tools that work in the ideal world of abstract data but can’t cope with the specific physical phenomena you’re trying to analyze. There are few things more dangerous than a programmer who assumes their almighty algorithm can conquer every subject without regard for pesky details, as scientist turned cartoonist Randall Munroe makes clear:

A successful AI project has to start with “a connection between someone who understands the problem really well and is the expert in it, and a research scientist, a data scientist, a team that knows how to apply the science,” Frincke said. That feedback should never end, she added. As the AI matures beyond well-labeled training data and starts interacting with the real world, providing real intelligence to operational users, those users need to provide constant feedback to a software team making frequent updates, patches, and corrections.

At NSA, that includes giving users the ability to correct or confirm the computer’s output. In essence, it’s crowdsourced, perpetual training for the machine learning algorithms.

“We gave the analysts something that I haven’t seen in a lot of models… and that is to let the analysts, when they look at a finding or recommendation or whatever the output of the system is, to say yea or nay, thumbs-up or thumbs down,” Frincke said. “So if our learning algorithm and the model that we have weren’t as accurate as we would like, they could improve that — and over time, it becomes very strong.”

No comments:

Post a Comment