By Zachary Kallenborn

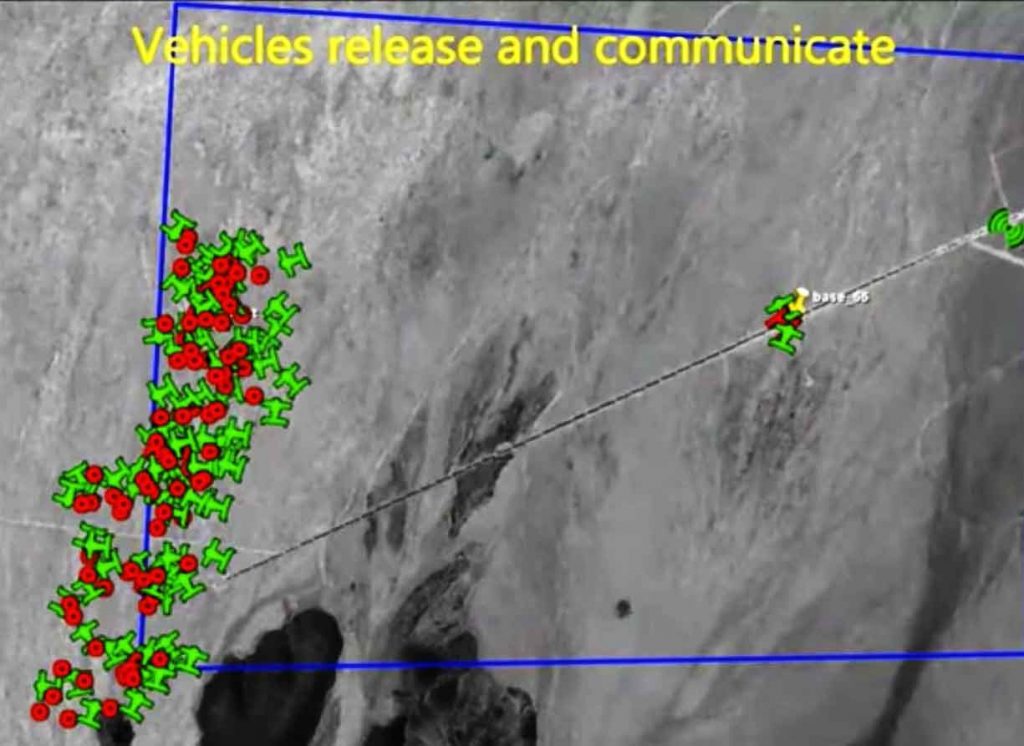

In October 2016, the United States Strategic Capabilities Office launched 103 Perdix drones from three F/A-18 Super Hornets. The drones communicated with one another using a distributed brain, assembling into a complex formation, traveling across a battlefield, and reforming into a new formation. The swarm over China Lake, California, was the sort of “cutting-edge innovation” that would keep America ahead of its adversaries, then-Secretary of Defense Ash Carter said in a Defense Department press release. But the Pentagon buried the lede: The Strategic Capabilities Office did not actually create the swarm; engineering students at the Massachusetts Institute of Technology (MIT) did, using an “all-commercial-components design.”

MIT engineering students are among the best in the world, and they have exactly the right skills for the task, but they are still students. If drone swarming technology is accessible enough that students can develop it, global proliferation is virtually inevitable. And, of course, world militaries are deploying new drone technology so quickly that even journalists and experts who follow the issue have trouble keeping up, while much drone swarm research is surely taking place outside the public eye. With many countries announcing what they call “swarms,” this technology will at some point (and arguably that point is now) pose a real risk: In theory, swarms could be scaled to tens of thousands of drones, creating a weapon akin to a low-yield nuclear device. Think “Nagasaki” to get a sense of the death toll a massive drone swarm could theoretically inflict. (In most cases, drone swarms are likely to cause far less harm than this, but such extremes are absolutely possible.)

Creating a drone swarm is fundamentally a programming problem. Drones can be easily purchased at electronics stores or built from duct tape and plywood, as the Islamic State of Iraq and Syria did. The challenge is getting the individual units to work together. That means developing communication protocols so the drones can share information, manage conflicts among themselves, and collectively decide which drones should accomplish which tasks. To do so, researchers must create task allocation algorithms, which allow the swarm to assign specific tasks to specific drones. Once created, the algorithms can be readily shared and just need to be coded into the drones.

Because battlefields are complex—with soldiers, citizens, and vehicles entering or leaving, and environmental hazards putting the drones at risk—a robust military capability still requires serious design, testing, and verification. And advanced swarm capabilities like heterogeneity (drones of different sizes or operating in different domains) and flexibility (the ability to easily add or subtract drones) are still quite novel. But getting the drones to collaborate and drop bombs is not.

Armed, fully autonomous drone swarms are future weapons of mass destruction. While they are unlikely to achieve the same scale of harm as the Tsar Bomba, the famous Soviet hydrogen bomb, or most other major nuclear weapons, swarms could cause the same level of destruction, death, and injury as the nuclear weapons used on Hiroshima and Nagasaki, that is, tens of thousands of deaths. This is because drone swarms combine two properties unique to traditional weapons of mass destruction: mass harm and a lack of control to ensure the weapons do not harm civilians.

Countries are already putting together very large groupings of drones. In India’s recent Army Day Parade, the government demonstrated what it claimed is a true drone swarm of 75 drones and expressed the intent to scale the swarm to more than 1,000 units. The US Naval Postgraduate School is also exploring the potential for swarms of one million drones operating at sea, under sea, and in the air. To hit Nagasaki levels of potential harm, a drone swarm would only need 39,000 armed drones, and perhaps fewer if the drones had explosives capable of harming multiple people. That might seem like a lot, but China already holds a Guinness World Record for flying 3,051 pre-programmed drones at once.

Experts in national security and artificial intelligence debate whether a single autonomous weapon could ever adequately discriminate between civilian and military targets, let alone thousands or tens of thousands of drones. Noel Sharkey, an AI expert at the University of Sheffield, for example, believes that in certain narrow contexts such a weapon might be able to make that distinction within 50 years. Georgia Tech roboticist Ronald Arkin, meanwhile, believes lethal autonomous weapons may one day prove better than humans at reducing civilian casualties and property damage, but that day hasn’t come yet. Artificial intelligence cannot yet manage the complexities of the battlefield.

Drone swarms worsen the risks posed by lethal autonomous weapons. Even if the risk of a well-designed, tested, and validated autonomous weapon hitting an incorrect target were just 0.1 percent, that would still imply a substantial risk when multiplied across thousands of drones: a swarm of 1,000 such drones would be expected to strike about one wrong target, and a swarm of 10,000 about ten. As military AI expert Paul Scharre has rightly noted, how frequently autonomous weapons are deployed and used matters, too; a weapon used frequently has more opportunity to err. And as countries rush to develop these weapons, they may not always build well-designed, tested, or validated machines.
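To make that multiplication concrete, here is a minimal Python sketch. It assumes, purely for illustration, that every drone independently has the same 0.1 percent chance of hitting the wrong target; the function names are hypothetical, and the swarm sizes are simply figures cited in this article.

```python
"""Back-of-the-envelope swarm error arithmetic.

Illustrative assumption (not from the article): each drone is an independent
trial with the same probability p of hitting an incorrect target.
"""


def expected_errors(p: float, n: int) -> float:
    """Expected number of misdirected strikes across n independent drones: p * n."""
    return p * n


def prob_at_least_one_error(p: float, n: int) -> float:
    """Probability that at least one of n independent drones errs: 1 - (1 - p)^n."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    p = 0.001  # the illustrative 0.1 percent per-drone error rate
    # Swarm sizes mentioned in the article: India's 75-drone swarm, its 1,000-drone
    # goal, China's 3,051-drone record, and the 39,000-drone "Nagasaki" figure.
    for n in (75, 1_000, 3_051, 39_000):
        print(
            f"{n:>6} drones: expected errors = {expected_errors(p, n):7.2f}, "
            f"P(at least one) = {prob_at_least_one_error(p, n):.4f}"
        )
```

Under this independence assumption, a 1,000-drone swarm has roughly a 63 percent chance of at least one misdirected strike. Real swarm errors may well be correlated, since bad information can spread between communicating drones, so this model is only a rough baseline rather than a real risk estimate.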

Communication between drones means an error in one drone may propagate across the whole swarm. Drone swarms also risk so-called “emergent error.” Emergent behavior, a term for the complex collective behavior that arises from the actions and interactions of individual units, is a powerful advantage of swarms, enabling behaviors like self-healing, in which the swarm re-forms to accommodate the loss of a drone. But emergent behavior also means that inaccurate information shared by individual drones may lead to collective mistakes.

The proliferation of swarms will reverberate throughout the global community, as the proliferation of military drones already has. In the 2020 conflict between Armenia and Azerbaijan, Azeri drones proved decisive. Open-source intelligence indicates Azeri drones devastated the Armenian military, destroying 144 tanks, 35 infantry fighting vehicles, 19 armored personnel carriers, and 310 trucks. The Armenians surrendered quickly, and the Armenian people were so upset that they assaulted the speaker of their parliament.

Drone swarms will likely be extremely useful for carrying out mass-casualty attacks. They may be useful as strategic deterrence weapons for states without nuclear weapons and as assassination weapons for terrorists. In fact, would-be assassins launched two drones against President Nicolás Maduro in Venezuela in 2018. Although he escaped, the attack helps illustrate the potential of drone swarms: Had the assassins launched 30 drones instead, the outcome might have been different.

Drone swarms are also likely to be highly effective delivery systems for chemical and biological weapons through integrated environmental sensors and mixed-arms tactics (e.g., combining conventional and chemical weapons in a single swarm), worsening already fraying norms against the use of these weapons.

Even if drone swarm risks to civilians are reduced, drone swarm error creates escalation risks. What happens if the swarm accidentally kills soldiers in a military not involved in the conflict?

Countries need to build global norms and treaties to limit drone swarm proliferation, especially of the most dangerous swarms. From including swarms in re-energized UN discussions about lethal autonomous weapons to working to prevent the technology from proliferating, there are many steps the international community should take to limit the risks of swarms. Simple transparency might go a long way. The UN Register of Conventional Arms should include a general category for unmanned systems, with a subcategory for swarming-capable drones. This way, the global community could better monitor the weapons’ proliferation.

The world needs to debate the growing threat of drone swarms. This debate shouldn’t wait until lethal drone swarms are used in war or in a terrorist attack but should happen now.
