Sulgiye Park, Rodney C. Ewing

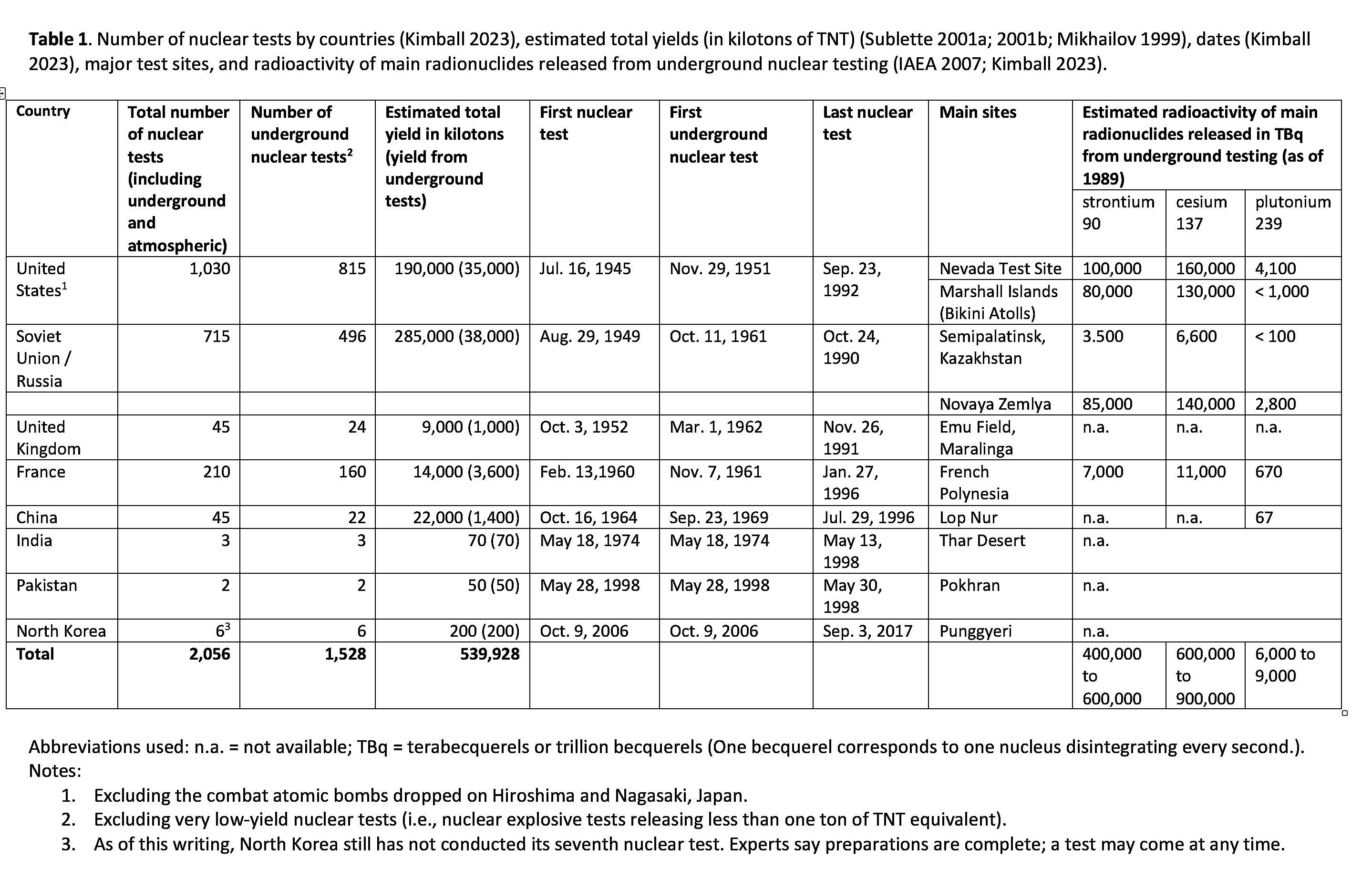

Since Trinity—the first atomic bomb test on the morning of July 16, 1945, near Alamogordo, New Mexico—the nuclear-armed states have conducted 2,056 nuclear tests (Kimball 2023). The United States led the way with 1,030 nuclear tests, or almost half of the total, between 1945 and 1992. Second is the former Soviet Union, with 715 tests between 1949 and 1990, and then France, with 210 tests between 1960 and 1996. Globally, nuclear tests culminated in a cumulative yield of over 500 megatons, which is equivalent to 500 million tons of TNT (Pravalie 2014). This surpasses by over 30,000 times the yield of the first atomic bomb dropped on Hiroshima on August 6, 1945.

Atmospheric nuclear tests prevailed until the early 1960s, with bombs tested by various means: aircraft drops, rocket launches, suspension from balloons, and detonation atop towers above ground. Between 1945 and 1963, the Soviet Union conducted 219 atmospheric tests, followed by the United States (215), the United Kingdom (21), and France (3) (Kimball 2023).

In the early days of the nuclear age, little was known about the impacts of radioactive “fallout,” the residual and activated radioactive material that falls to the ground after a nuclear explosion. The impacts became clearer in the 1950s, when the Kodak chemical company detected radioactive contamination on its film, which was linked to radiation resulting from the atmospheric nuclear tests (Sato et al. 2022). American scientists, like Barry Commoner, also discovered the presence of strontium 90 in children’s teeth originating from nuclear fallout thousands of kilometers from the original test site (Commoner 1959; Commoner 1958; Reiss 1961). These discoveries alerted scientists and the public to the consequences of radioactive fallout from underwater and atmospheric nuclear tests, particularly tests of powerful thermonuclear weapons that had single event yields of one megaton or greater.

Public concern about the effects of radioactive contamination led to the Limited (or Partial) Test Ban Treaty, signed on August 5, 1963. The treaty banned nuclear tests in the atmosphere, in outer space, and underwater (Atomic Heritage Foundation 2016; Loeb 1991; Rubinson 2011). And while the treaty was imperfect, with only three signatories at the beginning (the United States, the United Kingdom, and the Soviet Union), the ban succeeded in significantly curbing atmospheric release of radioactive isotopes.

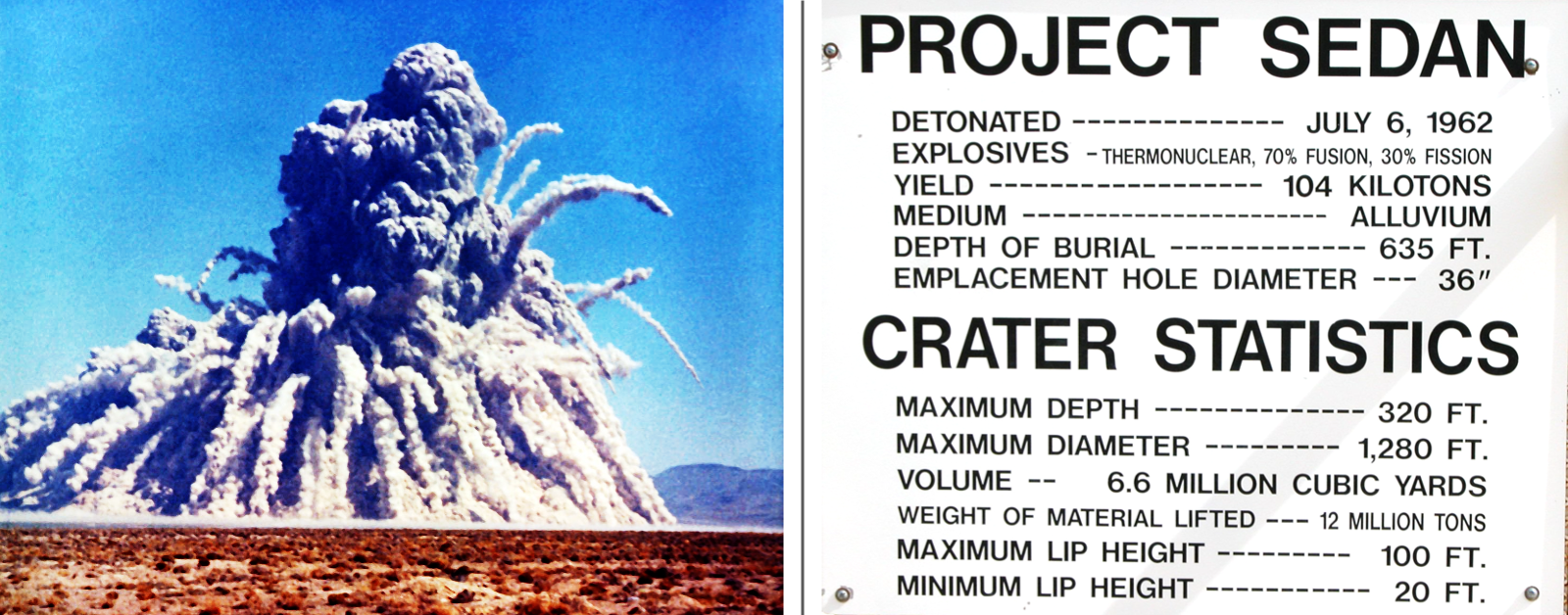

After the entry into force of the partial test ban, almost 1,500 underground nuclear tests were conducted globally. Of the 1,030 US nuclear tests, nearly 80 percent, or 815 tests (See Table 1), were conducted underground, primarily at the Nevada Test Site.[1] As for other nuclear powers, the Soviet Union conducted 496 underground tests, mostly in the Semipalatinsk region of Kazakhstan, France conducted 160 underground tests, the United Kingdom conducted 24, and China 22. These underground nuclear tests were in a variety of geologic formations (e.g., basalt, alluvium, rhyolite, sandstone, shale) to depths up to 2,400 meters.

Left: The explosion of the Storax Sedan underground nuclear test. (Credit: US Government, Public domain, via Wikimedia Commons). Right: Close-up of a sign at the site of the Sedan nuclear test.

In 1996, after some international efforts to curb nuclear testing and promote disarmament, the Comprehensive Test Ban Treaty (CTBT) was negotiated, which prohibited all nuclear explosions (General Assembly 1996). Since the negotiation of the CTBT, India and Pakistan conducted three and two underground nuclear tests, respectively, in 1998. And today, North Korea stands as the only country to have tested nuclear weapons in the 21st century.

While underground nuclear tests were chosen to limit atmospheric radioactive fallout, each test still caused dynamic and complex responses within crustal formations. Mechanical effects of underground nuclear tests span from the prompt post-detonation responses to the enduring impacts resulting in radionuclide release, dispersion, and migration through the geosphere. Every test of nuclear weapons adds to a global burden of released radioactivity (Ewing 1999).

Objectives, types, and timeline of underground nuclear tests

The scope of the nuclear testing programs evolved significantly throughout the 1980s, as the objectives of those tests ranged from weapons effect analysis to fundamental physics research to refining critical elements of warhead designs and ensuring the safety and effectiveness of the nuclear stockpile. Later, nuclear tests were also performed to study methods for detecting tests conducted by other countries. In the United States, most underground nuclear tests were conducted at the Nevada Test Site, a remote location originally selected for its arid climate and low population density, which offered the safety and security needed to conduct the tests (Brady et al. 1984, 35; Laczniak et al. 1996).

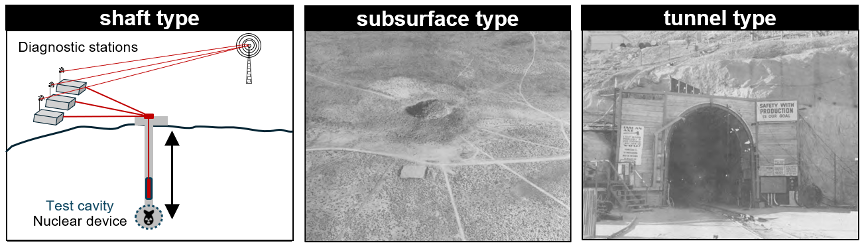

The setup of underground nuclear tests involved distinct emplacement methods tailored to various purposes. These methods included either a deep vertical shaft; a subsurface chamber (in which a nuclear device is emplaced to allow the explosion such that rock fragments are ejected, forming a crater); or a horizontal tunnel (with a nuclear device emplaced in a mined opening, intended for complete containment of the explosion). Each emplacement type was strategically designed to study different aspects of nuclear detonations and weapon performance. In turn, the type of explosion and emplacement determined the content and timing of the radioactive gases and particles released by each test. (See Figure 1, below.)

Figure 1. The three main emplacement types of underground nuclear tests.

To move nuclear testing underground, “big holes,” typically one to three meters in diameter, were drilled to varying depths, dictated by the objectives of the test, the design of the bomb being tested, and the properties of the geological formation hosting the test. The depth of the test was determined depending on the expected explosion yield and the characteristics of the geology.[2]

Each underground nuclear test followed a series of well-determined steps. The nuclear device was first placed inside a long cylindrical canister equipped with diagnostic instruments (e.g., radiation detectors) and electrical wires running back to an aboveground control station. The canister was then sealed and lowered into the shaft or tunnel, and the hole was filled with sand, gravel, and coal tar epoxy plugs to contain the debris from the detonation. Once the device was emplaced, the nuclear detonation was remotely triggered and the data generated by the explosion (e.g., the explosive yield and detonation mechanisms) were transmitted through fiber-optic cables to recording equipment housed in the aboveground station. Scientists analyzed this data to gain insights into the performance and behavior of nuclear devices, contributing to advances in warhead design, weapon efficacy, detectability of nuclear explosions, and safety measures.

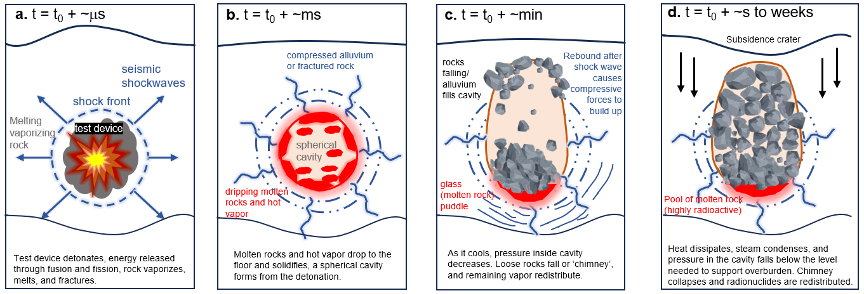

A rapid sequence of events unfolds when the nuclear device is triggered underground. (See Figure 2.) The detonation first causes an instantaneous chain reaction, releasing an immense surge of energy composed of heat, light, and shockwaves. Plasma (a blend of superheated particles) and a thermal pulse (a burst of intense heat) that originate from the point of detonation expand rapidly outward within milliseconds of the explosion. Shockwaves propagate through the surrounding rock and soil, causing seismic disturbances and ground movement. Subsequently, the release of energy from fusion and fission reactions creates a void, expanding and vaporizing the nearby rock. (Weapons can rely on both types of reactions: fusion involves “fusing” atoms to release energy, and fission involves splitting them.) In the process of rock vaporization, gases are produced, and the thermodynamic properties and fate of these gases depend on the disturbed rock properties (Adushkin and Spivak 2015).

Figure 2. Sequence of events of underground nuclear test detonation.

The energy from nuclear explosions is incredibly high, with pressures ranging from one to 10 terapascals (10 million to 100 million standard atmospheres) and temperatures reaching up to 10 million degrees Celsius (Glasstone and Dolan 1977; Teller et al. 1968). For comparison, the pressures and temperatures from a nuclear explosion are up to 28 times and 1,900 times higher, respectively, than those of the Earth’s core. At this point, the spherical cavity is a fireball, the heat from which causes circumferential compressional stress conditions, just as a balloon expands equally in all directions when air is blown into it (Figure 2a). The size and timing of the cavity expansion depend on the explosion yield and emplacement medium (i.e., rock types). Hard rocks (e.g., basalt, granite, or sandstone) exposed to a nuclear detonation form a smaller cavity in a shorter time. The explosions produce a cavity radius of eight to 12 meters (m) per kiloton (kt) raised to the power of one-third (m/kt^(1/3)), and the cavity reaches that size in 30 to 50 milliseconds (ms) per kt^(1/3). In softer rocks (e.g., tuff or alluvium) the corresponding figures are 15 to 17 m/kt^(1/3) for radius and 70 to 90 ms/kt^(1/3) for expansion time (Adushkin and Spivak 2015). The stress from this force acts as a glue that holds together the fractures around the cavity (Figure 2b).
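The radius and expansion-time figures quoted above lend themselves to a quick estimate. The following is a minimal sketch, assuming that both quantities scale with the cube root of the yield and using only the coefficient ranges given in the text; the names `SCALING` and `cavity_estimate` are hypothetical, and the 8-kiloton example yield is arbitrary.

```python
# Cube-root scaling of cavity radius and expansion time.
# Coefficient ranges are the ones quoted in the text
# (Adushkin and Spivak 2015); all names here are illustrative.

SCALING = {
    # medium: ((radius_min, radius_max) in m/kt^(1/3),
    #          (time_min, time_max) in ms/kt^(1/3))
    "hard": ((8.0, 12.0), (30.0, 50.0)),
    "soft": ((15.0, 17.0), (70.0, 90.0)),
}


def cavity_estimate(yield_kt, medium):
    """Return ((r_min, r_max) in meters, (t_min, t_max) in milliseconds)."""
    if yield_kt <= 0:
        raise ValueError("yield_kt must be positive")
    (r_lo, r_hi), (t_lo, t_hi) = SCALING[medium]
    root = yield_kt ** (1.0 / 3.0)  # cube root of the yield in kilotons
    return (r_lo * root, r_hi * root), (t_lo * root, t_hi * root)


# Example: an arbitrary 8-kiloton shot, whose cube root is 2.
hard_radius_m, hard_time_ms = cavity_estimate(8.0, "hard")
soft_radius_m, soft_time_ms = cavity_estimate(8.0, "soft")
print("hard rock:", hard_radius_m, hard_time_ms)
print("soft rock:", soft_radius_m, soft_time_ms)
```

For the same yield, the soft-rock ranges come out larger for both radius and expansion time, which is the contrast the paragraph describes.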

As internal pressure decreases minutes after the explosion, much like a balloon deflating, the state of matter in the cavity changes. Gases, vaporized materials, and fragmented rocks are ejected from the cavity while molten rock flows to the bottom of the cavity (Figure 2c). With further cooling and condensation, the cavity collapses, causing loose rocks to fall or ‘chimney’ down to the cavity, while gases migrate through the rock mass into the cavity (Figure 2d). Chimney collapse happens anywhere from minutes to months after the detonation, depending on the test conditions and the geology of the test site. Heat eventually dissipates through conduction and radiation, and the quenched molten rock forms a glass as a solid mass at the base of the cavity, just as melted nuclear fuel accumulates and solidifies at the bottom of damaged reactor vessels, such as at three of the Fukushima Daiichi reactors. This highly radioactive glass contains refractory actinides (particles that are resistant to high temperatures and chemical attacks) such as plutonium, americium, and uranium, as well as fission products (fragments of lighter atomic nuclei produced by nuclear fission) and activation products (materials that have been made radioactive by neutron activation). The final size of the cavity is related to the explosive yield, such that surveying of underground nuclear cavities of known geologic characteristics offers insights into the device’s explosive yield.[3]

After underground nuclear detonations, a subsidence crater (or depression) often leaves a visible scar, typical of nuclear test sites (see Figure 3). The size of a crater can be estimated as a function of the detonation’s depth and yield. For a given depth, an underground test of 20- to 150-kiloton yield typically forms a crater with a radius of 15 to 60 meters (Laczniak et al. 1996). The largest underground test conducted by the United States, the 5-megaton Cannikin test on November 6, 1971 (Atomic Energy Commission 1971a), was detonated at nearly 2,000 meters depth; it lifted the ground by six meters and formed a subsidence area of 1,270 meters in length by 91 meters in width (Morris, Gard, and Snyder 1972). If a bomb of the same size were detonated on the surface, the local fallout would affect an area over 5,000 square kilometers, with a dose rate of absorbed ionizing radiation of about 1,000 rads per hour (Wellerstein n.d.). (The rad is a unit for measuring the amount of ionizing radiation absorbed. One rad corresponds to 0.01 joule of energy absorbed per kilogram of matter.) A dose of more than 1,000 rads delivered to the entire body within a day is generally considered to be fatal.

Figure 3. Surface craters left by underground nuclear tests at Yucca Flat, one of the main regions of the Nevada Test Site.

Containment failures and nuclear accidents

Underground nuclear tests are designed to limit radioactive fallout and surface effects. However, containment methods are not foolproof, and radioisotopes (unstable, radioactive forms of elements, often with an excess of neutrons) can leak into the surrounding environment and atmosphere, posing potential risks to ecosystems and human health.

Instances of radiation leaks were not uncommon, especially in the early underground nuclear tests. There were challenges to maintaining simultaneous diagnostics during nuclear detonations and containment of radioactivity during the explosion. Out of the nearly 800 underground tests conducted at the Nevada Test Site, 32 tests led to considerable release of iodine 131—a highly active radionuclide with a half-life of eight days that poses health risks when absorbed by the thyroid gland (UNSCEAR 1993). At times, the maximum exposures to ionizing radiation recorded by self-reading pocket dosimeters reached 1,000 milliroentgen, where one roentgen deposits 0.96 rad of absorbed dose in soft tissue (Schoengold and Stinson 1997). While some containment failures with a relatively “controlled” release of radiation were purposefully made for tunnel access, many were unintentional.
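The eight-day half-life quoted above implies that released iodine 131 decays away within a few months. The following is a minimal sketch of the standard exponential-decay relation, assuming the rounded half-life from the text; the function name `fraction_remaining` and the chosen time points are illustrative.

```python
# Radioactive decay with a fixed half-life:
# fraction remaining = 0.5 ** (elapsed time / half-life).

I131_HALF_LIFE_DAYS = 8.0  # rounded value quoted in the text


def fraction_remaining(elapsed_days, half_life_days=I131_HALF_LIFE_DAYS):
    """Fraction of the original activity left after elapsed_days."""
    if elapsed_days < 0 or half_life_days <= 0:
        raise ValueError("elapsed_days must be >= 0 and half_life_days > 0")
    return 0.5 ** (elapsed_days / half_life_days)


# Illustrative time points: one half-life, one month, ten half-lives.
for days in (8, 30, 80):
    print(f"after {days:>2} days: {fraction_remaining(days):.6f} of the initial activity")
```

After ten half-lives (80 days), less than one-thousandth of the initial activity remains, which is why iodine 131 is mainly a short-term hazard following a release.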

Unintended radioactive releases from underground nuclear tests occurred through venting or seeps, where fission products and radioactive materials were uncontrollably released, driven by pressure from shockwave-induced steam or gas. In rare cases, more serious nuclear accidents occurred due to incomplete geological assessments of the surrounding medium in preparation for the test. A notable example is the accidental release from the Baneberry underground nuclear test on December 18, 1970, which, according to the federal government, resulted from an “unexpected and unrecognized abnormally high water content in the medium surrounding the detonation point” (Atomic Energy Commission 1971b). The higher-than-expected underground water content increased the energy transfer from the detonation to the surrounding rocks and soil, while prolonging the duration of the high-pressure phase in the cavity. The sustained stresses and pressures over longer periods of time altered the integrity of the containment structures, which failed and released approximately 80,000 curies (the curie is a unit for measuring radioactivity) of radioactive iodine 131 into the atmosphere.

As a result of this accident, 86 on-site workers were exposed to ionizing radiation (Atomic Energy Commission 1971b). The maximum recorded dose to an on-site worker’s thyroid was 3.8 rems, corresponding to 38 percent of the Federal Radiation Council’s quarterly guide for the thyroid (Federal Radiation Council and Protection Division 1961). (The rem is a unit of effective absorbed radiation in human tissue, equivalent to one roentgen of X-rays. One millirem is one-thousandth of a rem.) Offsite, the highest recorded inhalation dose reached 90 millirems, or 6 percent of the yearly protection guide for the thyroid. The highest radioiodine level in milk measured 810 picocuries per liter at a ranch near a test (equivalent to 1,080 millirems per year).[4]

That value is 270 times greater than today’s maximum contaminant level set by the Environmental Protection Agency (Environmental Protection Agency 2002).
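The factor of 270 can be checked against the two milk figures given above. The following is a minimal sketch that only rearranges numbers from the text; the variable names are illustrative, and the "implied" limits are back-calculated from the article's own ratio, not quoted from the regulation.

```python
# Back-calculate the contaminant limits implied by the figures in the text.

milk_activity_pci_per_l = 810.0    # highest radioiodine level in milk (from the text)
milk_dose_mrem_per_year = 1080.0   # equivalent annual dose (from the text)
ratio_to_limit = 270.0             # "270 times greater" (from the text)

# Limits implied by the ratio, expressed in each unit.
implied_limit_pci_per_l = milk_activity_pci_per_l / ratio_to_limit
implied_limit_mrem_per_year = milk_dose_mrem_per_year / ratio_to_limit

print(f"implied limit: {implied_limit_pci_per_l:.1f} pCi/L")
print(f"implied limit: {implied_limit_mrem_per_year:.1f} mrem/yr")
```

Both figures give the same ratio, so the activity and dose values in the paragraph are consistent with one another.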

Mechanical and radiation effects of underground nuclear tests

Three main factors affect the mechanical responses of underground nuclear tests: the yield, the device placement (i.e., depth of burial, chamber geometry, and size), and the emplacement medium (i.e., rock type, water content, mineral compositions, physical properties, and tectonic structure). These factors influence the physical response of the surrounding geological formations and the extent of ground displacement, which, in turn, determine the radiation effects by influencing the timing and fate of the radioactive gas release.

Every kiloton of explosive yield produces approximately 60 grams (3 × 10^23 fission product atoms) of radionuclides (Smith 1995; Glasstone and Dolan 1977). Between 1962 and 1992, underground nuclear tests had a total explosive yield of approximately 90 megatons (Pravalie 2014), producing nearly 5.4 metric tons of radionuclides. While the total radioactivity of the fission products is extremely large at the point of detonation (e.g., one minute after a nuclear explosion, the radioactivity of the fission products from a one-kiloton fission yield explosion is approximately 10^9 terabecquerels), it decreases quickly because of radioactive decay (Glasstone and Dolan 1977). (The becquerel is an international system unit for measuring radioactivity. One becquerel corresponds to the activity of radioactive material in which one nucleus decays per second.)
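The 5.4-metric-ton figure follows directly from the per-kiloton mass. The following is a minimal sketch of that arithmetic, assuming the approximate 60 grams per kiloton stated in the text applies uniformly to the whole 90 megatons; the names are illustrative.

```python
# Mass of radionuclides produced for a given total yield,
# using the approximate per-kiloton figure from the text.

GRAMS_PER_KILOTON = 60.0         # approximate value quoted in the text
KILOTONS_PER_MEGATON = 1_000.0
GRAMS_PER_METRIC_TON = 1_000_000.0


def radionuclide_mass_tonnes(yield_megatons):
    """Approximate radionuclide mass in metric tons for a yield in megatons."""
    if yield_megatons < 0:
        raise ValueError("yield_megatons must be non-negative")
    grams = yield_megatons * KILOTONS_PER_MEGATON * GRAMS_PER_KILOTON
    return grams / GRAMS_PER_METRIC_TON


# Total underground yield between 1962 and 1992, as given in the text.
underground_total_tonnes = radionuclide_mass_tonnes(90.0)
print(f"{underground_total_tonnes:.1f} metric tons")
```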

The radionuclides generated from a nuclear explosion consist of a mixture of:

- refractory species from the weapon materials that condense at high temperatures and partition into the “melt glass;”

- volatile species that condense at lower temperatures and widely disperse on rock surfaces throughout the entire volume of material disturbed by the detonation;

- fission products, which mostly decay by emission of beta and gamma radiation; and

- activation products, created by neutron irradiation of the surrounding rocks.

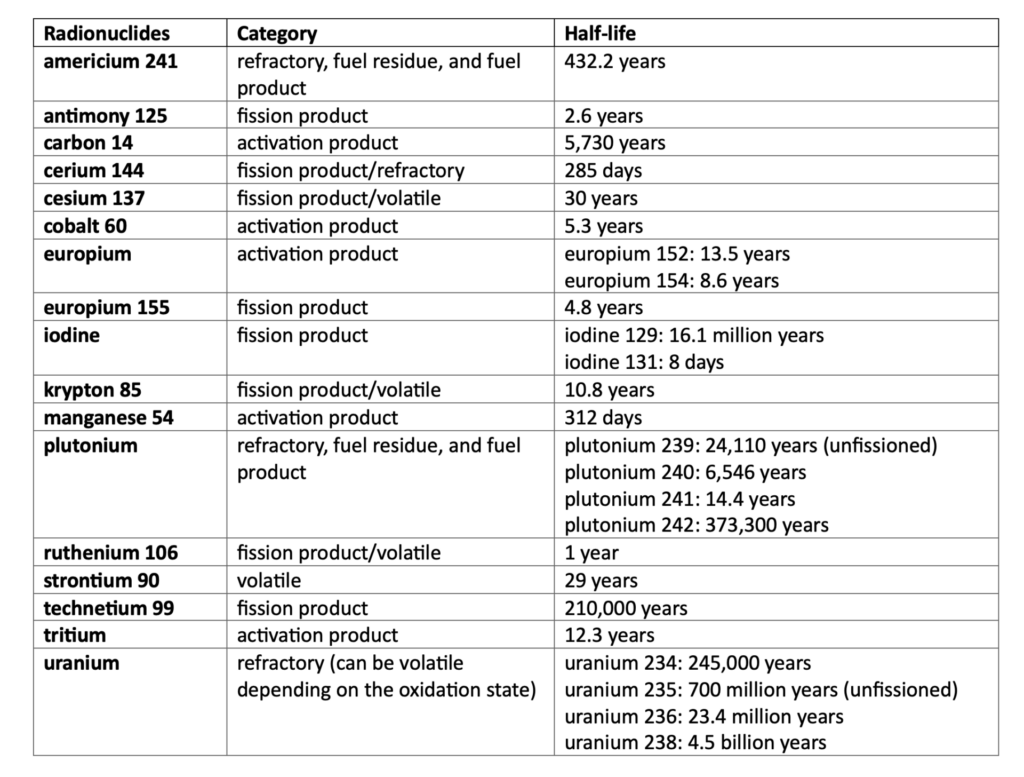

The initial distribution of these species is determined by the temperature and pressure history after the explosion, and by the chemical properties of the radionuclides produced (Table 2). Lethal doses of different radioisotopes in humans vary. For example, an uptake of a few milligrams of plutonium 239 per kilogram of tissue is a lethal dose based on animal studies (Voelz and Buican 2000). As of September 1992, when the United States conducted its last underground test, the total amount of radioactivity generated by the 43 long-lived radionuclides (with half-lives greater than 10 years) produced by the 828 underground nuclear tests conducted at the Nevada Test Site between 1951 and 1992 was estimated to be 4,890,000 terabecquerels (trillion becquerels) (Smith, Finnegan, and Bowen 2003).

Table 2. Major radionuclides associated with underground nuclear tests

The partitioning of radionuclides between the melt glass and rubble significantly impacts the subsequent transfer of radioactivity to groundwater. Radionuclides deposited on free surfaces are prone to dissolution in groundwater through ion exchange, desorption (the release of adsorbed atoms or molecules from a surface into the surrounding water), and surface-layer alteration processes, whereas refractory species that are largely partitioned into the melt glass are less accessible to groundwater. Even then, the release of these partitioned species depends on the rate of melt glass dissolution in contact with the groundwater, which is rather rapid due to the low stability of glass in contact with water. Nuclear detonation can also alter the physical properties of the surrounding rock formation, thereby accelerating the dissolution rate of the melt glass and the release of radionuclides into the groundwater along networks of fractures created by the blast.

As the shockwaves produced from a detonation propagate through the surrounding rock and soil, they induce a stress field within the geological environment on both the microscopic (0.1 to 100 micrometers) and mesoscopic (100 to 1,000 micrometers) scales. These stresses cause irreversible structural changes, which can compromise the physical integrity of the geologic formations. At the microscale, these changes can include microscopic fractures and/or dislocations within individual mineral grains. At the mesoscale, shock-induced changes include faulting and the formation of visible fractures or shock structures, including localized brecciation (i.e., the formation of angular fragments of rock).

The extreme temperatures generated during the nuclear detonation can change the composition of nearby rocks and form new minerals or glass depending on their chemistry, the duration of the thermal pulse (electromagnetic radiation generated by the particles in movement), and the hydrological setting. Temperatures produced by large explosions can change the permeability, porosity, and water storage capacity by creating new fractures, cavities, and chimneys. The radius of increased permeability (permeability is a measure of the ability of a porous material to allow fluids to pass through) can be calculated as a function of the resulting cavity radius, and in the case of the Nevada Test Site, it was typically seven times greater than the radius of the cavity (Adushkin and Spivak 2015). The explosion also affects the porosity of the surrounding rock. For example, a fully contained explosion of 12.5-kiloton yield in Degelen Mountain at the former Soviet Union’s Semipalatinsk test site resulted in up to a six-fold increase in porosity within the crush zone surrounding the cavity (Adushkin and Spivak 2015). Increased permeability and porosity of the surrounding rock can lead to more radionuclides being released, as more groundwater can pass through the geologic formation.

Hydrogeology and release of radioactivity

The main way contaminants can be moved from underground test areas to the more accessible environment is through groundwater flow. Concurrent with the changes in rock permeability and porosity, the residual deformations from nuclear explosions can change the interstitial fluid pressures and water compositions, subsequently modifying groundwater flow rates and directions. In turn, these changes, combined with the presence of water and gas-forming components in rocks, affect the extent of the damage zone and potential migration of radioactive particles into the subsurface environment.

Water affects the behavior of underground nuclear tests in several ways. First, it enhances the transmission of stress waves through the rock mass. This mechanism caused the Baneberry accident (Atomic Energy Commission 1971b). Second, water serves as the main transportation pathway for radionuclides—either in solution (i.e., chemically dissolved in water) or attached to colloids (i.e., dispersed insoluble particles suspended throughout water). The radionuclide particles are in the sub-micrometer range in size, with high surface areas (i.e., the area available for chemical reactions). Important radioisotopes that are particularly likely to interact with hydrodynamic processes include plutonium 239 and plutonium 240, which can adhere or sorb onto mobile mineral particulates in the aquifer and be transported by groundwater. And when contaminants encounter groundwater, their migration potential increases, with the movement depending on the rate and direction of groundwater flow—just like drivers use cars to move around faster and farther.

Even when isotopes do not interact with groundwater immediately after a detonation, the residual thermal effects from detonation can lead to lasting physical and chemical changes as the thermal pulse persists for up to 50 years after the explosion, long after groundwater has returned to the cavity system (Maxwell et al. 2000; Tompson et al. 2002). After the explosion, the residual heat is typically below the boiling point of water, and there is a thermal contribution by the decay of radionuclides. This residual heat can induce vertical, buoyancy-driven water flow, while accelerating the dissolution rate of the melt glass. The increased dissolution, in turn, increases the release of radionuclides, allowing mobile particles to rise to more permeable geologic zones and escape from the cavity or through the chimney system. For example, plutonium from the Nevada Test Site was found to have migrated 1.3 kilometers in 30 years in groundwater by means of colloid-facilitated transport (Kersting 1999). This migration distance contradicted previous models, considering that the groundwater levels at the site typically lie deeper than 200 meters below the surface; and two-thirds of underground tests at the Nevada Test Site were conducted at depths above the water table to ensure subsurface containment of radioactive by-products (Laczniak et al. 1996).
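The Nevada Test Site observation above can be expressed as an average apparent migration rate. The following is a minimal sketch that simply divides distance by elapsed time, using the 1.3 kilometers and 30 years reported in the text; it is not a transport model, real migration need not be steady, and the function name is illustrative.

```python
# Average apparent migration rate: distance travelled / time elapsed.
# This is a back-of-the-envelope figure, not a groundwater transport model.

def mean_migration_rate_m_per_year(distance_m, elapsed_years):
    """Average apparent migration rate in meters per year."""
    if elapsed_years <= 0:
        raise ValueError("elapsed_years must be positive")
    return distance_m / elapsed_years


# Plutonium at the Nevada Test Site: 1.3 km in 30 years (from the text).
nts_rate = mean_migration_rate_m_per_year(1_300.0, 30.0)
print(f"about {nts_rate:.0f} meters per year on average")
```

An average on the order of tens of meters per year helps explain why the observation was at odds with models that treated plutonium as essentially immobile in groundwater.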

Similarly, at a low-level radioactive waste management site at Los Alamos National Laboratory, where treated waste effluents were discharged into Mortandad Canyon, americium and plutonium were shown to migrate 30 meters in 33 years within the unsaturated zone (Penrose et al. 1990; Travis and Nuttall 1985). And in another example, plutonium was detected on colloids at more than four kilometers downstream of the source at Mayak, a nuclear waste reprocessing plant in Russia (Novikov et al. 2006). Given their long half-lives (Table 2), the ability of plutonium isotopes to migrate over time raises concerns about the long-term impacts and challenges in managing radioactive contamination.

In all these cases, colloid-facilitated transport allowed for the migration of radioactive particles through groundwater flow over an extended period—long after the nuclear tests or discharge occurred (Novikov et al. 2006). These cases have been confirmed using the distinctive isotopic ratios of key radionuclides to trace migrating radionuclides back to the specific tests or “shots,” making them a useful forensic tool to discern the sources of contaminants.

The risks associated with the environmental contamination from underground nuclear tests have often been considered low due to the slow movement of the groundwater and the long distance that separates it from publicly accessible groundwater supplies. But these studies demonstrate that, apart from the prompt effects of radioactive gas releases caused by instantaneous changes in geologic formations, long-term effects persist due to the evolving properties of the surrounding rocks long after the tests. Long-lived radionuclides can be remarkably mobile in the geosphere. Such findings underscore the necessity for sustained long-term monitoring efforts at and around nuclear test sites to evaluate the delayed impacts of underground nuclear testing on the environment and public health.

Enduring legacy

Nearly three decades after the five nuclear-armed states under the CTBT stopped testing nuclear weapons both in the atmosphere and underground, the effects of past tests persist in various forms, including environmental contamination, radiation exposure, and socio-economic repercussions, and continue to impact populations at and near closed nuclear test sites (Blume 2022). The concerns are greater when the test sites are abandoned without adequate environmental remediation. This was the case with the Semipalatinsk test site in Kazakhstan, which was left unattended after the fall of the Soviet Union in 1991, before a secret multimillion-dollar effort was made by the United States, Russia, and Kazakhstan to secure the site (Hecker 2013). The abandonment resulted in heavy contamination of soil, water, and vegetation, posing significant risks to the local populations (Kassenova 2009).

In 1990, the US Congress acknowledged the health risks from nuclear testing by establishing the Radiation Exposure Compensation Act (RECA), which provides compensation to those affected by radioactive fallout from nuclear tests and uranium mining. Still, there are limitations and gaps in coverage that leave many impacted individuals, including the “downwinders” from the Trinity test site, without compensation for their radiation exposure (Blume 2023). The Act is set to expire in July 2024, potentially depriving many individuals of essential assistance. Over the past 30 years, the RECA fund paid out approximately $2.5 billion to impacted populations (Congressional Research Service 2022). For comparison, the US federal government spends $60 billion per year to maintain its nuclear forces (Congressional Budget Office 2021).

As the effects of nuclear testing still linger, today’s generations are witnessing an increasing concern at the possibility of a new arms race and potential resumption of nuclear testing (Drozdenko 2023; Diaz-Maurin 2023). The concern is heightened by activities in China and North Korea and with Russia rescinding its ratification of the CTBT. Even though the United States maintains a moratorium on non-subcritical nuclear tests, its decision not to ratify the test ban treaty shows a lack of international leadership and commitment. As global tensions and uncertainties arise, it is critical to ensure global security and minimize the risks to humans and the environment by enforcing comprehensive treaties like the CTBT. Transparency at nuclear test sites should be promoted, including those conducting very-low-yield subcritical tests, and the enduring impacts of past nuclear tests should be assessed and addressed.

Endnotes

[1] The number of US nuclear tests reported in different publications ranges from 1,051 to 1,151. The discrepancy is attributed to the different ways of counting nuclear tests (e.g., the frequency, timing, and the number of nuclear devices). Here, underground nuclear tests refer to one or more nuclear devices in the same tunnel or hole. If we count simultaneous tests or explosions close in time, the number of US tests would be higher than reported here.

[2] The scaled depth of burial (empirical measure of blast energy confinement) can be calculated using the equation (McEwan 1988):
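A commonly used form of this measure divides the depth of burial by the cube root of the yield. The following is a minimal sketch under that assumption; it is not necessarily the exact expression given by McEwan (1988), the function name is illustrative, and the example inputs are the rounded Cannikin figures from the main text.

```python
# Scaled depth of burial (SDOB), assuming the common cube-root form:
#   SDOB = depth / yield ** (1/3), in m/kt^(1/3).
# This form is an assumption, not a quotation of the cited source.

def scaled_depth_of_burial(depth_m, yield_kt):
    """Depth in meters divided by the cube root of the yield in kilotons."""
    if depth_m < 0 or yield_kt <= 0:
        raise ValueError("depth_m must be >= 0 and yield_kt must be > 0")
    return depth_m / yield_kt ** (1.0 / 3.0)


# Example with rounded figures from the main text:
# about 2,000 meters deep, 5 megatons (5,000 kilotons).
cannikin_sdob = scaled_depth_of_burial(2_000.0, 5_000.0)
print(f"about {cannikin_sdob:.0f} m/kt^(1/3)")
```

Under this definition, a larger value indicates a deeper burial relative to the size of the explosion.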

[3] Assuming the cavity reaches a maximum volume when the gas pressure reaches the lithostatic (overburden) pressure at the explosion depth, the radius of cavity can be estimated using the following equation:

where yield is expressed in kt, and γ is the effective adiabat exponent of the explosion products, which depends on the composition of the emplacement medium (Allen and Duff 1969; Boardman, Rabb, and McArthur 1964; Adushkin and Spivak 2015).

[4] Continuous intake of 100 picocuries of iodine 131 per day for one year remains within the radiation protection guide’s limit of 0.5 rems per year. The highest estimated thyroid exposure from inhalation and milk ingestion was 130 millirems, for a two-year-old child in Beatty, an unincorporated community bordering the Nevada Test Site.
