By Leah Burrows

In his 5th-century B.C. treatise on war, Sun Tzu famously proclaimed, “If you know your enemy and you know yourself, you will be victorious in numerous battles.” Of course, Sun Tzu was fighting with swords and arrows, not keystrokes and algorithms, but the principle is just as applicable to cyber warfare as it was to ancient Chinese battlefields.

Among the most vulnerable targets in cyber warfare are deep neural networks. These deep-learning systems are vital for computer vision (including in autonomous vehicles), speech recognition, robotics and more.

“Since people started to get really enthusiastic about the possibilities of deep learning, there has been a race to the bottom to find ways to fool the machine learning algorithms,” said Yaron Singer, Assistant Professor of Computer Science at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS).

The most effective way to fool a machine learning algorithm is to introduce noise — additional data that disrupts or skews information the algorithm has already learned. Imagine an algorithm is learning the difference between a stop sign and a mailbox. Once the algorithm has “seen” enough images and collected enough data points, it can develop a classifying line that separates stop signs from mailboxes. This classifier is unique for every dataset.

To trick the machine, an adversary needs only basic information about the classifier in order to tailor noise specifically to confuse it. This so-called adversarial noise could wreak havoc on autonomous vehicles or facial recognition software and, right now, there is no effective protection against it. The only recourse is to recalibrate the classifier. Of course, the adversary then develops new noise to attack the new classifier, and the battle continues.
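To make the idea concrete, here is a minimal sketch of tailored noise against a toy linear classifier. It is an illustration only, not the attack or the model studied by the researchers: the weights, bias, feature values and noise budget `epsilon` are all invented, and the noise is built with a simple sign-of-the-weights step (similar in spirit to the fast gradient sign method).

```python
# Toy 2-D linear classifier: a positive score means "stop sign",
# anything else means "mailbox". WEIGHTS and BIAS are made-up values.
WEIGHTS = [2.0, -1.0]
BIAS = -0.5


def score(x):
    """Signed distance-like score of a feature vector from the classifying line."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def classify(x):
    return "stop sign" if score(x) > 0 else "mailbox"


def sign(value):
    return (value > 0) - (value < 0)


def adversarial_noise(x, epsilon):
    """Noise that moves each feature by at most epsilon, in the direction
    that pushes the score toward the opposite class.

    For a linear classifier the gradient of the score with respect to the
    input is just WEIGHTS, so knowing the weights is enough to build it.
    """
    direction = -1.0 if score(x) > 0 else 1.0
    return [direction * epsilon * sign(w) for w in WEIGHTS]


def perturb(x, epsilon):
    noise = adversarial_noise(x, epsilon)
    return [xi + ni for xi, ni in zip(x, noise)]


clean = [1.0, 1.0]            # hypothetical features of a stop-sign image
noisy = perturb(clean, 0.2)   # small, targeted change to each feature

print(classify(clean))  # stop sign
print(classify(noisy))  # mailbox
```

Each feature moves by only 0.2, yet the label flips, because the noise is aimed straight across the classifying line rather than in a random direction.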

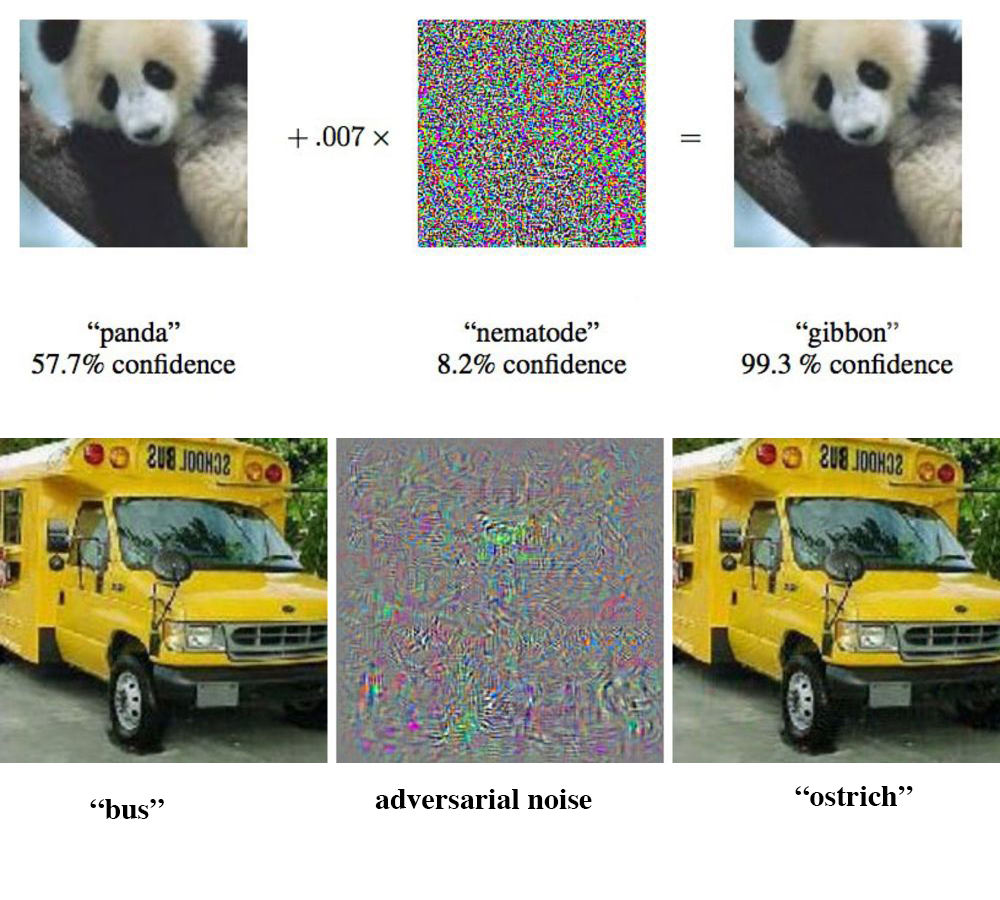

So-called adversarial noise, mostly undetectable to human eyes, causes machine learning algorithms to confuse simple objects. Above, adversarial noise causes an algorithm to confuse a panda with a gibbon. Below, adversarial noise causes an algorithm to confuse a school bus with an ostrich.

But what if, as Sun Tzu suggests, your classifier could truly know all the types of noise that could be used against it and be prepared for each attack?

That’s what Singer and his team set out to do.

“We developed noise-robust classifiers that are prepared against the worst case of noise,” Singer said. “Our algorithms have a guaranteed performance across a range of different example cases of noise and perform well in practice.”
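The article does not describe the team's algorithm, so the following is only a sketch of what "prepared against the worst case of noise" can mean as a selection rule. The classifier names, noise types and accuracy figures are hypothetical; the point is the contrast between picking the model with the best average accuracy and picking the one whose worst accuracy is highest.

```python
# Hypothetical accuracy of three candidate classifiers under three kinds
# of noise. None of these numbers come from the research.
ACCURACY = {
    "classifier_a": {"blur": 0.97, "pixel_shift": 0.50, "speckle": 0.96},
    "classifier_b": {"blur": 0.80, "pixel_shift": 0.78, "speckle": 0.82},
    "classifier_c": {"blur": 0.60, "pixel_shift": 0.92, "speckle": 0.70},
}


def worst_case(scores):
    """Accuracy under the most damaging noise type."""
    return min(scores.values())


def average_case(scores):
    """Accuracy averaged over all noise types."""
    return sum(scores.values()) / len(scores)


# Average-case choice: best on typical noise, but may collapse on one type.
average_choice = max(ACCURACY, key=lambda name: average_case(ACCURACY[name]))

# Worst-case (max-min) choice: best guaranteed accuracy across all noise types.
robust_choice = max(ACCURACY, key=lambda name: worst_case(ACCURACY[name]))

print(average_choice)  # classifier_a
print(robust_choice)   # classifier_b
```

Here `classifier_a` wins on average but drops to 50 percent under one noise type, while `classifier_b` never falls below 78 percent, which is the kind of guarantee a worst-case criterion favors.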

Singer, with co-authors Robert Chen, Brendan Lucier, and Vasilis Syrgkanis, recently presented the research at the prestigious Neural Information Processing Systems conference in California.

“Our work provides new theoretical insights into how to optimize functions under uncertainty,” said Robert Chen, co-author of the research and former SEAS undergraduate. “Those functions could be measuring any number of things, however, and so the framework accommodates a wide range of problems and has a wide range of potential applications, from autonomous driving to computational biology and theoretical computer science.”

Next, the researchers hope to use this framework to tackle other problems in machine learning, such as understanding the spread of epidemics in social media.
