Darren Linvill, Patrick Warren

Witzke’s post was grounded in a lie, the result of very purposeful narrative laundering (that is, the process of hiding the source of false information). This particular laundering campaign is affiliated with the Russian government and aimed largely at undermining Western support for Ukraine. While many who read Witzke’s message rightly questioned it, it was nonetheless reposted more than 12,000 times and viewed (by X’s metric) nearly 10 million times. The narrative was shared in thousands of other posts across platforms, before and after Witzke, including by those who unknowingly linked directly to a covert Russian outlet.

Lies don’t have to be good to be effective. For those who wanted to believe this story, it was easy to do so. Its considerable success didn’t result from typically expected tactics, however. It wasn’t spread using an army of social media bots or an AI-generated video of Zelenskyy. This campaign relies on individuals like Witzke, unknowing dupes with influence and credibility in their online communities.

Since 2016, conversations about disinformation have focused on the role of technology—from chatbots to deepfakes. Persuasion, however, is a fundamentally human-centered endeavor, and humans haven’t changed. The fundamentals of covert influence haven’t either.

The Soviet Union’s most infamous disinformation campaign was Operation Denver, sometimes referred to as Operation INFEKTION, an active-measures campaign created to persuade the world that the United States was responsible for the creation of the AIDS virus. The effort began in 1983 with the planting of a fictitious letter entitled “AIDS may Invade India.” This letter was sent to the editor of The Patriot, an Indian newspaper that was created some years earlier by the KGB for the purposes of spreading pro-Soviet propaganda. The letter purported to be written by an American scientist, who was in fact fictional, and claimed that the AIDS virus originated in the United States, created at the chemical and biological warfare research facility located in Fort Detrick, Maryland. This letter was then cited in later KGB efforts to spread the story. Forty years on, the narrative spun by Operation Denver is still widely believed in some communities, particularly by those inclined to view the U.S. government with suspicion.

It is the decades-old influence tradecraft used in Operation Denver that today’s Russian operations continue to employ, albeit fueled by new technologies applied in creative ways. Narrative laundering, however, is a difficult and risky process. The hardest part of that process is integration, a term borrowed from descriptions of money laundering. This is the final stage of the cleaning process, the point at which information becomes endorsed by more credible and genuine sources. In a pre-digital communication age, Operation Denver took years to move from the initial placement of the information to its integration within Western communities. Today, Russia has shortened this process from years to days or even hours. One way it has accomplished this feat is by constructing its own credible sources from whole cloth, as seen in an online publication called DC Weekly.

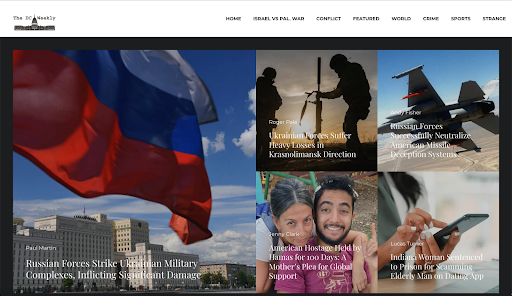

DC Weekly is part of a wider narrative-laundering campaign and is designed specifically to help integrate Russian lies into mainstream Western conversations. It describes itself as the “definitive hub for the freshest updates and in-depth insights into Washington, D.C.’s political scene. Born in 2002 as a weekly political gazette, we’ve since morphed into an agile digital news portal, delivering instant news flashes to our audience.” While none of this is true, the website certainly looks the part. It appears professionally designed and is stocked with a breadth of original content on current news.

A screenshot of the DC Weekly homepage. (Courtesy of Katherine Pompilio, Lawfare. Jan. 12, 2024.)

Our research has shown, however, that DC Weekly is very much relying on readers judging a book by its cover. DC Weekly’s articles each include detailed author descriptions, but these journalists are as fabricated as the website’s own backstory. The bios are fiction, and the profile images are stolen from across the web. Most interestingly, the site’s news content is largely created using generative artificial intelligence (AI), stolen first from Fox News or Russian state media and then given its own spin so that it appears unique to DC Weekly. The DC Weekly article “Plagiarism Concerns Surrounding Artificial Intelligence Platforms Raise Calls for Regulation” by the fictional journalist Roger Pale, for instance, originated from the Fox News article “Promising New Tech Has ‘Staggeringly Difficult’ Copyright Problem: Expert” by the very real Michael Lee. In this way, DC Weekly has a constant flow of new content, necessary for any reliable news source, and a casual reader would not likely be suspicious.

DC Weekly exists to serve as a step in a process to launder Russian lies and distribute them to unsuspecting readers. It has done so at least a dozen times since August, taking stories that operatives move from initial placement on social media, next to foreign news outlets, then to DC Weekly, and finally to genuine influencers and the end reader, cleaning these narratives of Russian fingerprints with each link in the chain. Some of these recent narratives, such as the use of Western aid money to buy luxury yachts, have been accepted as unquestioned truth and discussed publicly by at least one U.S. senator working to end military support for Ukraine.

Screenshots of false Zelenskyy-yacht narrative spread on social media.

Concerningly, as a piece of the Russian disinformation machine, DC Weekly is a relatively replaceable part. Only days after we first partnered with the BBC to report on this ongoing Russian influence operation, DC Weekly’s role was replaced by a new outlet, clearstory.news. Already this page has helped the campaign to spread conspiracies regarding President Zelenskyy’s supposed purchase of a villa in Germany that belonged to Joseph Goebbels, Hitler’s propaganda minister, and the growth of Ukraine’s illegal narcotics industry.

In their report to the U.S. Senate exploring Russia’s disinformation tactics in 2016, Renee DiResta and her colleagues at New Knowledge questioned what the future of disinformation may be, writing, “Now that automation techniques (e.g., bots) are better policed, the near future will be a return to the past: we’ll see increased human exploitation tradecraft and narrative laundering.” Advantages of some technologies, in other words, do not always remain advantages. From the English longbow to the German U-boat, the relative benefit of new offensive tools wanes after introduction as awareness and countermeasures develop.

DiResta’s warning was prescient, but it was only half right. Combined with automation techniques, including generative AI, old Russian tradecraft has been supercharged. The prominence of DC Weekly warns observers that they must be broad-minded when it comes to understanding and anticipating the dangers posed by new digital technologies. The drum has been beaten for years regarding the dangers of social media bots and trolls or AI deepfakes breaking down the walls between what is genuine and what is fiction. These threats are real, but, remember, the DC Weekly campaign used neither bots nor deepfakes. It used proven processes that have been around for generations but that have been made faster, cheaper, and more effective by digital technologies. Sometimes the old ways are the best ways.

As social media platforms slash the teams responsible for moderation and Congress investigates researchers working to understand and combat the spread of falsehoods, the ability to counter online threats has diminished. This is particularly alarming given the ways in which threats are evolving. Disinformation campaigns are produced by creative professionals, and evolutions in digital technologies are going to continually provide new tools of influence. Civil society can’t forget, however, that the real danger lies in how technology is combined with proven methods of human exploitation. In 2016, Americans were influenced by a few fake online individuals; in less than a decade, this threat has transformed into entirely fabricated networks, systems, and organizations. DC Weekly is a warning of what’s to come.
