Erol Yayboke and Christopher Reid

August 31, 2021, marked the end of the United States’ two-decade military presence in Afghanistan. It also marked the end of U.S. military and intelligence eyes and ears on the ground in a place known to be a safe haven for violent extremist groups. In Afghanistan and other areas where the United States lacks a persistent, physical presence, the Biden administration announced a pivot to “over-the-horizon” counterterrorism operations (OTH-CT) that rely heavily on stand-off assets, such as overhead satellite technology and airpower, in the absence of eyes and ears. While the use of drones—or “remotely piloted aircraft” (RPAs)—to target potential terrorist threats seems to be a cost-effective approach from a military perspective, their use has come under increasing pressure from Capitol Hill, human rights and humanitarian organizations, and others for their effects on civilian populations. Military action almost always carries risk of collateral damage, but the disproportionate civilian impact associated with RPAs is not only troubling from rights and humanitarian perspectives, but it also calls into question the strategic, longer-term rationale of using them for counterterrorism purposes in the first place.

Congressional leaders sent a letter to the president on January 20, 2022, about the ongoing OTH-CT strategy review. In it, they point out that “while the intent of U.S. counterterrorism policy may be to target terrorism suspects who threaten U.S. national security, in too many instances, U.S. drone strikes have instead led to unintended and deadly consequences—killing civilians and increasing anger towards the United States.” They, therefore, call on the administration to “review and overhaul U.S. counterterrorism policy to center human rights and the protection of civilians, align with U.S. and international law, prioritize non-lethal tools to address conflict and fragility, and only use force when it is lawful and as a last resort.”

Reconciling the risks and implications of RPA strikes is necessary for genuinely implementing President Biden’s calls for a “targeted, precise strategy that goes after terror.” In doing so, the administration also needs to address concerns over civilian casualties alongside meaningful and justifiable military utilization of RPAs. Using RPAs against those who pose an imminent threat to the United States or its allies and partners is sometimes necessary and appropriate, especially in scenarios that are high-risk for crewed aircraft or ground forces. So why wouldn’t the United States use RPAs more broadly at low risk to blood and treasure? Why put soldiers in danger when we can extensively monitor threats and eventually address them from a remotely piloted aircraft high above? The answers are at once simple (e.g., impact on civilians) and complicated (e.g., limited military alternatives), exposing a gulf in understanding and approach to RPA utilization between the advocacy community (and some congressional leaders) and military and intelligence planners.

This CSIS brief explores the challenge ahead for the Biden administration. It starts with a contextualization of the OTH-CT strategy review, followed by assessments of the short- and longer-term risks associated with RPA utilization and how to think about risk itself. Offering evidence and framing throughout, the brief ends by detailing two sets of recommendations. The first set looks at how to approach the decision of whether to use RPAs:

Develop a framework for RPA utilization in OTH-CT missions that considers longer-term implications and elevates decisionmaking;

Strike networks, not signatures; and

Understand that RPAs will not eliminate over-the-horizon risks, though a fuller suite of technological tools might.

The second set of recommendations deals with what comes after RPAs are determined to be the right tool:

Increase transparency and accountability;

Improve intelligence gathering and sharing; and

Above all, protect civilians.

The OTH-CT Review in Context

The administration’s OTH-CT strategy-revision process is ongoing. While new in the context of an Afghanistan without on-the-ground U.S. and allied forces, it in fact builds on strategies the United States has employed in various other conflicts. Containing and using force against violent extremists in areas where the United States lacks an on-the-ground presence has been a persistent challenge U.S. policymakers have had to confront since the immediate aftermath of 9/11. OTH-CT is premised on the belief that advanced technology, especially increasingly precise RPAs, can effectively provide signals intelligence (SIGINT) and even replace on-the-ground operations to deter terrorism based in Afghanistan, Yemen, North Africa, and other areas. The pervasive public and policymaker perception is that RPA utilization comes with little or no immediate risk to U.S. forces and without the longer-term political risk of a persistently forward-staged ground presence.

Ultimately, this perception belies the complexity of RPA usage and fails to address key analytical questions regarding decisionmaking processes, strategic risk, and longer-term implications of relying heavily on remotely piloted aircraft. Their answers are significant enough to call into question the efficacy of the strategy itself and raise further questions that should be part of the review process. For example, do all terrorism-related threat environments necessitate perpetual presence and kinetic air policing? What constitutes sufficient intelligence to support a strike, especially given the significant potential for civilian casualties? Would an air strike create new problems while it attempts to deal with old ones? Is there endless time and risk appetite to achieve a desired end state? Are there alternative capabilities or missions to achieve that desired end state?

A goal of the OTH-CT strategy for Afghanistan is to consider how RPAs can address intelligence and strike capacity gaps in the absence of ground forces. However, the consideration alone exposes a paradox: A smaller (or nonexistent) footprint limits the type, quantity, and quality of intelligence collected, intelligence that is critical for the effectiveness of RPA tools at the center of the OTH-CT strategy. More broadly, the answers to the above questions expose an inconvenient truth about many U.S. national security efforts: The real and perceived need to respond to short-term threats may place the United States at odds with its own longer-term strategic goals, ultimately making them significantly harder to achieve.

Beyond Recon: Why Drones Have Become the CT Weapon of Choice

For the better part of the past two decades, the crux of U.S. national security efforts has been to fight terrorism wherever it exists. The events of 9/11 spawned an entire industry of counterterrorism experts; new government policies, programs, and doctrines; and increasingly sophisticated capabilities to target threats. Reflecting broad sentiment in national security communities, a 2017 RAND Corporation report claimed that “contemporary CT campaigns can scarcely be contemplated under any conditions except air supremacy.”

From a military perspective, RPAs are a valuable tool in the “air supremacy” toolbox, specifically for their air policing abilities. They far exceed a crewed aircraft’s loiter time over targets of interest and have organic intelligence capabilities on scene to pair with dynamic kinetic employment. But RPAs have shifted from “an instrument of . . . surveillance to a tool for conducting offensive strikes against enemy targets” and are credited with decimating al-Qaeda leadership in Afghanistan. Armed RPAs have often been the tool of choice for U.S. counterterrorism efforts, particularly in Afghanistan, Libya, Pakistan, Somalia, and Yemen. They have been hailed as a “highly effective instrument of counterterrorism” because they provide “pervasive intelligence and aggressive strike capabilities.” Additional research from their use in Pakistan suggests that “drone strikes are associated with decreases in the incidence and lethality of terrorist attacks” in the short term, though that particular study did not evaluate longer-term effects—including those on post-strike recruitment efforts.

The loiter time advantages of RPAs provide an unmatched, persistent presence and an easy military crutch when political commitment for larger military effort is absent. They are also effective as air cover for ground forces and additional resources for overstretched air forces. As Ukraine fights off the latest Russian invasion, Turkish-made Bayraktar drones are providing a counterweight to what many experts believed would be Russian air superiority. Their use against a hostile, invading, and traditional army demonstrates that RPAs can be appropriate and effective, particularly against fielded forces.

Short-term Consequences: Collateral Civilian Casualties

For the above reasons, RPAs have, over time, become both the surveillance tool and strike platform of choice. However, the resulting collateral statistics cast a dark shadow on RPA use despite their kinetic successes, especially given the degree to which policymakers have come to rely on RPAs as a tool of first resort. Notably, a 2013 study by Dr. Larry Lewis from the Center for Naval Analyses (CNA) found that drones were ten times more deadly to civilians than crewed aircraft during one year in Afghanistan.

According to the U.S. military, between August 2014 and the end of June 2021, 1,417 civilians had been killed in air strikes in the campaign against the Islamic State, and at least 188 have been killed in Afghanistan since 2018. However, a New York Times investigation revealed that “civilian casualties had been summarily discounted,” leading to hundreds of undocumented deaths. For example, out of 103 air strikes carried out by U.S.-led coalition forces in Qayara, Iraq, one in five resulted in civilian death, a rate 31 times higher than what was officially reported. Moreover, for about half of the strikes that killed civilians, there were no discernible Islamic State targets nearby, indicating a shortcoming in the reliability of intelligence.

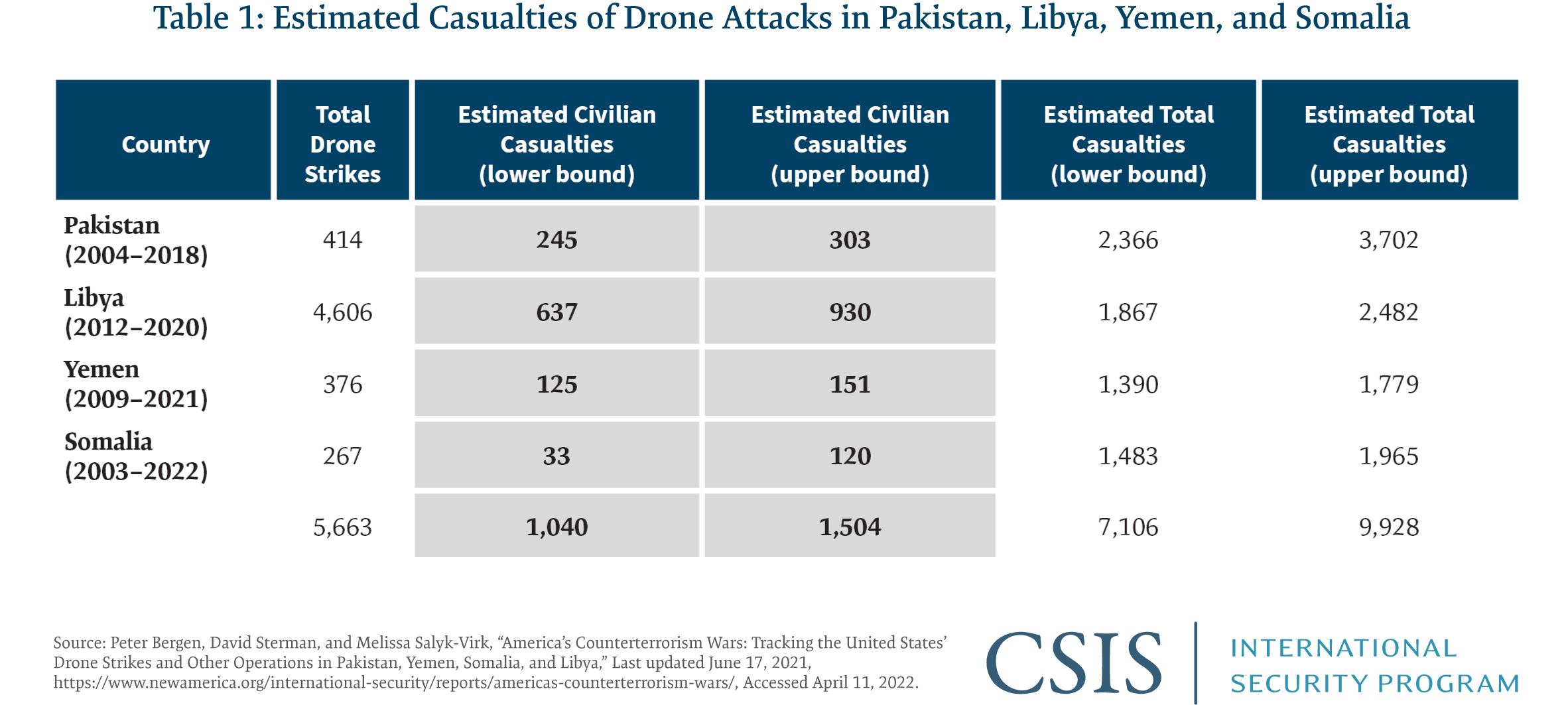

An analysis of United Nations Assistance Mission in Afghanistan (UNAMA) data by Action on Armed Violence found that between 2016 and 2020, 40 percent of all reported casualties were children and that the majority (57 percent) of these child casualties were caused by U.S.-led international forces. New America tracks drone strikes in Pakistan, Libya, Yemen, and Somalia (see Table 1), which—when combined with the U.S. military’s own data from Iraq and taking into account the New York Times investigation—means that at least 3,000 civilians may have been killed by RPAs in those six countries alone since 9/11.

Some would argue that these collateral civilian casualties are a justifiable price to pay for the achievement of broader counterterrorism goals. However, as this brief shows, there are significant longer-term consequences to RPA usage that call into question the credibility of that argument, consequences that should be taken into account as part of any revisions to the OTH-CT strategy.

Signature Strikes: Precise and (Usually) Inaccurate

The strategic counterproductivity of RPA utilization manifests most acutely in so-called “signature strikes.” RPAs are increasingly precise but only as accurate as the information available to them, some of it collected by the RPAs themselves thanks to persistent loitering capabilities and some by ground-based human or signals sources. Since both types of intelligence are so often imperfect, those responsible for executing strikes tend to rely on patterns and behaviors (i.e., “signatures”) known to be associated with terrorism and violent extremism. Signature strikes are the result of this pattern and behavioral analysis rather than of specific intelligence pinpointing the location of terrorists. They have been conducted most often in places such as Somalia, Yemen, and Pakistan, where the United States is neither formally nor publicly engaged in a war but clearly has counterterrorism interests. Unlike targeted killings, signature strikes do not require presidential approval, and often decisionmakers do not know the identities, or even the numbers, of the alleged terrorists or violent extremists they are targeting. The ensuing uncertainty and susceptibility to confirmation bias have undoubtedly put civilian lives at greater risk.

It is worth noting that signature strikes are only made possible by the development of more advanced RPA technology. Their problematic nature is thus a policy and strategy problem about how to utilize new technology, not necessarily a problem with the technology itself. Put another way, the technology made it possible for bad policy decisions to be made. This offers a valuable lesson for strategic and operational planners as other new technologies (e.g., artificial intelligence and machine learning) go from the design table to the battlefield.

Longer-term Consequences: Undermining OTH-CT Mission Effectiveness

There is nothing inherently wrong with remotely piloted aircraft. Nor are RPAs automatically indiscriminate—unlike, for example, chemical, biological, and nuclear weapons. Nonetheless, as the previous section documents, civilian casualties have been a short-term consequence of recent RPA utilization. But their current uses also have longer-term consequences.

Longer-term strategic goals of national security policymakers should ideally be to prevent violence before it becomes a threat to perpetually counter in the short term. This argument has been made by one of the authors of this piece, a recent United States Institute of Peace task force, the proponents of the Global Fragility Act, and many others. Continually and primarily relying on RPAs to address terrorism and violent extremism has the potential to undermine longer-term prevention efforts. If prevention is—and should be—the longer-term goal, the United States should carefully reflect upon and even question a strategic approach that relies on RPAs for counterterrorism operations, balancing short-term military and intelligence necessity against the results of strikes on the ground and potential strategic setbacks.

Beyond failing to prevent violence, an overreliance on RPAs for OTH-CT can also have longer-term strategic consequences, namely on legitimacy and the potential to increase radicalization and violent extremism.

PSYCHOLOGICAL HARM AND EROSION OF LEGITIMACY

In some cases, the deployment of RPAs for kinetic responses to counterterrorism can have a greater psychological toll on civilians than the terrorist attacks they aim to prevent. While they can potentially deliver surgical precision, there is a pervasive risk of destabilization and fomentation of generational antipathy toward the United States.

These longer-term consequences are perhaps most apparent in the “neighborhood effects” of RPA strikes and persistent air policing. These effects extend beyond bodily injury or death into psychological, economic, and social dimensions. The “remote and covert nature of drones” makes them effective at both killing targets and “promulgating a sense of terror among the broader populace within which targets are embedded.” From July to September 2014, the Swiss non-governmental organization Alkarama surveyed civilians living in Yemeni villages where U.S. drones were operational. Of the 100 civilians surveyed, 72 displayed many symptoms of post-traumatic stress disorder (PTSD), and 27 believed that they were experiencing PTSD. Those directly affected by drone strikes (e.g., a close family member was killed) and other civilians living in the area experienced fear, insomnia, and emotional distress—indicating drone-related trauma across all demographic groups.

This sense of terror is associated not with violent extremists, who may or may not be in their midst, but with the United States. As the recent New York Times investigation reveals, institutional underreporting and impunity have, over time, reinforced the perception that civilian harm is an “inevitable collateral toll” that the U.S. military is willing to accept without further investigation or disciplinary action. This perception has affected U.S. credibility among civilian populations and current and potential allies, even though the United States needs this credibility to meet strategic challenges in a growing number of theaters.

Legitimacy plays a foundational role in the ability of the U.S. military to engage in conventional, asymmetric, and hybrid warfare. Over the past 20 years, countless think tanks, policy experts, and professional military institutions have dusted off centuries-old theorems to develop counterinsurgency and other strategies as part of the “Global War on Terror.” Along the way, they relearned and institutionalized values such as having clarity of purpose, winning hearts and minds, and controlling the narrative. Though efforts to have clarity, win hearts and minds, and control the narrative have not always been executed well, these values remain critical. Without them, it is hard to imagine successfully convincing nations and populations that a global security architecture led by the United States and like-minded friends and allies is superior to one led by China and Russia. The absence of such values-based credibility could even necessitate the utilization of RPAs as even more of a crutch. For example, RPAs’ extremely long flight time capabilities give a distinct advantage in basing options, as the United States does not necessarily have to form and maintain critical partnerships with those nations closest to regional threats in order to launch and recover crewed aircraft. This effectively bypasses the sometimes difficult, though ultimately rewarding, diplomatic process to receive regional “buy-in” and actually goes against other stated efforts. As U.S. Secretary of Defense Lloyd Austin stated in a recent congressional hearing, “shorten[ing] the legs” for over-the-horizon capabilities—in other words, becoming more agile and responsive to threats even without physical presence in places like Afghanistan—remains a key priority for both operational and strategic reasons.

The rise of the Islamic State in Iraq, an ignominious U.S. exit from Afghanistan, and other demonstrations of the limitations of U.S. hard power to directly provide a modicum of stability, much less sustainable security outcomes, have done enough to undermine U.S. legitimacy. OTH-CT planners should ensure that RPA utilization does not make things harder in the longer term, especially in places like Afghanistan where a case for using RPAs to protect ground forces can no longer be made.

Unilateral dependency on RPAs for kinetic strikes ignores the psychological vulnerabilities in already fragile populations and the importance of perceptions of legitimacy. The complexities in Afghanistan, for example, of various tribes and groups seeking to retain identity and legitimacy amid Taliban control present an even more challenging landscape than when U.S. and NATO forces were on the ground to reinforce messaging. It may be convenient to think that OTH-CT will eliminate risk at low cost—but that convenience belies the fact that the opposite is more likely true.

EXTREMIST RADICALIZATION AND RECRUITMENT

Growing evidence, including by the Central Intelligence Agency (CIA), suggests a troubling trend: RPA strikes seem to be encouraging terrorism and increasing support for local violent extremist organizations. This seems to especially be the case in the absence of supporting narratives and perceived legitimacy, wherein terrorist and extremist groups can exploit civilian deaths to further their propaganda and recruitment efforts. For example, research from Pakistan published in 2019 shows that drone strikes “are suggested to encourage terrorism” and “to increase anti-US sentiment and radicalization.” Similarly, one 2020 study found that terrorists were more likely to increase their attacks in the months after a deadly drone strike. Put more bluntly, RPA strikes “radicalize civilians faster than they kill terrorists.”

Efforts to counter these negative effects have had mixed results. In the Middle East and Central Asia, for example, counterterrorism air strikes were often accompanied by messaging in the form of air-dropped leaflets and other information operation efforts, highlighting both the difficulty and criticality of avoiding civilian casualties. Messaging was intended to prevent associations with and recruitment from extremists and was coupled with on-the-ground efforts—e.g., supporting, training, and operating in concert with the Afghan National Army—to invest in legitimate institutions. Now, absent U.S. and NATO troops in Afghanistan and in other areas lacking a multi-pronged approach, populations are even more vulnerable to terrorist recruitment tactics after such strikes.

Unfortunately, a general lack of transparency and the over-classification of data on RPA use limit the scope of possible analysis on the longer-term strategic effects of RPA strikes. More research and comprehensive datasets are needed. Nonetheless, initial research suggests that short-term counterterrorism efforts increase terrorism threats in the longer term, a concern that OTH-CT strategists should carefully weigh.

How to Think about Risk

While it is beneficial to detail short- and longer-term consequences of RPA utilization in the context of the OTH-CT strategy revision process, it would be foolish to do so without acknowledging that each decision—whether at the policy or operational level—comes down to evidence-based risk analysis.

Historically, there are numerous risks inherent with airpower of any form. But RPAs come with their own specific risks:

RPAs are often employed in more difficult strike missions where no crewed aircraft are available, there is little willingness to expose soldiers to danger, or optimal engagement windows are unknown, carrying the risk of missed targets, incomplete “pattern of life analysis,” compressed timelines during dynamic strikes, secondary explosions, and an overreliance on air power.

Decisionmakers can over-rely on aerial intelligence that is outdated, flawed, or poor (e.g., blurry or pixelated imagery that can cause them to misidentify people as objects) in the absence of human and other correlating intelligence, leading to confirmation bias and an overreliance on “signature strikes” (see text box above).

When not supporting a ground force, RPAs can artificially decrease engagement thresholds, leading to misanalysis in the absence of contextual and cultural understanding.

The risks associated with RPAs have meant that while these aircraft can eliminate terrorists, violent extremists, militants, and other bad actors, civilian casualties have also been significant and longer-term consequences remain a challenge.

IN PRACTICE AND POLICY

The risks, limitations, and counterproductive potential of RPA utilization in counterterrorism operations came into stark relief during the chaotic withdrawal of U.S. armed forces from Afghanistan in August 2021. Tensions were high after an Islamic State Khorasan Province attack tragically killed 13 U.S. service members and as many as 170 civilians at Hamid Karzai International Airport. After suspecting the driver of a white Toyota Corolla was carrying explosives based on previous intelligence and the target’s “signature,” military officials “prejudiced their views of every stop he made that day.” The result was a deadly RPA signature strike, which the Pentagon later acknowledged had mistakenly killed 10 civilians, including seven children and the driver, Zemari Ahmadi, who turned out to be an Afghan employee of a U.S.-based humanitarian organization.

Those authorizing specific strikes need to consider the potential for civilian casualties in each instance. Every time these risks are deemed acceptable in pursuit of a CT objective, it indicates that decisionmakers have met guidelines outlined in defense policy, the U.S. military’s joint doctrine, and international law covering concepts such as “military necessity,” “proportionality,” “humanity,” and “distinction.” From a military perspective, each of these foundational concepts relies on judging and assessing each individual case. Strategic assessment on RPA utilization more broadly requires a longer, deeper review by policymakers. Whether officially a part of the OTH-CT strategy review process or not, such a process should include a review of the impact RPA intelligence gathering and strike operations have on a population, how their use influences perceptions by the international community, and whether and how their use affects terrorist recruitment.

Collective Self-Defense

Discussions of an OTH-CT strategy imply that the U.S. military would carry out RPA strikes, special operations, and support missions primarily for self-defense purposes. The military has claimed “collective self-defense” of partners on the ground as a reason to strike targets in places like Somalia where there are no significant levels of U.S. troop deployment (though this posture may be changing). While the self-defense framing suggests that military officials will pursue postures of restraint, the continued (though admittedly scaled-back) use of RPAs to strike “collective self-defense” targets implies a broad interpretation of restraint.

Ultimately, the data suggests a disconnect between heavy reliance on the technological promise envisioned by military and airpower planners and the reality that RPA use is complicated at best. Despite the tactical realities facing these planners, the international community broadly perceives the thresholds for the use of force and determinations of military necessity as lower when utilizing RPAs. Defense strategists and operators should be open to questioning such assumptions, and strong consideration should be paid to balancing risks and uncertainty against the phase of overall conflict, current political goals, and the resulting military necessity. At the end of the day, however, if the risk to operational personnel is low or zero and military necessity is low, then the certainty of achieving the objective without collateral damage should be high.

RISK-BASED DECISIONMAKING

The Biden administration and military officials appear to have heeded concerns about minimizing civilian harm, as demonstrated by elevating strike approval requirements in early 2021. This change in tone—if not policy—was put to the test in February 2022 when intelligence arrived on the location of Islamic State leader Abu Ibrahim al-Hashimi al-Qurayshi in Syria. Notably, this intelligence came from on-the-ground informants and was corroborated by aerial surveillance cameras and nearby audio sensors over a period of several weeks. After assessing that an RPA strike would come with a high risk of civilian casualties, U.S. Special Operations Forces conducted an overnight raid of the residential compound where al-Qurayshi was staying. Although at least 13 people were killed, including by al-Qurayshi’s self-detonation of a suicide vest, U.S. forces evacuated 10 civilians from the building, avoiding any U.S. casualties in the process.

The decision of whether to deploy kinetic responses—including RPAs—should thus be viewed as fundamentally related to risk-based decisionmaking based on available resources. The tragic Kabul strike on civilians and the al-Qurayshi operation had vastly contrasting outcomes: a signature strike that killed 10 civilians versus an operation against a known terrorist that utilized evidence-based decisionmaking and multiple sources of intelligence. It is worth noting that the context of these two incidents was also different. The first, in Afghanistan, was one of perceived self-defense, which meant that decisionmakers had less time to corroborate the threat via other intelligence before taking action, especially with resources concentrated on evacuation efforts. The second, in Syria, was an offensive operation not aimed at an imminent threat, and decisionmakers, therefore, had more time to characterize the threat, including through ground-force validation. The contrast highlights the complex calculus of deciding whether to risk the lives of U.S. troops in a ground operation or those of civilians in an air strike to neutralize terrorist threats.

In shifting to an OTH-CT strategy in Afghanistan heavily reliant on RPAs, the United States has tied itself in a paradox: It lacks the on-the-ground eyes and ears that make RPAs—which are meant to replace those eyes and ears—more accurate. This paradox could be a fundamental barrier to executing the strategy. While indefinite troop presence was also not a feasible option in Afghanistan, the United States now finds itself flying above while blind below in an environment where it can expect mistakes and high civilian costs from kinetic utilization of RPAs. This paradox and the risk to civilians it creates thus has short- and longer-term negative repercussions and should be seen as carrying high—some might even say prohibitive—levels of risk.

1.1 Develop a framework for RPA utilization in OTH-CT missions that considers longer-term implications and elevates decisionmaking.

Too often, the targeting of a specific individual is the strategy rather than a tactical step on the road to addressing terrorism and violent extremism. Considering each action as part of a strategic pursuit of an overarching goal—e.g., responding to and preventing violent extremism—would necessitate consideration of broader implications such as civilian risk and the potential to create more enemies in the future.

Military leaders should incorporate more robust consideration of longer-term implications into all RPA-strike decisionmaking processes. Doing so will likely require the elevation of approval authorities and the formation of an interagency task force, including senior civilian officials, for strategic-level decisionmaking. The Center for Civilians in Conflict has advocated for such a body to be composed of the director of National Intelligence and senior officials from the National Security Agency, the CIA, and the Departments of State, Justice, and Defense. The task force, in cooperation with operational agencies, should also conduct or commission in-depth assessments of RPA operations and evaluate the second- and third-order effects of their utilization in the longer term. Part of this assessment should include, as a RAND study recommends, the consistent application of a legal analytical framework, ensuring the right strategic balance of risk, reward, and adherence to international law. Temporary efforts by the Biden administration in early 2021 to require high-level approval for counterterrorism-related RPA strikes outside of active conflict zones should be made permanent.

1.2 Strike networks, not signatures.

The OTH-CT strategy should emphasize using air assets—particularly RPA assets—primarily for SIGINT-gathering purposes but should stop short of using the identification of patterns alone as cause for kinetic action. Accordingly, the Biden administration should halt the utilization of signature strikes, and Congress should explore legislation to make them permanently unavailable as kinetic options.

Targeting specific individuals will likely continue to be a tactical necessity, especially if credible intelligence points to an imminent threat. In al-Qurayshi’s case, the need could even be presented in strategic terms. However, a more effective over-the-horizon strategy would focus on dismantling target networks rather than relying on tactics (e.g., signature strikes) that carry great risks to civilians. While removing a leader or network hub can thwart a group’s operations in the short term, these individuals are replaceable, and the terrorist threat persists. Experienced groups such as the Islamic State and al-Qaeda have opaque command structures and contingency plans, having learned how to mitigate the effects of leadership changes. Additionally, the decentralization and fragmentation of extremist groups across the Middle East and into sub-Saharan Africa mean they can operate without direction from core leadership.

RPAs can be effective intelligence assets. Rather than emphasizing their loiter time and small payload for use in aerial strikes against core leadership, military planners should focus RPA use on the extreme intelligence inversion that has resulted from losing many of the sensors previously available to forces on the ground. Given the strategic and longer-term risks of RPA strikes presented in this brief, seamlessly developing and building out information on extremist networks should be the priority when using all available technology. In the absence of on-the-ground human intelligence (HUMINT) in some environments, aerial reconnaissance can map out the relationships and operations between groups, which can in turn inform a broader, more integrated strategy for containing terrorist networks. If the picture of a particular terrorist network remains unclear, that should indicate plans to strike it are immature and foreshadow their ineffectiveness in hitting any one subcomponent.

1.3 Understand that RPAs will not eliminate over-the-horizon risks, though a fuller suite of technological tools might.

The situation in the Middle East, from which much of the counterterrorism architecture of the past 20 years derived, has changed significantly from operational and political perspectives. It has also shifted drastically from a force-posture perspective. Old methods of force will not necessarily generate the same effects, nor should planners rely more heavily on RPAs just because alternative options are less clear.

Traditionally, any time the U.S. military consolidates control and decisionmaking, it does so as a direct result of risk. With the elimination of the risk to ground forces in Afghanistan, as well as reductions elsewhere, the risk pendulum has clearly swung from imminent self-defense to strategic risk of inaccurate offensive action. This friction may drive planners to more covert action to avoid public blowback from error, thereby undermining international transparency and security reassurances with regional partners. Strategists and planners should revisit the multi-pronged, institutional approaches relearned over the past two decades of counterinsurgency to focus again on restoring trust in security institutions.

Strategic planners will need to consider plans and posture in the context of changed operational environments and newly available OTH-CT technologies. The choice should not be seen as binary, pitting OTH-CT technologies against boots on the ground—or, if the latter is not operationally or politically feasible, OTH-CT technologies against nothing. RPAs with increasingly sophisticated intelligence-gathering tools and more precise targeting abilities that limit collateral damage might be useful, but RPA data should be compared to those collected by space-based intelligence, surveillance, and reconnaissance (ISR) assets, all cross-referenced against artificial intelligence and machine learning (AI/ML) analysis of available (classified and open-source) data. Where possible, encrypted communications tools should be used to maintain HUMINT and SIGINT capacities even when physical presence is less feasible. OTH-CT technologies—from RPAs to ISR to AI/ML and beyond—should be seen as a suite of tools to be deployed in different contexts as determined by the nature of the threat and operational environment.

2. If RPAs are determined to be the right tool:

2.1 Increase transparency and accountability.

First and foremost, policies and procedures on employing RPAs should be made clear and public. RPA targeting is often intentionally kept covert and hidden to support sensitive operations; however, the results of miscalculation or misinterpretation are not hidden. If RPAs are to be relied upon more heavily, they should be treated as transparently as other military operations that have a high chance of civilian impacts.

As warfighting technology advances rapidly, the public will demand more accountability. How the public perceives the use of RPAs—including failing to apply minimum standards for civilian protection and a lack of accountability when things go wrong—has cascading effects on rules of engagement, international community acceptance, alliance formation, geographic access or denial, and (critically) civilian trust in military institutions. As the United States considers selling RPAs to international partners, U.S. precedent in how it interprets international laws and norms concerning its own drone use will become increasingly important for informing the practice of others using RPAs. Failure to apply minimum standards to the United States’ own practice will lessen its ability to inform partners’ adherence to international law.

The current lack of transparency leads to a public perception that those utilizing RPAs are not held accountable for mistakes, even if that may not always be the case; in courts-martial, for example, proceedings and even verdicts are often either classified or kept sensitive to protect personal identities. It also promotes the perception that the United States does not support international human rights law, under which the use of lethal force can only be legally justified if violence (or the threat of such violence) emanates from the targeted person. The Department of Defense’s own Law of War Manual clearly states that harm must be minimized to anyone other than the person who poses an imminent threat. Both international humanitarian and human rights law also underscore the importance of proportionality, wherein the use of force is commensurate with the actual threat posed. These international laws stress the need to have clearly articulated policies governing the use of RPAs and the provision of support to families of victims. In the absence of these policies, civilian casualties from RPAs undermine U.S. credibility abroad, especially when there is little transparency and there seems to be little accountability for mistakes made.

While there may be value in highly classifying information on RPA plans, posture, and execution, over time datasets should be made available for research, including research commissioned and funded by independent foundations and by the Department of Defense, intelligence agencies, and other RPA users. After his 2013 CNA study showed how much deadlier drones were than crewed aircraft, Dr. Larry Lewis led a 2021 study on using artificial intelligence to mitigate civilian harm, drawing on research from the Joint Civilian Casualty Study, in which he and Dr. Sarah Sewall concluded that data-driven modeling shattered previous military assumptions to prevent or mitigate civilian collateral effects. The authors’ unrestricted access to battlefield data, granted by General David Petraeus, resulted in a study that prompted significant changes to military planning and execution for both crewed and remotely piloted aircraft strikes—results only possible due to high-level access and transparency.

Future research should focus on how to improve over-the-horizon deployment of RPAs in concert with other technologies, including on how to limit civilian casualties and what the longer-term strategic implications of RPA utilization are, especially for terrorism and violent extremism. To the extent possible, the information should be made public, though in rare and exceptional circumstances classified research can be considered instead.

2.2 Improve intelligence gathering and sharing.

The United States should strengthen collaboration with overseas military partners. Integrating multiple sources of information (aerial surveillance, SIGINT, and HUMINT) would minimize the possibility of unintended consequences. RPAs are currently equipped to gather and aggregate aerial surveillance and SIGINT effectively, which could help track known bad actors with transnational ambitions and support kinetic ground operations. When this information is corroborated by on-the-ground HUMINT, RPAs can even be an effective strike force in rare instances, especially since drone-strike technology is increasingly capable of targeting individuals with limited collateral damage. Without HUMINT, utilizing RPAs for OTH-CT purposes will be fraught with short- and longer-term risk.

General Kenneth McKenzie, Jr., commander of U.S. Central Command and focal point for the administration’s OTH-CT strategy, said in September 2021 that RPA strikes need to be carried out “under a higher standard” than the “reasonable certainty” under which the tragic Kabul strike was ordered. The Biden administration should make this higher standard into an official policy. Implementing it would then require greater utilization of technology tools (e.g., RPAs, space-based ISR, and AI/ML) and HUMINT collection capabilities, including via increased collaboration with friends and allies. Intelligence support to civil societies could help uphold norms regarding human rights and rule of law among institutions we recognize. In Afghanistan, this could mean coordinating a counterterrorism strategy with France or India, given their interest in containing threats emanating from there. Others have suggested establishing military partnerships in Central Asia or with the Gulf states. Since the withdrawal of troops from Afghanistan, and with Chinese and Russian interests sensing opportunity, the United States should also prioritize reinvesting in partnership and a shared security narrative with countries such as Pakistan against terrorism and violent extremism, though doing so will be neither straightforward nor easy and would need to be done on careful terms.

2.3 Above all, protect civilians.

This brief shows that avoiding civilian casualties is the right and strategically important thing to do. U.S. Marine Brigadier General Larry Nicholson put the need to protect civilians in more colorful terms when speaking with Afghan tribal elders in 2010: “In counterinsurgency, the people are the prize.”

Conclusion

This brief attempts to bridge the gulf in understanding and approach to RPA utilization—especially for counterterrorism reasons—between the advocacy community, congressional leaders, and military planners. It makes the case for why thinking more strategically and over the horizon about RPA utilization is in our strategic interest. Importantly, this is also the right thing to do from human rights and humanitarian perspectives. While air strikes may help target individual leaders in the short term and provide air cover during ground kinetic operations, they likely have only a marginal effect on adaptive and increasingly decentralized terrorist and violent extremist organizations. At the same time, without a risk-based, robust framework for RPA utilization for OTH-CT purposes, they run the real risk of unintended and counterproductive longer-term strategic consequences.

The consequences of the August 2021 Kabul drone attack were both tragic and tragically common. Zemari Ahmadi was transporting water around town in his Corolla. Even if the intelligence was not faulty and he was intent on violence, the indiscriminate collateral damage of such an attack should still give us pause. In this case, as with so many others over the past two decades, the intelligence was wrong. It is not hard to imagine the justifiable anger that his friends, family, and many other Afghans felt after the “tragic mistake.” One can only hope that the anger does not turn into violence, or even violent extremism, and a loss of trust in military institutions—though it is also not hard to understand why it would.
