David Alayón

When I published the article “Understanding Blockchain”, many of you wrote to ask whether I could write one dedicated to Artificial Intelligence. The truth is that I hadn’t had time to get to it, and before sharing anything I wanted to finish some courses so that my recommendations would add value.

The problem with Artificial Intelligence is that it’s much more fragmented than Blockchain, both technologically and in its use cases, which makes it a real challenge to condense all the information and share it meaningfully. Even so, I have tried to summarize the key concepts and compile interesting sources and resources. I hope it helps you as much as it helped me!

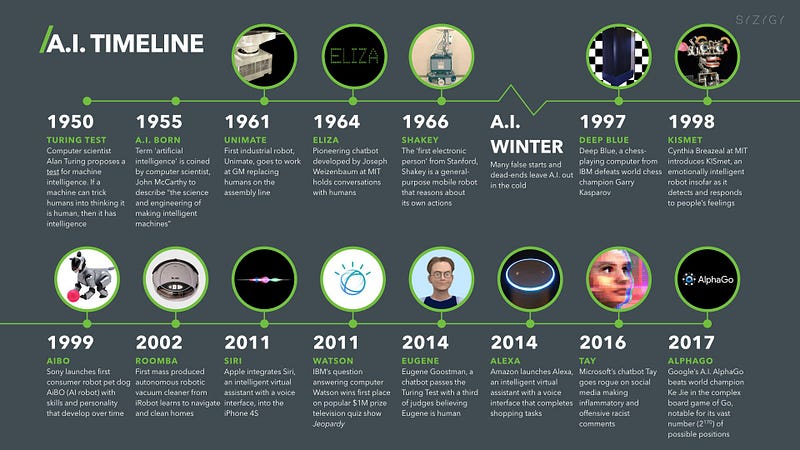

Let’s start with a little history. The timeline you see is taken from this article and shows the most important milestones of Artificial Intelligence. The idea of AI goes back to Alan Turing, who defined a test, the Turing Test, to measure a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. A few years later John McCarthy coined the term officially at the famous Dartmouth Workshop, with the following phrase: “every aspect of learning or any other characteristic of intelligence can, in principle, be described with such precision that a machine can be made to simulate it. We will try to discover how to make machines use language, form abstractions and concepts, solve problems now reserved for humans, and improve themselves”. The rest of the milestones you see, mainly Deep Blue and AlphaGo, will appear several times throughout the article. I also recommend watching the ColdFusion video, which adds more detail and nuance about the history of Artificial Intelligence.

Something really interesting in the video is the list of 7 aspects of Artificial Intelligence defined in 1955, which are still valid today. So far we have made some progress on only three of them: programming a computer to use general language, finding a way to determine and measure the complexity of problems, and self-improvement. We could also say that we are getting started with “randomness and creativity”, with examples like the trailer for Morgan, the script of “Sunspring” (2016), Watson’s perfumes, and projects like AIVA, Magenta or My Artificial Muse.

Therefore, we could say that Artificial Intelligence refers to machines or computer programs that learn to perform tasks that require some type of intelligence and are usually performed by humans. And when we talk about types of intelligence, it’s worth recalling Jack Copeland’s reflection on what intelligence is: “the dominant thought in psychology considers human intelligence not as a single ability or cognitive process, but rather as a set of separate components. Research in AI has focused primarily on the following components of intelligence: learning, reasoning, problem solving, perception, and comprehension of language”.

That said, let’s look at the different types of Artificial Intelligence. The video mentions two: Weak AI, also called Artificial Narrow Intelligence (ANI), which allows computers to outperform humans in some very specific tasks (the most famous example is IBM Watson); and Strong AI or Artificial General Intelligence (AGI), the ability of a machine to perform the same intellectual tasks as a human being (we are far from reaching it). There is a third level called Artificial Superintelligence (ASI), in which a machine possesses an intelligence that far surpasses the brightest and most gifted human minds in the world combined.

Another way to categorize it is in four levels: Reactive Machines, which simply react to one or several stimuli but cannot build on previous experiences or improve with practice (IBM Deep Blue); Limited Memory, machines that can retain and use data for a short period of time but cannot add it to a library of experiences (self-driving cars); Theory of Mind, machines that imitate our mental models and have thoughts, emotions and memories (none exist yet); and finally Self-Awareness, or conscious machines, something that stays in the realm of science fiction (for now).

We have now reached the ANI or Limited Memory level, with the intention of making the next leap but with much uncertainty as to how and when we will achieve it. If we focus on the first categorization, there is a pioneering project that could be laying the groundwork for achieving AGI (although it’s still light years away): AlphaGo, or its latest version, AlphaZero. AlphaZero uses a totally different approach to learning than the rest of the AIs we have seen. The previous versions used expert knowledge (humans telling the system what’s right) or needed a lot of data (the version of AlphaGo that beat Lee Sedol at Go learned from thousands and thousands of games). In contrast, AlphaZero uses Reinforcement Learning in the form of self-play, that is, it learns by playing against itself. In this article you can see what that means and how, after 40 days of learning this way, it surpassed all its predecessors and, by extension, became the best in the world.

The new debate around DeepMind, the creators of AlphaGo, who were acquired by Google in 2014, is whether this approach is the right one. They work with tabula rasa, that is, they start with a blank canvas where Artificial Intelligence learns completely from scratch. Another approach would be for Artificial Intelligence to have a pre-wired base, as Chomsky proposes with his Theory of Universal Grammar, or to be preloaded directly with a layer of “common sense”, like the one Etzioni is creating at the Allen Institute for Artificial Intelligence.

Returning to the present, and with these basic concepts understood, we can see the immense range of AI applications and how many of them are already in our hands or in our day-to-day lives: virtual assistants, translators, eCommerce or social network recommendations, chatbots… This is just starting and is going at full speed! If we look a little into the future, it’s clear that AI is going to change companies, industries, countries and the whole world, and it’s up to us to think about how we want it to be and to make the right decisions in the present. Gerd Leonhard has spoken a lot about this topic, and along with him many other writers, thinkers and futurists have explained their visions, mainly about using Artificial Intelligence to augment and complement us, not to replace us.

Agentive Technology. Artificial Intelligence should augment us, giving us tools that take work off our hands and carry it out autonomously.

Centaurs. It has been shown that the combination of AI and humans is better than AI alone because their competences complement each other (Kasparov proved it on multiple occasions).

Multiplicity. The key lies in the collaboration between Artificial Intelligence and Amplified Intelligence (human + artificial).

AI Superpowers. Dr. Kai-Fu Lee includes variables such as compassion, love and, I would say, empathy, placing AI at the center.

Then we have Yuval Noah Harari and Tristan Harris talking about dataism and calling for deep reflection and ethics, or Elon Musk and another group of technologists and scientists developing initiatives to raise awareness that we are on our way to self-destruction and creating projects like OpenAI for a “safe” Artificial Intelligence. I personally don’t yet see this Black Mirror scenario, but I do believe that we have reached the point where we should start thinking about where we want to go and try to create a kind of world committee to make decisions at the planetary level and as a species.

To finish this first block, here you have a list of books on AI (I highly recommend Nick Bostrom’s) and a list of films (I highly recommend the last two: Her (2013) and Ex Machina (2015)).

ADVANCED

We’re moving to the next level! Regardless of the category, the technological foundation of Artificial Intelligence rests mainly on two pillars: Symbolic Learning and Machine Learning. Curiously, the first pillar was the one that started everything, but with the birth of Machine Learning, and specifically Deep Learning, all efforts have focused on the second (although many technologists are thinking about returning to the first). Before we move on, take a look at this video by Raj Ramesh:

It’s really interesting how it synthesizes the different branches of Artificial Intelligence. I think it’s clear that the most promising branch, and the one that has come to stay, is Machine Learning, which is nothing more than a system capable of taking large amounts of data, developing models that can successfully classify it and then making predictions on new data. To understand this approach a little better, watch this video by CGP Grey:

One of the most interesting things is that these models are not programmed; they arise from training, and there comes a point where no human, not even the programmers themselves, can understand how they work. By now enough new “words” have come up, so I’ll leave you with an AI dictionary for beginners and one more reading: Difference between Machine Learning, Deep Learning and Artificial Intelligence.
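To make the idea of a model that “arises from training” a bit more concrete, here is a minimal sketch in plain Python. It is not taken from any of the videos or articles above: the nearest-centroid classifier, the function names `train` and `predict`, and the toy animal measurements are all assumptions chosen for illustration. The point is only that nobody writes the rule “cats are small”; the rule falls out of the labeled examples.

```python
from collections import defaultdict


def train(samples):
    """Learn one centroid (average point) per label from (features, label) pairs."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    # The "model" is nothing more than the average point of each label.
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(model, features):
    """Return the label whose centroid is closest to the new, unseen point."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(model, key=lambda label: squared_distance(model[label]))


# Made-up training data: (height in cm, weight in kg) -> label.
training_data = [
    ((20.0, 4.0), "cat"),
    ((25.0, 5.0), "cat"),
    ((60.0, 30.0), "dog"),
    ((70.0, 35.0), "dog"),
]

model = train(training_data)
print(predict(model, (22.0, 4.5)))   # cat
print(predict(model, (65.0, 28.0)))  # dog
```

Real systems use far richer models and far more data, but the two steps are the same: fit a model to examples, then use it on data it has never seen.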

Now it’s time to go deeper into Deep Learning, the most advanced approach to developing Artificial Intelligence today. After reading many articles, watching many videos and taking some courses, I can say with certainty that an ideal way to get a complete overview of Deep Learning, covering basic concepts and technical terminology and even getting to know some tools and platforms, is DeepLearningTV. I don’t know how long it will stay active (I recommend you download the videos) because it’s been a while since their last update and I don’t see any company or community behind it… Their videos are pure gold! Here you have the complete list with the 31 episodes:

Impressive, isn’t it? I think what needs a little more development are the frameworks or tools for Machine / Deep Learning. The series covers TensorFlow, Caffe, Torch, DeepLearning4j and Theano, but there are many others like Keras, AWS Deep Learning AMI, Google Cloud ML Engine or Microsoft Cognitive Toolkit (CNTK). As complementary material, here you have some links with comparisons of these platforms:

Let’s summarize some key points to close this block:

Types of learning: Supervised learning (the data contains both inputs and desired outputs, and the model is trained on that labeled training data); Unsupervised learning (the data contains only inputs that have not been tagged or classified, and common elements are identified); and Reinforcement learning (instead of focusing on performance, it seeks a balance between exploration of new knowledge and exploitation of current knowledge).
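The balance between exploration and exploitation is easier to see in a tiny experiment. The sketch below uses an epsilon-greedy strategy on a simulated three-armed slot machine. This is a classic textbook setup and not something taken from the sources above; the function name `run_bandit`, the payout probabilities and the value of `epsilon` are assumptions for illustration.

```python
import random


def run_bandit(payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a simulated multi-armed bandit."""
    rng = random.Random(seed)
    estimates = [0.0] * len(payout_probs)  # current knowledge about each arm
    counts = [0] * len(payout_probs)       # how often each arm was pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            # Exploration: try a random arm to gain new knowledge.
            arm = rng.randrange(len(payout_probs))
        else:
            # Exploitation: pull the arm that currently looks best.
            arm = max(range(len(payout_probs)), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental average of the rewards observed for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


# Hypothetical payout probabilities, unknown to the agent.
estimates, counts = run_bandit([0.2, 0.5, 0.8])
print(estimates)
print(counts)
```

With `epsilon=0.1` the agent spends roughly one pull in ten exploring. Setting it to 0 risks getting stuck on a mediocre arm, while setting it to 1 means never using what has been learned.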

Learning models: there are many, such as basic regression (linear, logistic), classification (neural networks, naive Bayes, random forest…) and cluster analysis (k-means, anomaly detection…). Here you have an infographic to use as a cheat sheet and a video that explains 7 of those models in a little more detail.
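As a small illustration of one of the models named above, here is a sketch of k-means on one-dimensional data. It is a simplified version written for this article, not a reference implementation: the function name `k_means_1d`, the naive choice of the first `k` points as starting centroids and the sample numbers are assumptions. Note that no labels are involved, which is what makes it unsupervised.

```python
def k_means_1d(points, k, max_iterations=100):
    """Group 1-D points into k clusters by repeatedly averaging each group."""
    # Naive initialization (an assumption of this sketch): the first k points.
    centroids = [float(p) for p in points[:k]]
    clusters = [[] for _ in range(k)]
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: each centroid moves to the mean of its cluster.
        new_centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # nothing moved, so the grouping is stable
        centroids = new_centroids
    return centroids, clusters


centroids, clusters = k_means_1d([1, 2, 3, 10, 11, 12], k=2)
print(centroids)  # [2.0, 11.0]
print(clusters)   # [[1, 2, 3], [10, 11, 12]]
```

Production libraries use smarter initialization and work in many dimensions, but the assign-then-average loop is the core of the method.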

As you can see, Artificial Neural Networks (ANNs) can be used with all three types of learning and fall within the classification spectrum. I’m not going to go deeper into the types inside each one, like CNN or RNN, which you’ll see in the next section, but I do want to share with you an article by Matt Turck about how far we are from AGI: Frontier AI: How far are we from artificial “general” intelligence, really?. Now that we have a solid understanding of how DeepMind and AlphaGo work (Deep Learning + Reinforcement Learning), another key term comes up: transfer learning. It’s about the possibility of applying knowledge acquired in some tasks to new tasks. DeepMind is tackling it with PathNet, a network of trained neural networks. There is another really interesting article on this topic, with positive results on knowledge transfer between simple games. Obviously, extrapolating this to the real world is still science fiction, but it’s a great start.

Returning to Turck’s article, it lists several really interesting approaches to ANNs, such as Recursive Cortical Networks (RCN), CapsNets, Differentiable Neural Computers (DNC)… In short, fusing neuroscience with AI. In the article he mentions a workshop, “Canonical Computation in Brains and Machines”, which deals specifically with these topics and whose content is uploaded to YouTube. I haven’t had time to watch it in full (24 lectures of 40 minutes…) but here you have the complete list (start with What are the principles of learning in newborns?).

TECHNICAL

And we’ve reached the final level! In this section I’m not going to explain any new concepts in detail; instead, I recommend several online courses, free and paid, for you to take. Obviously, they are all technical courses: some require programming experience and others just a solid mathematical base. The important thing about these courses is that you acquire the knowledge you need. Some of you will want to go all the way until you can program an AlphaZero (as they describe in this article) and others, as in my case, will want to understand the technological foundations and extrapolate from there. Let’s start!

AI For Everyone — Andrew Ng

I don’t know if you know Andrew Ng, co-founder of Coursera, director of Stanford’s AI lab and former Chief Scientist at Baidu. I first came across him through the free book he released on Machine Learning, and I recommend the course he has launched on Coursera, 100% online and free.
