The Department of the Navy (DON) collects more data each day than the total amount stored in the Library of Congress. Yet the DON is organized and funded around systems and hardware and lacks the tools to ensure the information is used to its full potential.

In artificial intelligence (AI), categorization is commonly defined as assigning something (e.g., a person or piece of equipment) to a group or class. For example, a specific large piece of equipment in the ocean could be assigned to several categories at once: warship, aircraft carrier, flagship, and U.S. Navy-owned. However, assigning entities to groups is only the tip of the categorization iceberg, because categorization also includes naming and defining categories; arranging categories into frameworks; documenting the basic methods of categorization; and agreeing on and using an upper-level framework of categories. Frameworks of categories are critical because they provide context for individual data elements and serve as job aids for people assigning appropriate machine-readable labels to individual instances or entities.
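As a minimal sketch of the distinction between assigning labels and having a framework, the Python below places the four categories from the example into a small parent-child structure. The top-level groupings ("ship," "role," "ownership") and the function names are assumptions made for illustration; they do not come from any Navy standard.

```python
# Hypothetical framework: each category maps to its broader parent category
# (None marks a top-level category). Only the four leaf labels come from the
# example in the text; the groupings above them are assumed.
FRAMEWORK = {
    "ship": None,
    "warship": "ship",
    "aircraft carrier": "warship",
    "role": None,
    "flagship": "role",
    "ownership": None,
    "U.S. Navy-owned": "ownership",
}


def ancestors(category):
    """Return the chain of broader categories above `category`, nearest first."""
    chain = []
    parent = FRAMEWORK[category]
    while parent is not None:
        chain.append(parent)
        parent = FRAMEWORK[parent]
    return chain


def expand(labels):
    """Return the assigned labels plus every broader category they imply."""
    result = set(labels)
    for label in labels:
        result.update(ancestors(label))
    return result


# A person (or an algorithm) assigns three labels to one entity.
assigned = {"aircraft carrier", "flagship", "U.S. Navy-owned"}
# The framework supplies the context: an aircraft carrier is also a warship.
implied = expand(assigned)
```

The labels alone say nothing about how "aircraft carrier" relates to "warship"; only the framework lets a program infer that relationship without anyone tagging it by hand.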

Categorization is far more important to the naval services than is generally recognized for three reasons: first, it is the foundation on which elemental AI machine learning is built; second, AI offers significant potential to improve operational effectiveness and efficiency; and third, categorization “ensure[s] information is used to its full potential.” Machine learning and “deep” learning algorithms attract more attention, but without fundamental categorization, the more conspicuous elements of AI do not function.

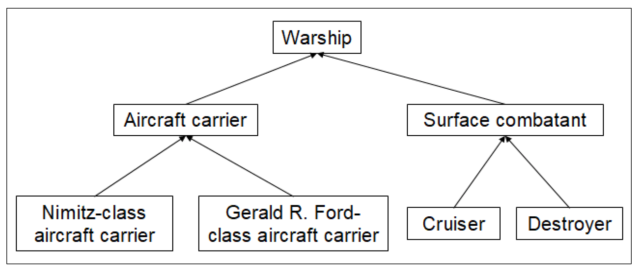

The figure above is a framework showing six categories of warships.1 Each category by itself is useful. However, only the framework provides the context for categorizing types of warships. The framework also is adaptable: Other types of Navy ships can be added. This makes it possible for machine-learning algorithms to identify and categorize information about Navy ships.


A person could do all the tagging by processing and marking data, reports, articles, and other literature about these types of ships with standard names. He or she also could associate nonstandard names with the standard ones, such as “tin can” for destroyer. This person might use a variation of the chart above as a job aid.

A machine-learning algorithm trained to do this work could review and tag all the documents in a repository—more documents than it is practical for even a large number of people to review. However, such an algorithm must be trained. The training requires individual people to review a sample of the documents, labeling and associating nonstandard terms with standard ones. The New York Times reports that labeling training data “account(s) for 80 percent of the time spent building A.I. technology.” If you were not aware that labeling of training data takes four times as much time as using the labeled data to train an algorithm, it is probably because, as the Times points out, “Tech companies keep quiet about this work.”
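The labeling step described above, associating nonstandard terms with standard ones, can be sketched in a few lines of Python. Only the pairing of "tin can" with destroyer comes from the text; the other names in the table, and the function name `tag`, are assumptions added for illustration. This is a simple lookup, not a trained algorithm, but its output is the kind of labeled sample a person would produce for training.

```python
import re

# Standard category name -> names that may appear in documents.
# "tin can" for destroyer is from the text; the other entries are
# illustrative assumptions.
SYNONYMS = {
    "destroyer": ["destroyer", "tin can"],
    "aircraft carrier": ["aircraft carrier", "flattop"],
}


def tag(text):
    """Return the sorted standard names whose standard or nonstandard
    forms (singular or simple plural) appear in `text`."""
    lowered = text.lower()
    found = set()
    for standard, names in SYNONYMS.items():
        for name in names:
            pattern = r"\b" + re.escape(name) + r"s?\b"
            if re.search(pattern, lowered):
                found.add(standard)
    return sorted(found)


labels = tag("The old tin can escorted the flattop out of port.")
```

Every document tagged this way carries the same standard labels regardless of which slang its author used, which is what makes the documents comparable downstream.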

George Washington and Continental Army C2

The U.S. military has recognized the importance of standard frameworks since long before the advent of AI. General George Washington spent the winter at Valley Forge putting the full force of his office behind developing and implementing the framework of commands he used to control maneuvers in battle. The result was published in Regulations for the Order and Discipline of the Troops of the United States.

These commands were needed because the Continental Army was formed by aggregating units from the various colonies. Since the colonies’ militias lacked standardization, the units in General Washington’s Army continued to use their local and disparate systems of commands until the standard commands were implemented. Battlefield leaders who understood the commands General Washington used had to translate them into commands used by their own units. This self-induced “friction in war” unnecessarily impeded execution of orders. Thus, Washington’s Regulations were key to improving the Army’s command and control (C2) and efficiency on the battlefield.

Two hundred and forty years later, today’s Defense Department has the same basic problem: Congress, the armed services, other DoD organizations, and communities of interest agree on the need for frameworks of categories but have developed and implemented hundreds, possibly thousands, of them in disparate silos. These frameworks are promulgated in laws, policy, doctrinal publications, standards, DoD forms, and other authoritative documents. What is missing, but necessary, is an upper-level framework of categories to which today’s many disparate frameworks of categories can be mapped and reconciled. Developing and implementing such a framework will require senior leaders’ involvement.
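To make "mapped and reconciled" concrete, here is a hedged Python sketch of a crosswalk from two silo-specific frameworks to a shared upper-level one. The silo names, local codes, and upper-level categories are all hypothetical; they stand in for whatever an actual upper-level framework would define.

```python
from collections import Counter

# Hypothetical crosswalk: (source framework, local category) -> upper-level
# category. None of these codes come from a real DoD system.
CROSSWALK = {
    ("logistics", "TRK"): "truck",
    ("logistics", "TNK"): "tank",
    ("maintenance", "wheeled cargo vehicle"): "truck",
    ("maintenance", "main battle tank"): "tank",
}


def reconcile(records):
    """Count records by upper-level category and list any record whose
    local category has no mapping yet."""
    counts = Counter()
    unmapped = []
    for source, local in records:
        upper = CROSSWALK.get((source, local))
        if upper is None:
            unmapped.append((source, local))
        else:
            counts[upper] += 1
    return counts, unmapped


records = [
    ("logistics", "TRK"),
    ("maintenance", "wheeled cargo vehicle"),
    ("maintenance", "main battle tank"),
    ("maintenance", "forklift"),
]
counts, unmapped = reconcile(records)
```

The unmapped list matters as much as the counts: it shows where a silo's framework has categories the upper-level framework does not yet cover.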

A more recent example of such leadership is Secretary of Defense Robert McNamara’s standardization efforts. When he assumed office in 1961, McNamara knew DoD needed to standardize categorical frameworks across the department. His aim was the same as General Washington’s: to improve effectiveness and efficiency. One such standardization effort was developing the mission designation system (MDS) for aircraft across DoD. Secretary McNamara’s management efforts are often criticized today, but his personal involvement in this type of effort standardized frameworks of categories across DoD, achieving efficiencies that are now taken for granted.

Operation Desert Storm – C2 and Intelligence

In 1991, one of this article’s authors led the Marine Corps’ Battlefield Assessment Team’s assessment of C2 by Marine Corps forces in Operation Desert Storm. Probably the most important finding was that, on the days with the most operational activity, the I Marine Expeditionary Force (I MEF) received so many intelligence reports that the intelligence section stopped counting at 6,000. The section did not know how many reports it received, and probably fewer than half were read. Clearly, future intelligence analysts will require computers that can assume much of the work done by people in 1991, because the number of intelligence reports is likely to increase dramatically. Even if the number of personnel available to process intelligence increases, it will not grow at nearly the same rate as the reports.

ICODES and AI for Rapid Shipload Planning

The sailors and civil servants who plan shiploading also need more data-processing capacity than existed for Desert Storm. When planning a shipload, these professionals need to generate and process detailed data, including dimensions and weight of every vehicle and other cargo item to be loaded. Load planners also need to implement rules in hazardous materials regulations.

Until the late 1990s, developing a load plan was largely manual, with only supplemental computer support. A unit whose equipment was to be shipped to Saudi Arabia would receive a copy of the layout of the ship and then prepare a plan good for that ship and only that ship. This detailed planning addressed multiple factors, including three particularly important ones. First, every square foot of space had to be used. Second, improper loading or weight distribution could put too much stress on the hull and damage the ship. Third, cargoes included hazardous materials that are safe only as long as safety requirements are met: some must be loaded on weather decks to disperse hazardous vapors; others must be kept separate to preclude vapors mixing and forming a dangerous combination. If, as sometimes happened, the ship that showed up to be loaded was different from the one for which the load plan had been developed, there was no fast way to develop a new plan or adjust an existing one.

After Operation Desert Storm, the load-planning community sought a computer-based tool that would not only support planning loads, but also—probably more important—rapid replanning. The result was the Integrated Computerized Deployment System (ICODES). It was first fielded in 1997; employed symbolic AI; and had one or more frameworks of categories (called ontologies).

ICODES’ ontology modeled the ship’s layout, cargos (e.g., tanks and trucks), and hazardous materials. In the past 22 years, ICODES has been extended to aircraft, trains, staging areas, and other load-planning tasks. As useful as it is, people familiar with ICODES are almost always unaware of three things: First, ICODES was developed as a symbolic AI solution; second, its success rested on identifying and developing frameworks of categories; and third, there was support from the highest level of the then–Military Traffic Management Command, now the Military Surface Deployment and Distribution Command (SDDC).
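The load-planning factors described in this section (space, weight, and hazardous-material placement) lend themselves to a rule-based check over categorized data. The Python below is a minimal sketch under stated assumptions: the class names, hazard labels, and rules are hypothetical, and it does not represent how ICODES itself is implemented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Space:
    name: str
    area_sqft: float
    max_weight_lb: float
    weather_deck: bool


@dataclass(frozen=True)
class Cargo:
    name: str
    area_sqft: float
    weight_lb: float
    hazard: Optional[str] = None  # hazard category, if any


# Hypothetical rules, not taken from any hazardous-materials regulation.
NEEDS_WEATHER_DECK = {"flammable vapor"}
INCOMPATIBLE = {frozenset({"oxidizer", "flammable vapor"})}


def check_plan(spaces, plan):
    """Return a list of rule violations. `plan` maps a space name to the
    list of Cargo items assigned to that space."""
    problems = []
    for space in spaces:
        items = plan.get(space.name, [])
        if sum(c.area_sqft for c in items) > space.area_sqft:
            problems.append(f"{space.name}: area exceeded")
        if sum(c.weight_lb for c in items) > space.max_weight_lb:
            problems.append(f"{space.name}: weight exceeded")
        hazards = {c.hazard for c in items if c.hazard}
        if not space.weather_deck and hazards & NEEDS_WEATHER_DECK:
            problems.append(f"{space.name}: cargo requires weather deck")
        for pair in INCOMPATIBLE:
            if pair <= hazards:
                problems.append(f"{space.name}: incompatible hazardous cargo")
    return problems


hold = Space("hold 1", area_sqft=1000, max_weight_lb=100_000, weather_deck=False)
deck = Space("weather deck", area_sqft=500, max_weight_lb=50_000, weather_deck=True)
truck = Cargo("truck", area_sqft=200, weight_lb=20_000)
fuel = Cargo("fuel drums", area_sqft=50, weight_lb=5_000, hazard="flammable vapor")

good = check_plan([hold, deck], {"hold 1": [truck], "weather deck": [fuel]})
bad = check_plan([hold, deck], {"hold 1": [truck, fuel]})
```

Because the rules refer to categories of space and cargo rather than to one particular ship, checking a plan against a different ship means passing a different list of spaces, which is the property that makes rapid replanning feasible.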

Global Force Management Data Initiative

In the early 2000s, then–Marine Lieutenant General James Cartwright was the J-8 (force structure, resources, and assessment) of the Joint Staff. One of DoD’s pressing challenges was how to manage U.S. forces globally, a task made more difficult as the armed forces’ declining size increased the need to deploy partial units (e.g., sending only certain members, capabilities, or equipment from a unit). Manually tracking decomposed unit-level organizations throughout the process was difficult, and determining residual capability and tracking the remaining partial units were challenging. DoD lacked an automated process to do so.2

This is very similar to the ship-loading problem, except the domain is not a ship but all of DoD’s forces wherever they are. One of General Cartwright’s important contributions to a solution was championing the Global Force Management Data Initiative (GFM-DI). The core of the GFM-DI is a framework of categories focused on representing force structure. With the ICODES ontology, a ship’s load is represented down to the single-item level of detail (e.g., truck). With the GFM-DI, a force can be represented down to the single-item level of detail (e.g., truck and person). Just as Secretary McNamara’s standardization had been resisted 40 years earlier, so too was General Cartwright’s GFM-DI. But today, the GFM-DI is being implemented.
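The idea of representing a force down to single items, and then tracking what remains after a partial unit deploys, can be sketched as a small tree in Python. The `Unit` class, its method names, and the unit sizes are hypothetical; this is an illustration of the concept, not the GFM-DI data model.

```python
from collections import Counter


class Unit:
    """A unit holds its own items and may have subordinate units."""

    def __init__(self, name, items=None, subunits=None):
        self.name = name
        self.items = Counter(items or {})
        self.subunits = list(subunits or [])

    def holdings(self):
        """Items held by this unit and all of its subordinate units."""
        total = Counter(self.items)
        for sub in self.subunits:
            total += sub.holdings()
        return total

    def detach(self, items):
        """Remove a partial detachment from this unit's own items and
        return it; raise ValueError if the unit lacks what is requested."""
        wanted = Counter(items)
        short = wanted - self.items  # keeps only the shortfalls
        if short:
            raise ValueError(f"{self.name} lacks {dict(short)}")
        self.items -= wanted
        return wanted


# Illustrative numbers only.
company_a = Unit("Company A", {"person": 100, "truck": 10})
company_b = Unit("Company B", {"person": 90, "truck": 8})
battalion = Unit("Battalion", {"person": 20}, [company_a, company_b])

before = battalion.holdings()
detachment = company_a.detach({"person": 30, "truck": 4})
residual = battalion.holdings()
```

Once every person and truck is an item in the structure, the residual capability of the parent unit is a computation rather than a manual tally.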

Preparing for Tomorrow’s AI

In his foreword to the Department of the Navy Innovation Vision report, then–Secretary of the Navy Ray Mabus observed, “It is clear that innovation is not just about buying a new platform or weapon system; rather it is about changing the way we think.” The Innovation Vision goes on to state, “Sharing information across organizational boundaries enables innovation to thrive. The [Navy] will integrate technology and learn from other organizations’ best practices to maximize the value of our existing information and become a learning organization by mastering the information cycle.”

Today’s DoD data silos and stovepipes are largely the result of people with the best of intentions developing “one-off” frameworks for their unique domains. Sharing data and information across the naval services and DoD to create an effective “learning organization” capable of mastering the information cycle depends on providing easy access across the enterprise to the basic theories and methods of categorization. Successful cultures of innovation emerge from the creative energies of individuals and teams working across an enterprise, recognizing the lessons learned from previous breakthroughs and building upon them.
