by Trey Herr

Source: Simon Handler, Trey Herr, and John Eric Goines


Source: Lily-Zimeng Liu19


Executive summary

Cloud computing is more than technology and engineering minutiae; it has real social and political consequences. Cloud services are becoming the battleground for diplomatic, economic, and military disputes between states. Companies providing these cloud services are substantially impacted by geopolitics. This paper pokes holes in four recurrent myths about the cloud to provide actionable advice, intended to increase the transparency and security of the cloud, to policymakers in the United States and European Union and practitioners in industry.

Commerce and Trade: Amazon retains a lead in the cloud market, but it, Microsoft, and Google are pushing products friendly to data and infrastructure localization that might well undermine the economics of their business in years to come.

National Security: If the United States and China are battling over the security of 5G telecommunications technology, which is still barely in use, what kind of risk will this great-power competition pose for cloud computing?

Tech Policy: Cloud providers like Microsoft and Amazon are seeing userbases grow so large, and include ever more government users, that they are increasingly host to cyberattacks launched and targeted within their own networks.

Keys

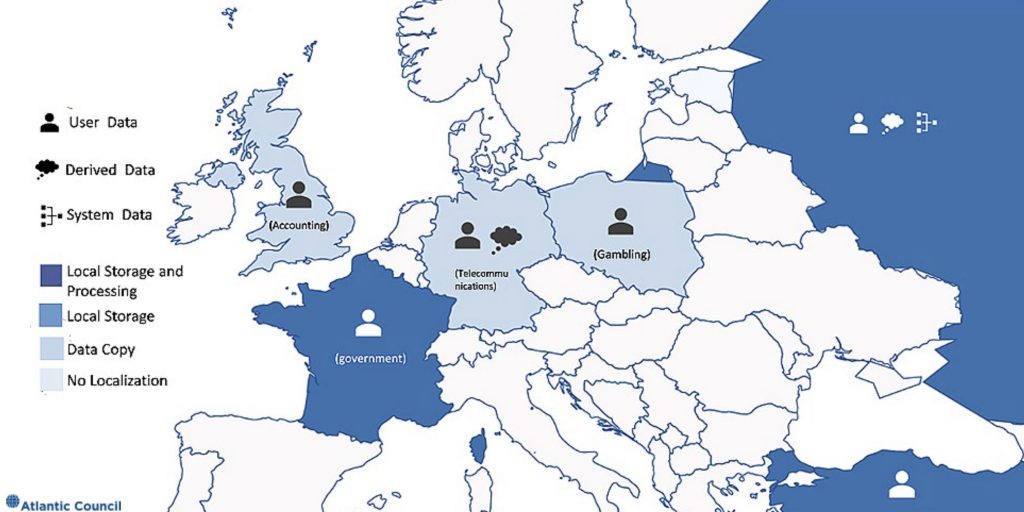

Data localization requirements being implemented in many countries in the European Union and Asia lack clear distinction between three major types of data cloud providers collect—user (files, emails), derived (websites visited, advertisements clicked), and system (how the provider’s computers and network respond to use—e.g. heat, lag time, bandwidth used).

Cloud computing is a source of supply chain risk for users and a significant risk management problem for cloud companies themselves. Many cloud providers' core risk management practices are less transparent to customers than in other areas of security.

The public cloud, accessible around the globe, provides the economic rationale behind cloud computing—many users sharing the cost of the same infrastructure. More than just Russia or China, democratic states are undermining the public cloud; the United States, United Kingdom, Australia, and Germany have all demanded some version of private cloud for varying uses and the European Union is pursuing a potentially sovereign European cloud—GAIA-X.

While Twitter and Facebook influence the information we see, the US hyperscale cloud providers, Amazon, Microsoft, and Google, are reshaping the internet over which this information travels.

Introduction

Cloud computing providers are more than companies—they govern vast utility infrastructure, play host to digital battlefields, and are magnificent engines of complexity. Cloud computing is embedded in contemporary geopolitics; the choices providers make are influenced by, and influential on, the behavior of states. In competition and cooperation, cloud computing is the canvas on which states conduct significant political, security, and economic activity.

This paper offers a brief primer on the concepts underneath cloud computing and then introduces four myths about the interaction of cloud and geopolitics. First, that all data is created equal: a discussion of how cloud providers build and operate these data-intensive services and the impact of debates about how and where to localize these systems and their contents. Second, that cloud computing is not a supply chain risk: cloud providers play host to some of the most remarkable security challenges and widely used technical infrastructure in the world, and their decisions impact the supply chains of millions of users and entail management of risk at sometimes novel scale. Third, that only authoritarian states distort the cloud: a pernicious myth, one that continues to hold back a cogent Western strategy to defend the open internet and threatens to upend the economics supporting the public cloud. Fourth, that cloud providers do not influence the shape of the internet: this final section highlights both risk and opportunity for the internet, which run much deeper than speech controls and content takedowns.

Understanding the geopolitics of cloud computing demands that we approach select companies and treat them like states, understanding their influence exists in the domain of technology, society, economics, and politics, if not the most visible forms of warfare. The influence of cloud is more than theoretical and has significant implications for policy making across trade, foreign policy, national security, as well as technology policy. The myths we tell ourselves about these interactions risk distorting our perception of the events in front of us.

There is no cloud, just other people’s computers

Cloud providers, in effect, rent out computers and networks to users around the world, from Fortune 500 companies to individuals. Providers build new services on top of their computing resources, like accessible machine translation, sophisticated databases, and new software development tools. Large internet companies increasingly use these cloud services in lieu of building their own technology infrastructure. The growth of cloud computing from an academic research project to a commercial product generating billions of dollars in sales has commoditized computing capacity, storage, and networking bandwidth, and led to a new generation of data-intensive startups.

Figure 1: Global public cloud revenue 1

Cloud computing ties corporate decision-making driven by business risk even more closely to national security risk as a single provider’s supply chain decisions and internal security policies can impact millions of customers. This dynamic recalls the “era of big iron,” when room-sized mainframes built by a handful of powerful firms were how most users accessed a computer. The language of that era persists today: the vast networks of servers that cloud providers build and operate are similarly cloistered in specialized and well-protected rooms, concentrated under a handful of corporate giants.2 The decisions these giants take about what technology to buy, build, and operate shape the technical environment for an increasing number of government and sensitive corporate entities.

Figure 2: IaaS & SaaS, 2019 public cloud market share 3

These changes in technology have had political ramifications as the growing clout of major cloud service providers causes friction between regulatory models developed for personal computers and servers located in one jurisdiction and a cloud infrastructure that is globally distributed. As ever larger numbers of customers, including intelligence and security agencies, move their data and operations into cloud services, concerns arise over where the infrastructure underneath these services is built and how it is administered. Regulation of the different types of data in the cloud creates flashpoints and misunderstanding between companies and governments. Add to that a healthy skepticism from non-Western states about the dominance of US cloud providers, and the conditions are ripe for friction.

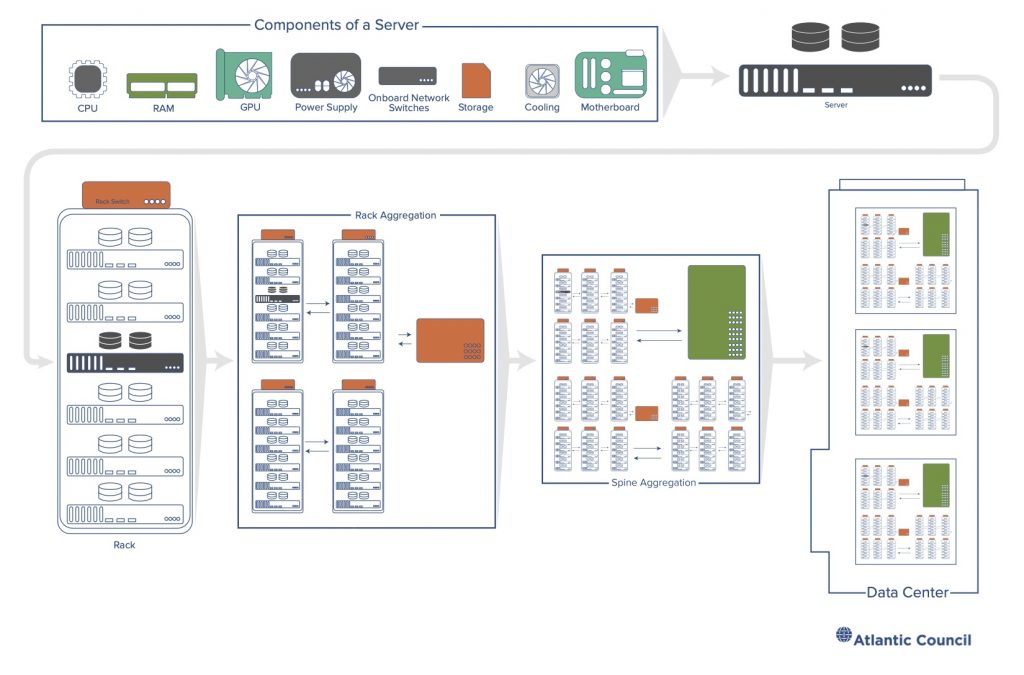

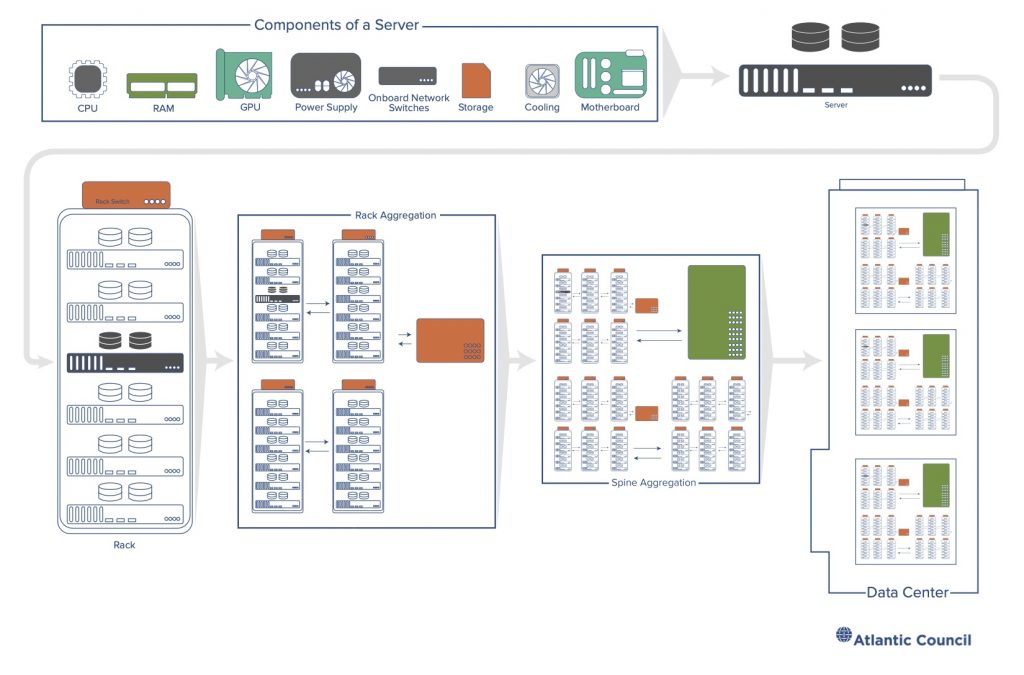

An unfortunate amount of material written about cloud computing discusses it with the awe reserved for magical woodland creatures and general artificial intelligence. In contrast, cloud computing is quite real—manifest as miles of metal racks housing sophisticated electronics connected by planetary-scale fiber and radio data networks all supported by specialist teams and massive cooling sources. The term cloud comes from networking diagrams where a system being described had a link to some far away set of computers, a line drawn up to the corner of the page toward a bubbly figure representing the “other.” This bubbly cloud-like image became shorthand for computers and network services that were not in the scope of the diagram itself but remained accessible. Caring for these ‘fleets’ of machines demands constant attention and adjustment. Even the best run processes can suffer embarrassing failures, like a broken Google update that caused a temporary outage4 through large swaths5 of North America in June 2019, or a lightning strike6 at a Microsoft data center that hobbled7 Active Directory company-wide for hours.

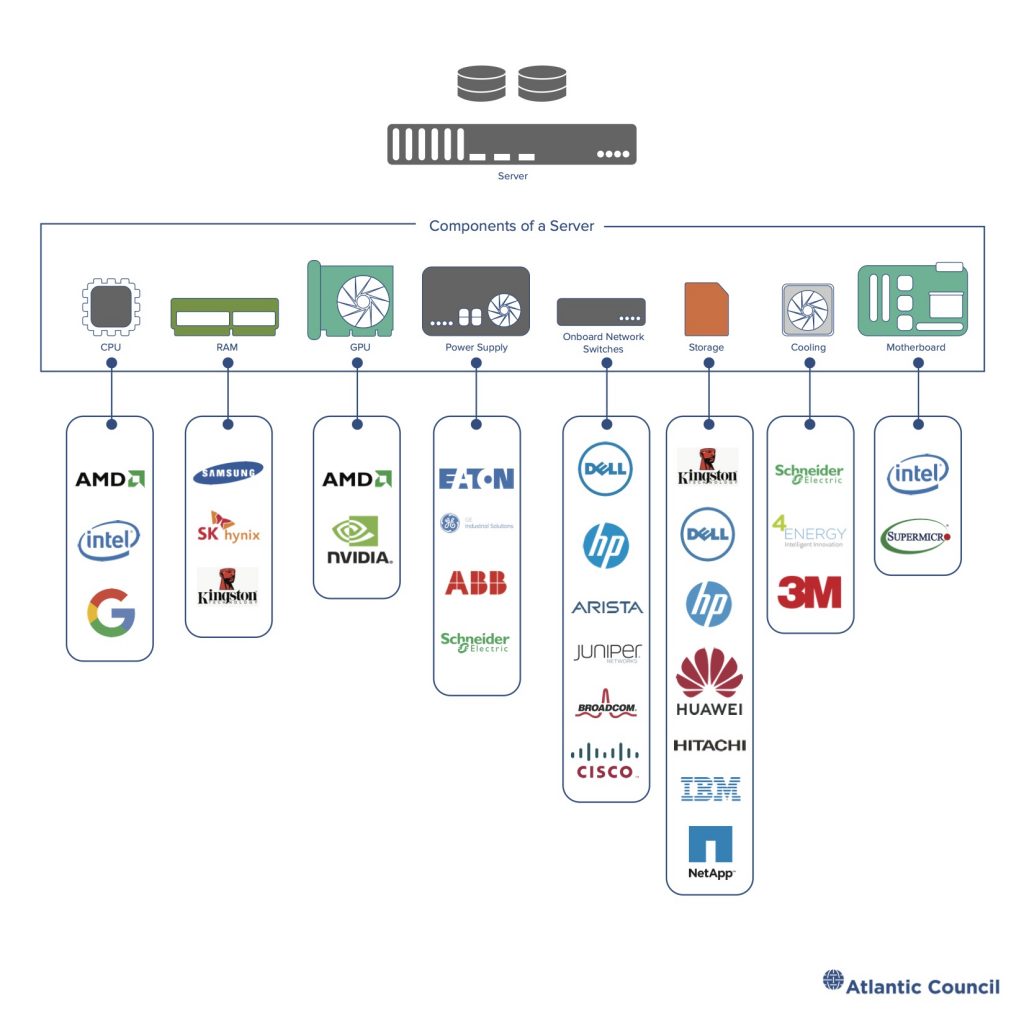

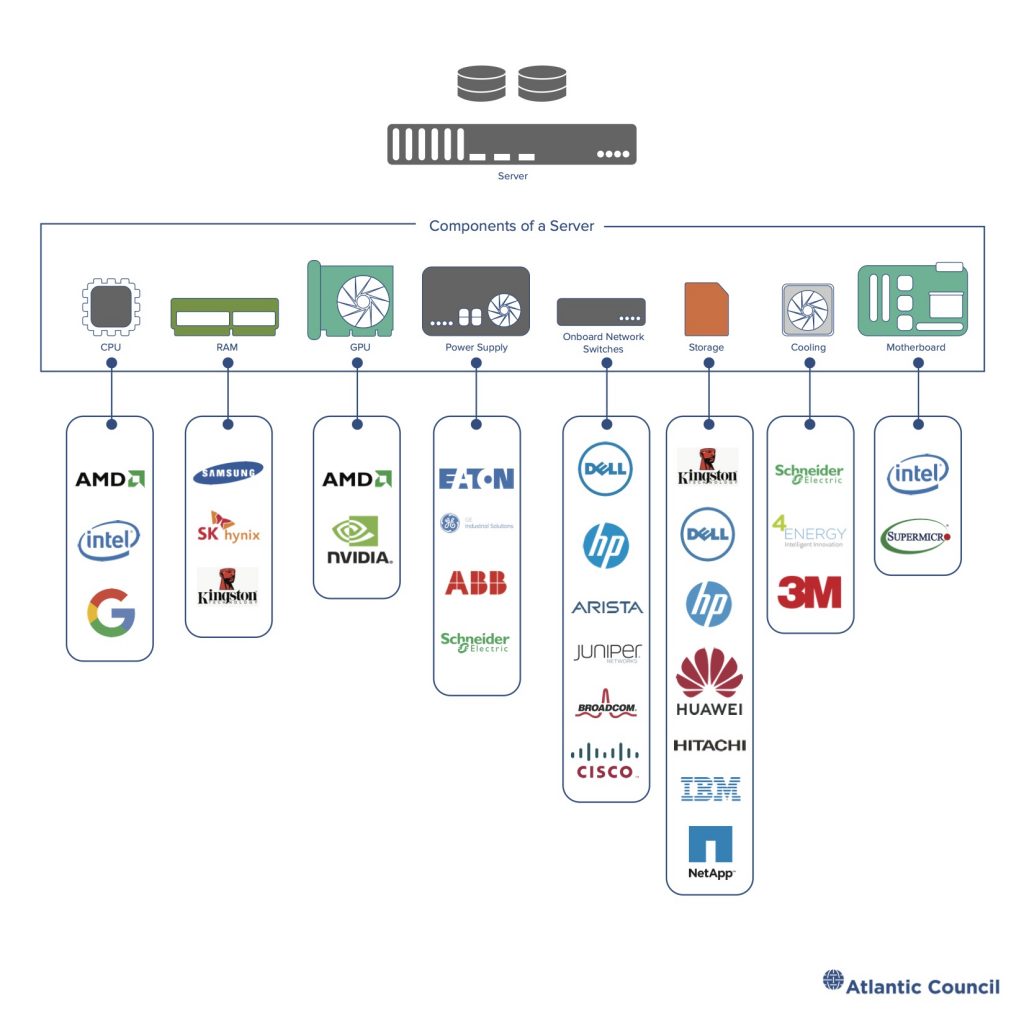

Figure 3: Components of a data center Source: Tianjiu Zuo and John Eric Goines


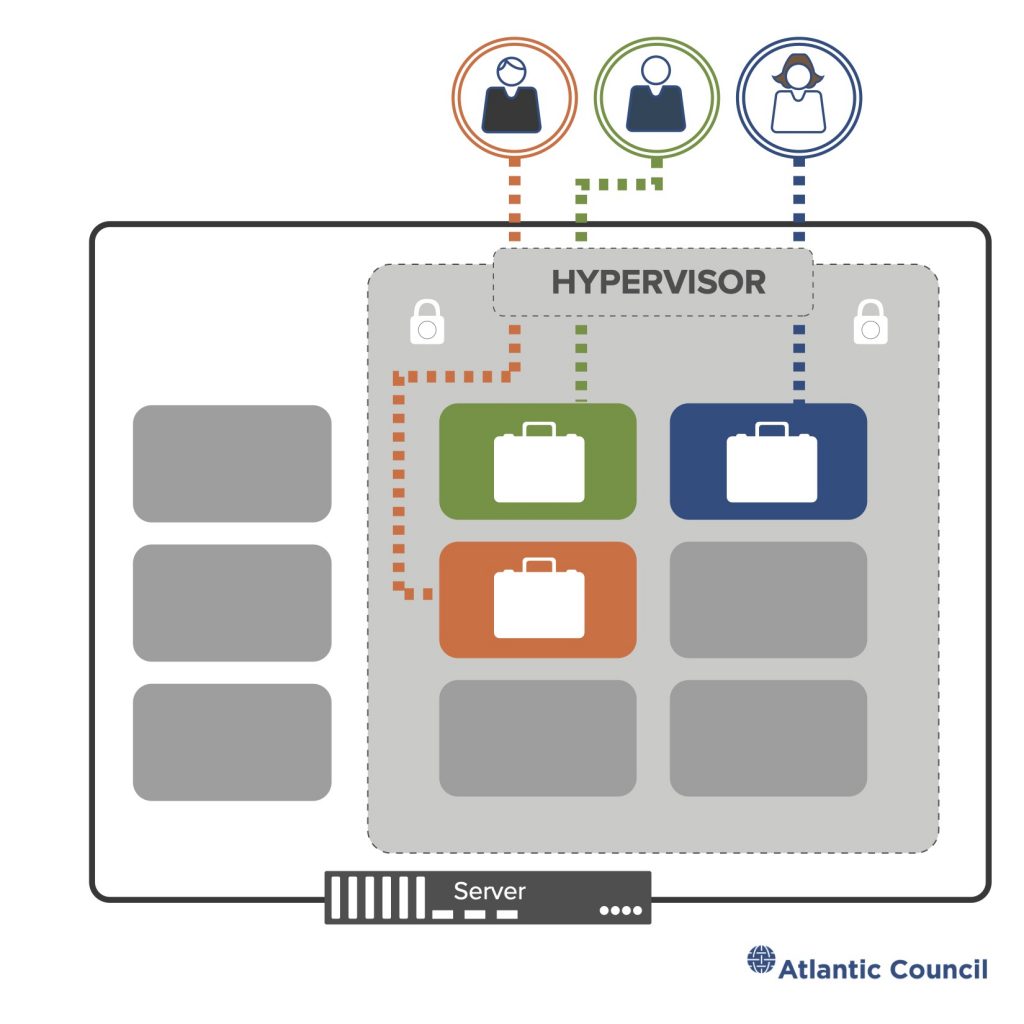

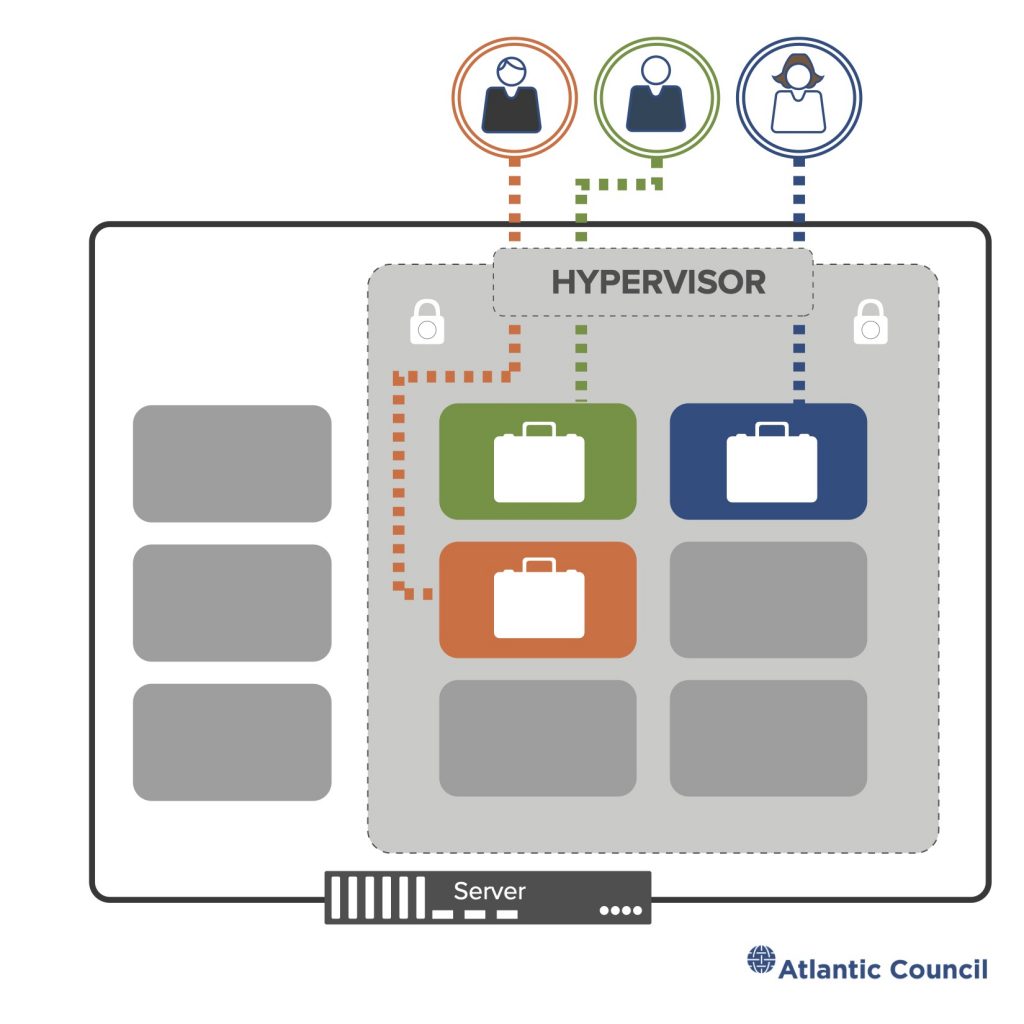

At the root of the majority of cloud computing is the shared services model, where many users reside on a single physical machine.8 Multitenancy is the term used to describe shared use, while the technology that makes it possible is called a hypervisor: software that supervises a computer and divides up its resources—processor time, memory, storage, networking bandwidth, etc.—like cake at a birthday party where every partygoer is blindfolded. Everyone gets to enjoy their slice of cake, unaware of those around them enjoying their own portions, too. The hypervisor keeps each user separate, giving them a turn to use the computer while creating the appearance that each is alone on a single machine. The hypervisor is critical to keeping users isolated from one another. Flaws in the hypervisor software can enable attackers to escape from their slice of the computer into that of other users or, worse, into the host machine’s operating system controlled by the cloud provider.

Figure 4: Illustrating the multi-tenant model

Source: Simon Handler, Trey Herr, and John Eric Goines
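The multi-tenant model described above can be made concrete with a toy sketch. It is only an illustration of the two ideas in the text, taking turns on one machine and keeping each tenant's slice private, and it is not how any real hypervisor is built. The class name `ToyHypervisor`, its methods, and the tenant names are all hypothetical.

```python
from itertools import cycle, islice


class ToyHypervisor:
    """Toy model (hypothetical): shares one machine among isolated tenants."""

    def __init__(self):
        # tenant name -> that tenant's private key/value store (its "slice")
        self._memory = {}

    def add_tenant(self, name):
        self._memory[name] = {}

    def write(self, tenant, key, value):
        # Writes land only in the calling tenant's own slice.
        self._memory[tenant][key] = value

    def read(self, tenant, key):
        # A tenant can only look inside its own slice; a key stored by
        # another tenant is simply not found (returns None).
        return self._memory[tenant].get(key)

    def schedule(self, time_slices):
        # Round-robin: each tenant gets a turn on the processor in order.
        return list(islice(cycle(self._memory), time_slices))


hv = ToyHypervisor()
hv.add_tenant("tenant_a")
hv.add_tenant("tenant_b")
hv.write("tenant_a", "doc", "design files")

print(hv.read("tenant_a", "doc"))  # the owner sees its own data
print(hv.read("tenant_b", "doc"))  # the neighbor sees nothing
print(hv.schedule(5))              # turns alternate between tenants
```

A hypervisor flaw of the kind the text warns about would correspond, in this toy, to any path by which one tenant could reach another tenant's entry in `_memory`.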


In a cloud service, each of these computers runs additional software selected by the cloud provider and user and each is tied into a network. By building services which use these networked machines, cloud providers can take storage at a facility in Frankfurt, match it with processing in Texas, and deliver the result to a user in Tokyo. Web mail, search results, streaming video, photo storage and sharing, the computation that makes a digital assistant responsive to your voice—all of these are services layered on top of the cloud. In industry parlance, there are three basic models of cloud service:

Infrastructure as a Service (IaaS): These are the raw computing, storage, and networking elements that users can rent and consume like a service rather than a product but must largely set up and configure themselves. For example, renting a virtual machine to host an email server.

Platform as a Service (PaaS): This is the diversity of software and online services built on top of the cloud. Users access these services without managing the underlying infrastructure. For example, the machine learning service an aircraft engine manufacturing company integrates into its products to predict when they will fail.

Software as a Service (SaaS): These are the online services that require no deep administration from the user. These services are offered without substantial ability to rewrite, rebuild, or reintegrate them like PaaS. For example, sharing documents online or the image recognition service a hospital uses to identify tumors in a CT scan.

There are hundreds of cloud companies, most selling services in one model. A handful compete in all three and the largest of these are referred to as the hyperscale providers: Microsoft, Google, Amazon, and Alibaba. Cloud computing is an expanding constellation of technologies: some old, some repurposed, and some wholly new. Much of the innovation in cloud is in managing these fleets of machines and building the vast networks required to make them accessible for users, rather than a single snazzy new product or feature. There is no one single model of cloud computing. The major providers all build their infrastructure in slightly different ways, influenced by market strategy and legacy technology investments, but the abovementioned three models help categorize what one might find in the cloud.

Deploying hybrid cloud

Driven by specific business or regulatory demands, or by organizational discomfort with the cloud model, some users combine public cloud services with locally managed infrastructure. This half cloud, half local hybrid model varies by provider but generally finds cloud services deployed alongside a user’s existing infrastructure to run the same software as in public cloud data centers. Those data centers hold huge amounts of hard disk storage, allowing the companies that operate them to capitalize on economies of scale, storing more data for similar costs in on-site maintenance and connectivity infrastructure. The cloud services and the local infrastructure work alongside each other and give users access to some or all cloud services while still allowing them to run their own equipment. Hybrid cloud can preserve technical flexibility by allowing organizations to retain other equipment or allow a slower transition from self-run data centers to full use of a public cloud. It also involves some compromise on the economic model behind cloud, premised on widest possible use of shared infrastructure, and requires additional administrative capacity and competence from users. Hybrid cloud serves a real business interest, helping transition less cloud-friendly users, but has a real political benefit as well: making data localization easier. Hybrid cloud allows for sensitive services or data to be physically located in a specific jurisdiction, while remaining linked to public cloud services. This hybridity comes with additional cost, and large-scale deployments can be more technically complex while posing additional security risks as more ongoing technical and policy decisions are shared between user and provider.

Security in the cloud is similarly a mix of the old and new. Old are the challenges of ensuring that users, and not malicious actors, have access to their data, and systems are protected against myriad integrity and confidentiality threats that range from distributed denial-of-service (DDoS) attacks to power outages. New is the need to do all of this across tens of thousands of domains and millions of users every day; cloud presents an enormous challenge of the scale on which these longstanding security functions need to take place. This has driven increased automation and more user-friendly tools but also created recurring gaps9 where the user and cloud provider are not in sync10 about each other’s security responsibilities.

Similarly, new is cloud providers playing host to a growing domain of conflict. There are instances where the origin and destination of an attack occurred in infrastructure owned by the same cloud provider; attacker and defender using the same cloud and observed (possibly interdicted) by the cloud provider. As ownership of information technology (IT) infrastructure concentrates, so does exposure to what two US academics labeled the “persistent engagement” of cyberspace—with fewer and fewer major providers, there is a higher likelihood of engagements that start and end within the same network.11 Cloud providers have to balance the responsibilities of their global customer base with the demands of their home governments. These providers are put in the position of arbitrating between their terms of service and security commitments to customers and national intelligence and military activities taking place in their infrastructure, creating a tangled web of business and national security risk.

Examining four myths

The challenge with telling any story about cloud computing is avoiding spectacle and hyperbole. The cloud will revolutionize, energize, and transform! Much of what is in the public domain about cloud computing comes from marketing material and is thus a creative interpretation of the truth.

Myths are useful in the telling of a story. They simplify complexity and add drama to otherwise mundane events. But myths can become a lie when repeated over and over. In cybersecurity, myths come from all parties: naïve regulators, fearful companies, excitable marketing, and collective misunderstanding. The myth at the heart of the cloud is that only technical factors determine how these services are delivered and how their infrastructure is built.

On the contrary, political realities exercise an ever greater influence over how the cloud is built, deployed, and used in global businesses and security enterprises. External forces, like pressure to store data within a particular jurisdiction or concerns over a supply chain, are equal in weight to internal forces as the concentration of ownership in cloud computing raises significant questions about the democratic accountability of technology. To shed light on the geopolitics of cloud computing, this issue brief examines the following four myths of the cloud:

All data is created equal

Cloud computing is not a supply chain risk

Only authoritarian states distort the public cloud

Cloud providers do not influence the shape of the internet

These are not the only myths about cloud computing, but they are some of the most persistent, persuasive, and unrealistic.

Myth 1: All data is created equal

It is challenging to talk about cloud computing without discussing data. For all its metal and concrete infrastructure, snazzy code, and marketing materials, cloud computing often comes down to managing huge volumes of data. Three categories are helpful:

User data, what the customers of a service store in the cloud: emails, tax files, design documents, and more.

Derived data, which allows cloud providers to learn about how users access and interact with these files: which documents do users from an office in Berlin tend to access first and should they be stored nearby to reduce latency?

System data, or what cloud providers learn about their systems from the way users consume services: what causes a spike in processor utilization or a drop in available bandwidth to a data center or a security alert for malware?12

Figure 5: Illustrating data types in the cloud Source: Lily-Zimeng Liu and John Eric Goines


User data was the subject of intense attention from the European Union’s General Data Protection Regulation (GDPR) resulting in changes by cloud providers. These changes have manifested in new tools and policies for users to limit access to their data, determine where in the world it can be stored, and transport it between cloud providers should the need arise. User account information—details like name, address, payment method, and what services the user consumes—is often given separate treatment from user data as it provides valuable information about user preferences and can be important to trace malicious behavior.

Derived data is incredibly rich and varied: what a Facebook user clicks on depending on the time of day, their most frequently liked posts, or how many times they start but delete a comment. Derived data about user behavior is the secret sauce behind most “behavioral-analytics” security tools and it powers the advertising machine at the heart of Google/Alphabet’s revenue engine: YouTube viewing habits, faces appearing together in photos, even the text of emails on the widely used Gmail. All of this data tells a story about users and their habits, beliefs, dreams, and desires, a story which is a commodity.13

For cloud providers, this derived data can help understand which services succeed or fail, how to more precisely price offerings, and where or when to introduce new features. Investigations and security responses produce even more data, combining the activities of multiple customer accounts to track an attacker as it moves across the provider’s infrastructure. The result is extensive privacy training and data security measures within at least the three largest US cloud providers—Amazon, Google, and Microsoft. Security-focused staff at these providers are subject to additional controls on data retention, restrictions on sharing data, and regular privacy training. Some of these controls impose difficult limits on how long data can be retained. If a recently detected cyberattack on a cloud service is the product of months of careful reconnaissance and infiltration by the attacker, limits on retaining data no longer than 90 days can make investigations to determine the source of the breach highly challenging.

Derived data for security: The password spray

Cloud providers use derived data for numerous security functions. All of the intelligence a company like Amazon gathers about its services (which customer accounts access the same data, how many times a user has logged in or tried the wrong password) serves as signals in the nervous system of the cloud.14 These signals are combined to understand “normal” behavior and identify malicious activity. Repeatedly attempting to log into the same account with many different passwords is an old way to try to break in. Many attackers now employ “password sprays,” attempting to access a large number of accounts with just a few commonly used passwords.15 A cloud provider could track many or all of those attempts even if they take place across different users, companies, and accounts. Since the cloud provider builds and operates the infrastructure, it knows much of what goes on within, allowing it to combine data from hundreds of users to identify a single attacker or malicious campaign.
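To illustrate how signals from many accounts might be combined, here is a minimal sketch of a password-spray heuristic. It is not any provider's actual detection logic. The event format, the function name `find_password_sprays`, and both thresholds are assumptions chosen for illustration, and real systems would weigh far more signals than these two.

```python
from collections import defaultdict


def find_password_sprays(failed_logins, min_accounts=3, max_passwords=2):
    """Flag sources that try few passwords against many accounts.

    failed_logins: iterable of (source, account, password_hash) tuples.
    The tuple layout and thresholds are hypothetical, for illustration only.
    """
    accounts = defaultdict(set)   # source -> distinct accounts targeted
    passwords = defaultdict(set)  # source -> distinct passwords tried
    for source, account, password_hash in failed_logins:
        accounts[source].add(account)
        passwords[source].add(password_hash)
    # A spray looks "wide and shallow": many accounts, few passwords.
    return sorted(
        source
        for source in accounts
        if len(accounts[source]) >= min_accounts
        and len(passwords[source]) <= max_passwords
    )


# Made-up events spanning several customers of the same provider.
events = [
    ("198.51.100.7", "alice@a.example", "hash1"),
    ("198.51.100.7", "bob@b.example", "hash1"),
    ("198.51.100.7", "carol@c.example", "hash2"),
    # Old-style guessing: one account, many passwords. Not a spray.
    ("203.0.113.9", "dave@d.example", "hash3"),
    ("203.0.113.9", "dave@d.example", "hash4"),
    ("203.0.113.9", "dave@d.example", "hash5"),
]

print(find_password_sprays(events))  # ['198.51.100.7']
```

No single customer in this example sees more than one failed login from the first source, which is why the pattern is only visible to a party that can observe all of the accounts at once.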

System data is everything a cloud provider can learn about its own systems from how they are used: how a server heats up in response to sustained requests for new video links or network traffic associated with a particularly massive shared spreadsheet. System data includes technical information like the network routes a particular server uses to move data between facilities, logs of all the network addresses a single machine tries to access, results of testing for the presence of unverified or disallowed code, or the temperature in front of a rack in a data center. This kind of data is rarely tied to a specific user and is likely to be accessed by more specialized teams like Microsoft’s Threat Intelligence Center16 or Google’s Threat Analysis Group.17 Much of the derived and system data created are quickly destroyed, sometimes after only a few days or weeks. At the mind-boggling scale of global cloud providers, even the cost of storage becomes a limiting factor in how long data lives.

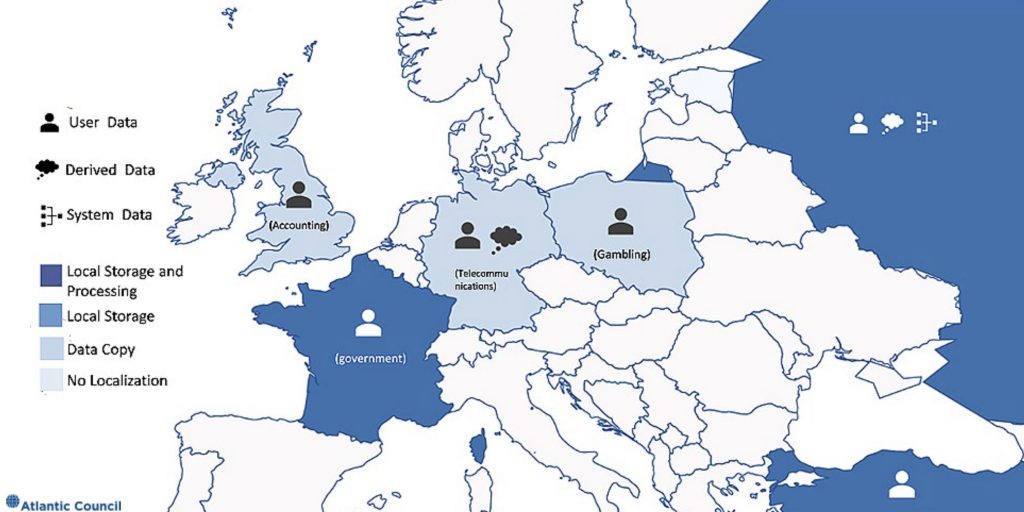

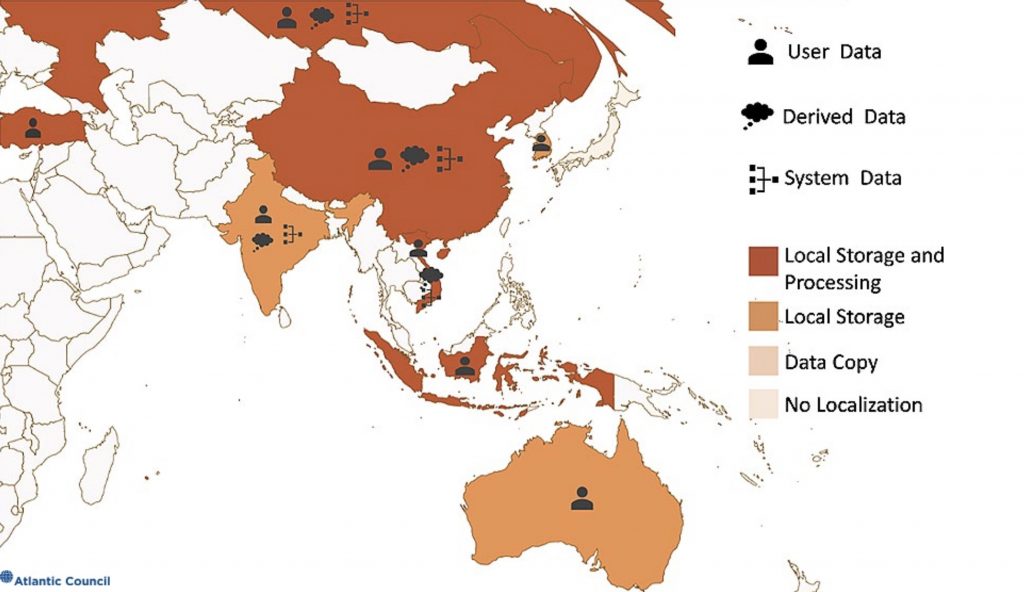

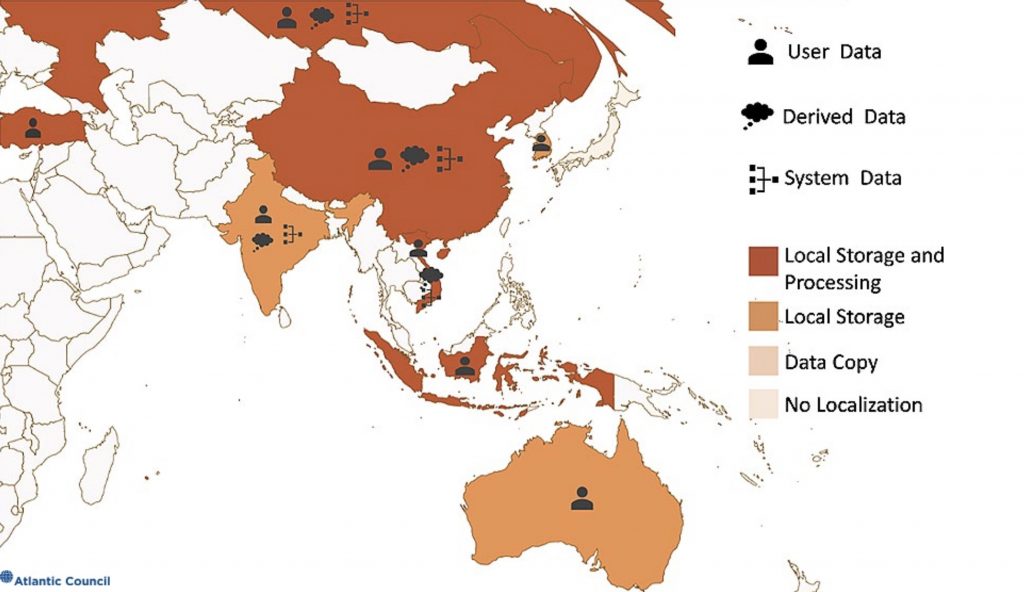

Differentiating between these types of data and their common uses becomes significant for debates about where this data should be located. One of the most frequent debates about cloud computing, especially when providers first enter a market, is where providers will store and process data. Efforts to force data to be hosted in a single jurisdiction are collectively referred to as data localization.18 Each of these data types serves a distinct business purpose within cloud providers that is rarely captured with nuance by localization requirements. Many localization requirements focus on user data, yet many of these same requirements state their intent is to restrict the widespread misuse of data in online advertising which typically leverages derived data. The most difficult category of the three types of data for providers to specifically locate is system data and yet this is rarely carved out from localization requirements.

Source: Lily-Zimeng Liu19


Figure 7: Data protection laws in Asia and Australia Source: Lily-Zimeng Liu20


Two laws illustrate the challenge of ignoring different types of data and the workings of the public cloud. Vietnam passed a new and expansive data localization requirement in 2018 for all manner of internet service firms, including cloud providers, to store Vietnamese users’ data in Vietnam for a period of time defined by the government. The law also requires that any processing, analysis, or combination of this data take place on Vietnamese soil.21 This produced a flurry of protests from cloud providers without giving Vietnamese authorities information about how to address their chief underlying concerns about user data. In contrast, in October 2019, Indonesia modified a longstanding requirement that mandated internet service companies locate their data centers in the country. The rule is now limited to entities that provide public services and exempts those that use storage technology that is not available in Indonesia.22

The Indonesian law focused on data centers—linking the location of infrastructure with the location of data.23 Given how the public cloud model can locate processing and storage of data in widely disparate locations, data could be copied to this local infrastructure while processed and stored elsewhere. Even if user data was covered by the law, it is not clear that derived or system data would be, the latter in particular because it is largely generated by the provider about itself. The Vietnamese law, in contrast, appeared to cover all three types of data with its blanket restrictions on processing and data combination. This implied where infrastructure might be located and further limited what cloud providers could do with what Vietnamese users stored on their service and what the provider learned about these users.

Some governments look to localization to help improve the security of their users’ data despite little evidence to support the premise, and even evidence to challenge it. Forcing cloud providers to build variants of otherwise standard infrastructure designs and operating models could introduce unknown flaws or gaps in security practices. The public cloud is premised on global networks of computing and storage resources, not isolated local clusters. While data centers and associated power/cooling infrastructure do have to be located in a specific physical location, the ability to pass derived data about malicious activities and track abnormal events across the world is where cloud can improve users’ security. The same is true of user and system data—linking data centers in different parts of the world can provide more redundant backups and force providers to maintain consistent security and operational practices across these facilities. Figure 8 displays the location of every publicly acknowledged data center from the five listed cloud providers as of late 2019. Many of these are clustered around major cities and internet exchange or cable landing points.

Figure 8: Global cloud data center map 24 25

Cloud providers have evolved26 their policies27 and tools28 in light of demands for greater control and new regulatory requirements. This is progress and it reflects no small amount of investment on the part of these companies, especially the hyperscalers. But it also reflects a baseline expectation—users own their data and control should follow with ownership. It can be challenging to keep that linkage of ownership and control tight across globally distributed networks and constantly evolving infrastructure, but it is table stakes as cloud becomes the default model for much of computing.

Localization is the visible tip of a much larger debate about how to govern data, including its use, storage, retention, combination, and lawful access by governments; it is a debate over whose values should and will govern data and how those values will be enforced internationally. As the cloud grows more common and sophisticated in widespread use, countries must rapidly evolve policies to avoid a gap between technical design and commercial practice on one side and security and normative priorities of society on the other. Initially, this gap is a business cost to cloud providers that may pale in comparison to data privacy or national security concerns. Over the long run, such a gap has the practical effect of distorting the economic and technical underpinnings of the public cloud. Isolating markets through localization requirements makes new cloud infrastructure less cost-effective. This, in turn, reduces the incentive for manic competition that helps drive companies like Google to commit billions of dollars to update products, offer interesting services, and build in better security as a way to compete with larger rivals like Amazon and Microsoft.

Figure 9: Relative global data center count by provider/region

Table 1: Global data center count

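| Region | Total | Alibaba | Amazon | Google | IBM | Microsoft |
|---|---|---|---|---|---|---|
| Total | 387 | 60 | 76 | 73 | 60 | 118 |
| East and Southeast Asia | 133 | 49 | 21 | 24 | 8 | 31 |
| North America | 119 | 4 | 25 | 25 | 28 | 37 |
| Europe | 90 | 4 | 18 | 18 | 18 | 32 |
| Oceania | 20 | 2 | 3 | 3 | 4 | 8 |
| South America | 10 | 0 | 3 | 3 | 2 | 2 |
| Middle East | 8 | 1 | 3 | 0 | 0 | 4 |
| Africa | 7 | 0 | 3 | 0 | 0 | 4 |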

Source: see above, map
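As a small worked example, the counts in Table 1 can be used to compute how concentrated each provider's footprint is in a given region. The numbers below are transcribed from the table. The variable and function names are illustrative assumptions, and the calculation says nothing about data center size or capacity, only about counts.

```python
# Counts transcribed from Table 1; column order follows the table header.
PROVIDERS = ["Alibaba", "Amazon", "Google", "IBM", "Microsoft"]
DATA_CENTERS = {
    "East and Southeast Asia": [49, 21, 24, 8, 31],
    "North America": [4, 25, 25, 28, 37],
    "Europe": [4, 18, 18, 18, 32],
    "Oceania": [2, 3, 3, 4, 8],
    "South America": [0, 3, 3, 2, 2],
    "Middle East": [1, 3, 0, 0, 4],
    "Africa": [0, 3, 0, 0, 4],
}


def provider_totals(table):
    """Sum each provider's column across all regions."""
    return {
        provider: sum(row[i] for row in table.values())
        for i, provider in enumerate(PROVIDERS)
    }


def regional_share(table, region):
    """Fraction of each provider's data centers located in one region."""
    totals = provider_totals(table)
    return {
        provider: table[region][i] / totals[provider]
        for i, provider in enumerate(PROVIDERS)
    }


totals = provider_totals(DATA_CENTERS)
asia = regional_share(DATA_CENTERS, "East and Southeast Asia")
for provider in PROVIDERS:
    print(f"{provider}: {asia[provider]:.1%} of {totals[provider]} data centers")
```

By these counts, roughly four-fifths of Alibaba's listed data centers (49 of 60) sit in East and Southeast Asia, compared with about a quarter of Microsoft's (31 of 118).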

Who should care?

Regulators around the world must address how they govern the different types of data used by cloud providers. The alternative is costly breakdowns in the public-private partnership over cloud security and deployment where companies opt out of smaller markets and offer services which are more costly and less flexible in larger ones. Innovation requires investment and attention to the rules that govern intellectual property ownership, access to data and requisite research facilities, and the ease of bringing new ideas to market, among many other factors. Many of the US firms under fire in Europe have made their business monetizing data derived from their users because it was possible, profitable, and popular. These conditions, however, may be changing. The European Union (EU) would do well to create a robust framework for data governance, including limiting requirements for localization in the EU, that facilitates the next generation of internet entrepreneurs rather than assailing the exemplars of this one (looking at you, France29).

Recommendations

[White House] Do not forget about machine learning. Finally appoint a chief technologist to the Federal Trade Commission. Charge this person with leading a multistakeholder group to define a policy framework for the status of machine learning models, their outputs, and associated data, including legal ownership and classification under major data governance regimes. Work with Congress to implement key portions of this framework into law and help lead the global policy debate.

[The European Union Agency for Cybersecurity] ENISA should develop an updated Network and Information Security (NIS) Directive and rules following on the Cybersecurity Act to ease the adoption of the cloud in regulated industries and security-specific national agencies. This should build on the work of the EU’s Cloud Service Provider Certification (CSPCERT) Working Group and identify rules which could be rolled back or revised.

Myth 2: Cloud computing is not a supply chain risk

Supply chain policy has been dominated by a focus on telecommunications, especially 5G, over the past several years. Efforts by the United States to block certain technology providers, and to push others to do the same, emphasize the risk posed by allowing untrusted hardware and software into “core” networks, the broad public utility of 5G, and the significance of trusted telecommunications services for both public and private sectors well into the future.

Cloud computing poses a similarly broad and far more immediate source of supply chain risk. Indeed, many cloud service providers are moving into the 5G market, partnering with traditional telecommunications firms, while others take advantage of the increasing virtualization of telephony hardware into software; Amazon is partnering with Verizon to provide 5G services30 and Microsoft acquired virtualized telecommunications provider Affirmed Networks earlier in 2020.31 Moreover, several cloud providers are effectively telecommunications companies themselves with large global networks of undersea and overland fiber optic cables.32

Cloud providers buy and maintain vast computing infrastructure and host customers around the world. They are targeted by the same diversity of sophisticated threats that target telecommunications firms. Intelligence and defense agencies across the world, along with the financial sector and nearly every Fortune 500 company, use cloud computing. Cloud computing is already a pervasive infrastructure and an important source of supply chain risk.

Major cloud providers aggregate the risk from commodity computing and networking technologies by purchasing processors, server boards, networking switches, routers, and more in galactic quantities. Each of these chips, cables, software packages, and servers comes with potential vulnerabilities. Cloud services rely on massive quantities of software, including code developed by third parties and open-source projects. As previous work has shown,33 these software supply chains are vulnerable.

Figure 10: Data center server supply chain Source: Tianjiu Zuo and John Eric Goines


Cloud providers must manage many different kinds of risk—they operate large, sometimes global, telecommunications networks; manage huge corporate enterprise IT networks; develop and maintain massive quantities of software each year; and build out large data centers stuffed with computing, network, and storage gear along with the physical plants to keep these data centers powered and cooled. The largest hyperscale providers in the West—Amazon, Google, and Microsoft—attempt to manage their risk through extensive security audits of purchased products, setting and assessing security standards for technology vendors, and detailed threat intelligence collection and reporting. Building trust in the secure design and support process of these vendors is crucial for cloud providers. In some cases, the cost or complexity of establishing this trust is too great and cloud providers bring a firm in house or even replace key vendors by building their own component devices.34 35 Nonetheless, risk can only be managed, not eliminated.

Hacking the hypervisor

Cloud computing supply chain risk is not limited to hardware or closely related software (firmware). Hypervisors, the software that allows a single computer to be used by multiple entities at once while keeping them separated, are critical to the functioning and security of cloud services. A vulnerability in a hypervisor could allow attackers to escape their virtual machine and gain access to other users or even the host server, as with the Venom flaw discovered in KVM, Xen, and the hypervisor QEMU in 2015.36 Google uses a variant of the open-source KVM, as increasingly does Amazon, which is shifting away from the also open-source Xen. As part of its switch to KVM, Amazon has deployed what it calls Nitro, a set of hardware-based hypervisor and security functions. Each function is designed into a single chip, wringing out maximum efficiency and performance.37 Microsoft runs an internally developed product called Hyper-V, which is built into Windows Server. Hypervisor escapes pose a great challenge to cloud services because this software provides the foundation for isolation between users and the provider. One vulnerability broker, responding to demand from customers, has offered up to $500,000 for vulnerabilities leading to an escape.38 There are notable examples of vulnerabilities disclosed in open39 research40 and competitions,41 which suggests that still more vulnerabilities are being used or sold without disclosure.

Cloud supply chain risk shares some important similarities with other domains. The same flaws in chips or vulnerabilities in software that would impact a cloud data center also pose risks to traditional enterprises in managing their own infrastructure. Managing the security of a large corporate network at a Google or an IBM requires many of the same decisions about investing limited resources as it does at a Goldman Sachs or a Walmart. Software supply chain matters to an open-source project just as it does to government agencies or the military.

The difference with the cloud is in its scale. Where a company running its own data centers might buy hundreds or thousands of servers every year, cloud providers are buying hundreds of thousands of servers and assembling or even designing their own. This equipment is shipped all over the world, as shown in Figure 11, to data centers owned or leased by each provider. Depending on the service model—IaaS, PaaS, or SaaS—cloud providers might make significant design and procurement decisions about every single piece of the technology stack used to provide a cloud service or share more of that responsibility with customers.
