JOSEPH DOWNING, WASIM AHMED, JOSEP VIDAL-ALABALL AND FRANCESC LOPEZ SEGUÍ

Since the start of the coronavirus/COVID-19 pandemic, the global spread of the virus has been mirrored by a global “infodemic” of false information and fake news spread across new media platforms. Months before mobile phone masts were attacked in the UK, the French ministry of public health tried to refute a rumour, spread across social media in France, that cocaine could cure the virus. This rapid emergence and spread of false information demonstrates worrying connections between public health, security and the prominence of fake news on social media, which has long alarmed scholars. Survey results from IPSOS and BuzzFeed News showed that close to 75% of Americans surveyed believed fake news during the 2016 presidential election. While it is not possible to generalise from this specific context, such publications demonstrate the dangerous persuasiveness of fake news during important political events. In the UK, during the Grenfell fire (as with the current 5G and COVID-19 conspiracy theories), celebrities and politicians were among the means by which rumours spread. This is a remarkable volte-face within academia, where new media platforms had initially been hailed as a panacea for democratisation and the spread of liberal freedoms during incidents such as the Arab Spring and the early days of Wikileaks.

However, it has been shown that we should not take claims about the ability of social media to translate into direct action in the real world at face value. Rather than social media activity leading to direct, “real world” action, scholars have argued for the inverse relationship: that it is real world action that causes a ripple effect across social media. In security studies, this reflects a long-standing tension between theories of the constructivist persuasion and the more “material” schools of thought. The tension can be expressed here as a question about the complexities of causality: do constructions of (in)security on social media lead to material forms of (in)security?

This takes on a further level of importance and complexity when one considers recent advancements in the field around questions of “vernacular security”, where (in)security is reconceptualised as interwoven with the everyday and not something handled simply by state elites. Vernacular security places increasing importance not only on the ways in which (in)security bleeds into the everyday, through factors such as the constant intrusion of surveillance technologies into public life, but also on the ways in which non-elite actors voice their own discourses around questions of (in)security, something only magnified and democratised by new media technologies.

This process has had worrying consequences during the current COVID-19 crisis in the UK, where a conspiracy theory generated and spread online, claiming that new 5G mobile technology causes COVID-19 symptoms, has led to attacks on mobile communications infrastructure. The origin of this theory demonstrates the transnational dimension of both the new media landscape and the way that fake news and conspiracy theories travel. Scholars have traced the emergence of the conspiracy theory to comments made by a Belgian doctor in January 2020 linking health concerns about 5G to the emergence of the coronavirus. As a result of this rumour, it is estimated that at least 20 mobile phone masts were vandalised in the UK alone between the 2nd and the 6th of April 2020.

Our ongoing research project has begun to investigate the conspiracy theories linking COVID-19 and 5G technology on Twitter. Our data was collected during the period in which the conspiracy theory became a trending topic on Twitter, which was towards the end of March. Our initial dataset consists of 6,556 Twitter users whose tweets contained “5GCoronavirus”, together with the replies and mentions they received from other users.

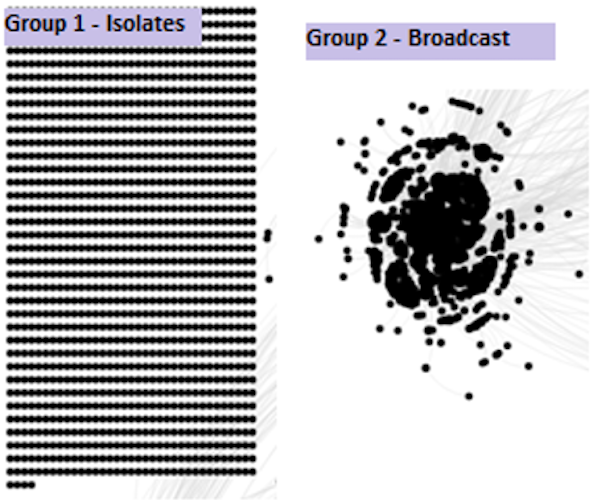

Figure 1 – Zooming in and Extracting Two of the Largest Groups Using NodeXL

We aim to use social network analysis to cluster Twitter users into different groups using NodeXL. In the figure above we have extracted two of the largest groups from the network. On the left-hand side we can see that the largest group of users was an isolate group in which users expressed their views on the conspiracy theory. An isolate group is one that contains only tweets without user-mentions. As the topic became more popular it attracted users who joined the discussion either to use the increased attention to spread unrelated web links or to ridicule those who believed the conspiracy theory. Our aim is to analyse these tweets to ascertain the percentage of Twitter users who actually believed in this conspiracy as opposed to those who ridiculed it. On the right-hand side we can see that the second largest group is densely populated, with a number of users mentioning each other with high frequency. This can be described as a broadcast group, as it appears that a core group of Twitter users were being retweeted. Broadcast networks are a typical shape for news accounts: journalists and media outlets, such as the BBC News Twitter account, will have a broadcast network shape.
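The distinction between isolates and broadcast hubs can be illustrated with a minimal Python sketch. It is not NodeXL's clustering method, and it does not use our dataset: the function name `summarise_mentions`, the `hub_share` threshold and the sample accounts are all hypothetical, chosen only to show how users who neither mention nor are mentioned by anyone differ from users who receive most of the mentions.

```python
from collections import Counter


def summarise_mentions(tweets, hub_share=0.5):
    """Split users into isolates and broadcast hubs.

    tweets: iterable of (author, [mentioned_users]) pairs.
    hub_share: assumed cut-off; a user is treated as a hub if they
    receive at least this share of all mentions (illustrative only).
    """
    in_degree = Counter()   # mentions received per user
    authors = set()         # everyone who tweeted
    connected = set()       # everyone on either end of a mention

    for author, mentions in tweets:
        authors.add(author)
        for target in mentions:
            if target == author:
                continue  # ignore self-mentions
            in_degree[target] += 1
            connected.add(author)
            connected.add(target)

    # Isolates tweeted, but neither mentioned anyone nor were mentioned.
    isolates = sorted(authors - connected)

    total = sum(in_degree.values())
    hubs = sorted(
        user for user, count in in_degree.items()
        if total and count / total >= hub_share
    )
    return isolates, hubs


# Hypothetical sample: two isolates and one heavily mentioned account.
sample = [
    ("alice", []),
    ("bob", []),
    ("carol", ["news_desk"]),
    ("dave", ["news_desk"]),
    ("erin", ["news_desk", "carol"]),
]
isolates, hubs = summarise_mentions(sample)
print(isolates)
print(hubs)
```

In this toy sample, the accounts that tweet without any mentions form the isolate set, while the account receiving most of the mentions plays the role of the core of a broadcast group. A real analysis would also need to separate retweets from replies and apply a proper community-detection step.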

Our project also aims to understand the types of information sources which were influential during this time period. The most popular web source shared on Twitter during this time was InfoWars, a popular conspiracy theory website based in the United States. The article itself linked to several videos in which ‘top scientists’ reveal how 5G could weaken a population’s immune system. This is important as InfoWars has been shown to be a source of fake news spread during other important events such as the Grenfell fire. Thus social media discourse involving fake news demonstrates the importance of persistent and “trusted” producers of fake news in generating narratives of (in)security. We also found that other websites, such as an electromagnetism protection provider and a dedicated website linking 5G and COVID-19, appeared to be influential.

This investigation is beginning to open an important stream of research for international relations scholars at the intersection of fake news, public health and new media technologies. To date, public health has been underconceptualised in the possibilities it presents as a discursive register for (in)security. This is especially important in this case given the ways in which narratives about health are spread in everyday “vernacular” language on new media platforms and translate into real world insecurity. We have given greater insight into the processes whereby social media narratives generate real world insecurity via attacks on infrastructure. Importantly, this bucks a documented scholarly trend of questioning whether activity on social media generates real world activity. This episode demonstrates the serious consequences of fake-news-driven social media activity for (in)security in the real world, as this activity is generating attacks on communications infrastructure.

This is especially pertinent given the rapid pace with which fake news is generated and disseminated in real time during unfolding events. As Downing and Dron demonstrated during the Grenfell fire, fake news was concocted and spread widely across the globe even while the fire still burned. If Churchill quipped that “a lie gets halfway around the world before the truth has a chance to put its shoes on”, this episode demonstrates that not only will a lie spread quickly, it can also result in grave physical consequences. Thus, our findings above will be of interest to public health authorities and governments around the world, who all have an interest in understanding and countering the spread of dangerous misinformation on social media platforms.
