Gregory C. Allen

In November 2012, the Department of Defense (DOD) released its policy on autonomy in weapons systems: DOD Directive 3000.09 (DODD 3000.09). Despite being nearly 10 years old, the policy remains frequently misunderstood, including by leaders in the U.S. military. For example, in February 2021, Colonel Marc E. Pelini, who at the time was the division chief for capabilities and requirements within the DOD’s Joint Counter-Unmanned Aircraft Systems Office, said, “Right now we don’t have the authority to have a human out of the loop. Based on the existing Department of Defense policy, you have to have a human within the decision cycle at some point to authorize the engagement.”

He is simply wrong. No such requirement appears in DODD 3000.09, nor any other DOD policy.

Misconceptions about DODD 3000.09 appear to extend even to high-ranking flag officers. In April 2021, General Mike Murray, the then-four-star commander of Army Futures Command, said, “Where I draw the line—and this is, I think well within our current policies—[is], if you’re talking about a lethal effect against another human, you have to have a human in that decision-making process.” Breaking Defense, a news outlet that reported on Murray’s remarks at the time, stated that the requirement to have a human in the decisionmaking process is “official Defense Department policy.”

It is not.

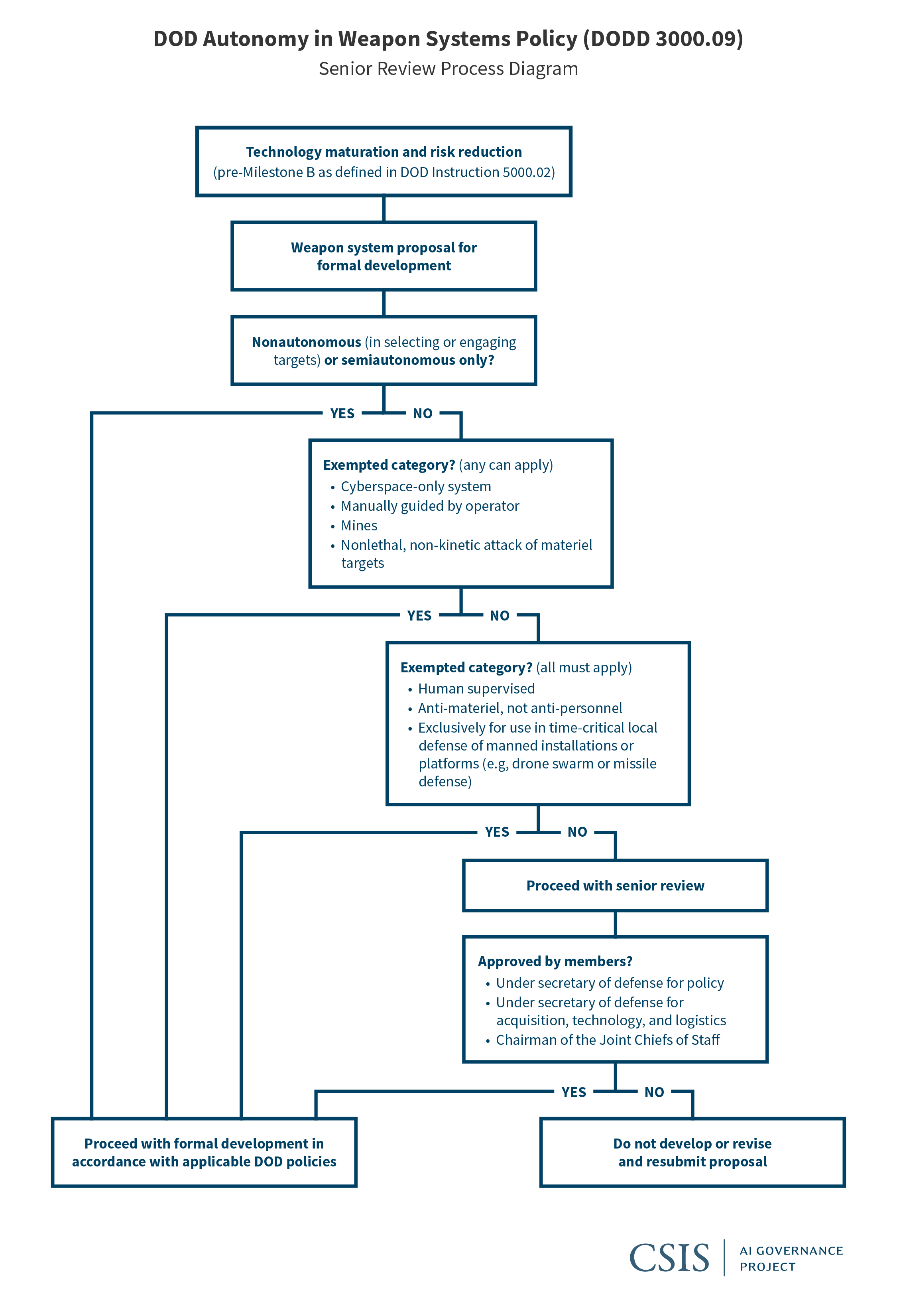

DODD 3000.09 does not ban autonomous weapons or establish a requirement that U.S. weapons have a “human in the loop.” In fact, that latter phrase never appears in DOD policy. Instead, DODD 3000.09 formally defines what an autonomous weapon system is and requires any DOD organization proposing to develop one to either go through an incredibly rigorous senior review process or meet a qualifying exemption. Cyber weapons systems, for example, are exempted.

The DOD recently announced that it is planning to update DODD 3000.09 this year. Michael Horowitz, director of the DOD’s Emerging Capabilities Policy Office and the Pentagon official with responsibility for shepherding the policy, praised DODD 3000.09 in a recent interview, stating that “the fundamental approach in the directive remains sound, that the directive laid out a very responsible approach to the incorporation of autonomy and weapons systems.” While not making any firm predictions, Horowitz suggested that major revisions to DODD 3000.09 were unlikely.

In general, this is good news. The DOD’s existing policy recognizes that some categories of autonomous weapons, such as cyber weapons and missile defense systems, are already in widespread and broadly accepted use by dozens of militaries worldwide. It also allows for the possibility that future technological progress and changes in the global security landscape, such as Russia’s potential deployment of artificial intelligence (AI)-enabled lethal autonomous weapons in Ukraine, might make new types of autonomous weapons desirable. The policy does, however, require proposals for such weapons to clear a high procedural and technical bar. In addition to demonstrating compliance with U.S. obligations under domestic and international law, DOD system safety standards, and DOD AI-ethics principles, proposed autonomous weapons systems must clear an additional senior review process in which the chairman of the Joint Chiefs of Staff, the under secretary of defense for policy, and the under secretary of defense for acquisition, technology, and logistics certify that the proposed system meets 11 additional requirements, each of which requires presenting considerable evidence.

Getting the signatures of the U.S. military’s highest-ranking officer and two under secretaries in a formal senior review is no easy task. Perhaps the strongest proof of the rigor required to surpass such a hurdle is the fact that no DOD organization has even tried.

There is no need for a broad rewrite of DODD 3000.09, a conclusion shared by DOD and the congressionally chartered National Security Commission on AI. However, the department does need to update the policy. As just one obvious example, the position of under secretary of defense for acquisition, technology, and logistics no longer exists, and its former responsibilities are now split between two new under secretary positions.

Moreover, new terms have entered the DOD policy lexicon that are not defined in DODD 3000.09 or anywhere else. For example, Deputy Secretary of Defense Kathleen Hicks’s May 2021 memo on implementing the DOD’s AI ethics principles states that the principles apply to “AI-enabled autonomous systems.” Presumably that refers to utilizing machine learning in functions related to the identification, selection, and engagement of targets, but the DOD has yet to formally define in policy what it means for a system to be “AI-enabled” or to be an “AI-enabled autonomous system.”

More than anything, the department needs to improve clarity among DOD technology research and development organizations about what DODD 3000.09 does and does not say. It is remarkable that, nearly 10 years after the policy’s original enactment, an Army colonel responsible for developing counter-drone technology was under the impression that DOD policy forbids him from incorporating autonomy for applications that are not only allowed but also expressly exempted from the senior review requirement.

As it updates the policy, there are at least four key issues that DOD needs to address. The first two will require actual changes to the policy, while the latter two can be addressed simply by providing additional clarification and guidance, perhaps through publication of a policy handbook.

Define how a system’s “AI-enabled” status does or does not affect the policy’s requirements.

Define how retraining machine learning models will be handled in the senior review process.

Clarify the specific features that formally define an autonomous weapon system.

Clarify the types of weapons that are and are not required to go through the autonomous weapon senior review process.

While the final word on these issues must come directly from DOD, the sections below attempt to shed some initial light.

Define how a system’s “AI-enabled” status does or does not affect the policy’s requirements.

The debate over what constitutes AI as opposed to mere mechanical computation and automation goes back at least as far as the 1830s, when Ada Lovelace worked with Charles Babbage to design and program the first mechanical computers. In recent decades, computer systems that in their heyday were routinely called “AI,” such as IBM’s chess-playing Deep Blue system in 1997, have higher-performing successors today that are merely called “software” or “apps.” Machine learning, a subfield of AI that has been responsible for extraordinary research and commercialization progress since 2012, is more often than not used as a synonym for AI—so much so that some argue that any system that does not use machine learning should not be referred to as AI.

It is important to know whether a system claiming to be AI-enabled is using machine learning. Machine learning works differently from traditional software. It is better at some things, worse at others, requires different factors to enable success, and has different failure modes and risks that need to be addressed. When the U.S. military says that a given system is AI-enabled or that a given project is an “AI project,” it almost always means machine learning.

While describing a military system as AI-enabled or machine learning-enabled provides useful information, it often remains unclear just what functionality is being provided by AI and how central AI is to the system overall. Suppose, hypothetically, that the F-35 Joint Strike Fighter Program Office decided that it should use machine learning to improve the performance on one of the aircraft’s many different types of sensors. Does that mean that the entire F-35 program, with its more than $8.5 billion in annual spending, is building AI-enabled weapon systems? The DODD 3000.09 update is a good opportunity to formally define “AI-enabled” in DOD policy and to specify how using machine learning does or does not affect the autonomous weapon senior review process.

Confusingly, common usage of these terms differs significantly in other countries. For example, Russian weapons manufacturers routinely refer to their automated and robotic military systems as using AI even if the system does not use machine learning, and they rarely make it clear when it does. Varied definitions complicate international diplomacy on these subjects.

Define how retraining machine learning models will be handled in the senior review process.

A 2019 report from the Defense Innovation Board was memorably named “Software Is Never Done.” Updates, bug fixes, cybersecurity patches, and new features are a critical aspect of managing all modern software systems. However, software updates for machine learning systems are different in nature from traditional software, and perhaps even more important. The “learning” in a machine learning system comes from applying a learning algorithm to a training data set in order to produce a machine learning model, which is the software that performs the desired function. To update the machine learning model, the developers provide a new (usually larger) training data set that more accurately reflects the changing operational environment. If the operational environment changes, failing to rapidly retrain a machine learning model can lead to reduced performance.
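The train-then-retrain cycle described above can be illustrated with a toy sketch. Everything in it is hypothetical: the one-feature threshold "learning algorithm," the data values, and the names `train`, `accuracy`, `original_env`, and `shifted_env` are assumptions chosen for illustration. It does not represent any DOD system or any particular machine learning library.

```python
from statistics import mean


def train(samples):
    """Toy learning algorithm (an assumption for illustration).

    `samples` is a list of (feature, label) pairs with labels 0 or 1.
    The learned "model" is a function that applies a threshold placed
    halfway between the mean feature value of each class.
    """
    mean0 = mean(x for x, y in samples if y == 0)
    mean1 = mean(x for x, y in samples if y == 1)
    threshold = (mean0 + mean1) / 2

    def model(x):
        return 1 if x > threshold else 0

    return model


def accuracy(model, samples):
    """Fraction of samples the model labels correctly."""
    return sum(model(x) == y for x, y in samples) / len(samples)


# Hypothetical training data collected in the original operating environment.
original_env = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Hypothetical data after the environment changes: both classes have shifted.
shifted_env = [(5.0, 0), (6.0, 0), (7.0, 0), (11.0, 1), (12.0, 1), (13.0, 1)]

# Version 1 is trained only on the original environment.
model_v1 = train(original_env)

# Version 2 is retrained on a new, larger data set that includes the shift.
model_v2 = train(original_env + shifted_env)

print("v1 on original data:", accuracy(model_v1, original_env))
print("v1 on shifted data: ", accuracy(model_v1, shifted_env))
print("v2 on shifted data: ", accuracy(model_v2, shifted_env))
```

In this toy case the first model is fully accurate on the data it was trained for, loses accuracy once the environment shifts, and recovers on the shifted data after retraining. Real systems are far more complex, but the dependence of performance on up-to-date training data is the same.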

Does each software update and machine learning model retraining require an autonomous weapon system to go through the senior review approval process all over again? If so, an already formidable process barrier risks unintentionally becoming an insurmountable one. A more reasonable solution would be for the senior review process to assess and decide upon the acceptability of the process for producing new training data; to test and evaluate procedures for determining whether new software updates are sufficiently robust and reliable; and to explicitly state which potential new features would trigger an additional round of the senior review approval process. This is similar to how the global automotive industry regulates software updates and safety compliance.

Clarify the specific features that formally define an autonomous weapon system.

Despite eight years of negotiations at the United Nations, there is still no internationally agreed-upon definition of autonomous weapons or lethal autonomous weapons. DODD 3000.09 does, however, formally define autonomous weapon systems and semi-autonomous weapon systems from the perspective of U.S. policy:

Autonomous weapon system: A weapon system that, once activated, can select and engage targets without further intervention by a human operator. This includes human-supervised autonomous weapon systems that are designed to allow human operators to override operation of the weapon system, but can select and engage targets without further human input after activation.

Semi-autonomous weapon system: A weapon system that, once activated, is intended to only engage individual targets or specific target groups that have been selected by a human operator. This includes:

Semi-autonomous weapon systems that employ autonomy for engagement-related functions including, but not limited to, acquiring, tracking, and identifying potential targets; cueing potential targets to human operators; prioritizing selected targets; timing of when to fire; or providing terminal guidance to home in on selected targets, provided that human control is retained over the decision to select individual targets and specific target groups for engagement.

‘Fire and forget’ or lock-on-after-launch homing munitions that rely on [Tactics, Techniques, and Procedures] to maximize the probability that the only targets within the seeker’s acquisition basket when the seeker activates are those individual targets or specific target groups that have been selected by a human operator.

Notably, machine learning is not mentioned anywhere in these definitions, and that is because machine learning is not always necessary. Traditional software is sufficient to deliver a high degree of autonomy for some military applications. For example, the Israel Aerospace Industries (IAI) Harpy is a decades-old drone that IAI openly acknowledges is an autonomous weapon. When in autonomous mode, the Harpy loiters over a given region for up to nine hours, waiting to detect electromagnetic emissions consistent with an onboard library of enemy radar, homes in on the emissions source (usually enemy air defense radar), and attacks. No human in the loop is required.

The critical feature of this definition is that the policy threshold for autonomy relates specifically and exclusively to the ability to autonomously “select and engage targets.” DOD officials talk often about their goals for increasing autonomy in weapon systems, but sometimes they mean increasing weapon autonomy in areas like guidance and navigation. For example, Mike E. White, assistant director for the DOD’s hypersonics office, stated in 2020 that he wanted future DOD hypersonic weapons to use autonomy and AI to “optimize flight characteristics.” Increasing hypersonic weapon autonomy for guidance and navigation is irrelevant from a DODD 3000.09 perspective and relatively innocuous from an international relations perspective, but the DOD should seek to minimize potential misunderstandings—especially about hypersonic weapons that could carry nuclear warheads and have significant implications for global strategic stability. This is an area where the DOD must communicate clearly and precisely.
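The threshold described above can be sketched as a simple rule. This is an illustrative reading of the quoted definition, not an official DOD test: the `SystemProfile` fields, the function name, and the two example systems are hypothetical, and the sketch does not cover exemptions or the senior review process itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical description of a weapon system, for illustration only."""
    name: str
    # True if, once activated, the system can select AND engage targets
    # without further intervention by a human operator.
    selects_and_engages_without_human: bool
    # Autonomy in other functions, such as guidance and navigation.
    autonomous_guidance: bool = False
    # Whether any part of the system uses machine learning.
    uses_machine_learning: bool = False


def is_autonomous_weapon_system(profile: SystemProfile) -> bool:
    """Apply the definitional threshold discussed in the text.

    Only the ability to select and engage targets matters. Machine learning
    and guidance autonomy are deliberately ignored, because the definition
    does not mention them.
    """
    return profile.selects_and_engages_without_human


# A loitering munition that finds and attacks radar emitters on its own,
# using traditional software (loosely modeled on the Harpy description).
loitering_munition = SystemProfile(
    name="radar-hunting loitering munition",
    selects_and_engages_without_human=True,
)

# A hypothetical weapon that uses machine learning to optimize its flight
# path but only engages a target chosen by a human operator.
guided_weapon = SystemProfile(
    name="ML-guided weapon with human-selected target",
    selects_and_engages_without_human=False,
    autonomous_guidance=True,
    uses_machine_learning=True,
)

for system in (loitering_munition, guided_weapon):
    print(system.name, "->", is_autonomous_weapon_system(system))
```

The point of the design is what the function leaves out: adding machine learning or navigation autonomy to a system does not change the result unless it also changes who selects the targets.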

Clarify the types of weapons that are and are not required to go through the autonomous weapon senior review process.

As mentioned above, DODD 3000.09 does not ban autonomous weapons systems but rather subjects them to additional technical and process scrutiny in the form of a senior review. However, not all weapons systems with autonomous functionality are required to go through the review. The diagram below provides a simplified depiction of which systems must go through the review and which are exempted. DOD should publish a similar diagram in a policy handbook to accompany the DODD 3000.09 update.

Conclusion

The United States was the first country to adopt a formal policy on autonomy in weapon systems. To the nation’s credit, its first attempt got nearly all the most important details right. However, for the policy to work as intended, it must be not only good, but also well understood. As the DOD revises and updates the policy, it should clearly communicate what the policy says and how the future development of AI-enabled and autonomous weapon systems will feature in the U.S. National Defense Strategy.
