California-based chip giant Nvidia recently unveiled its A100 artificial intelligence (AI) chip, designed to handle all AI workloads. Chip manufacturing has seen some major innovations in recent times. Last summer, I covered Cerebras, a California-based chip startup that raised the bar with its innovative chip design, dubbed the “Wafer-Scale Engine” (WSE).

As demand for supercomputing systems gathers pace, chip manufacturers are scrambling to come up with futuristic chip designs that can handle the complex calculations these systems perform. Intel, the biggest chip manufacturer, is working on powerful “neuromorphic chips” that use the human brain as a model. The design mimics the way brain neurons process information, and the proposed system has a computational capacity of 100 million neurons.

More recently, the Australian startup Cortical Labs has taken this idea a step further by designing a system that combines biological neurons with a specialized computer chip, pairing the power of digital systems with the ability of biological neurons to process complex calculations.

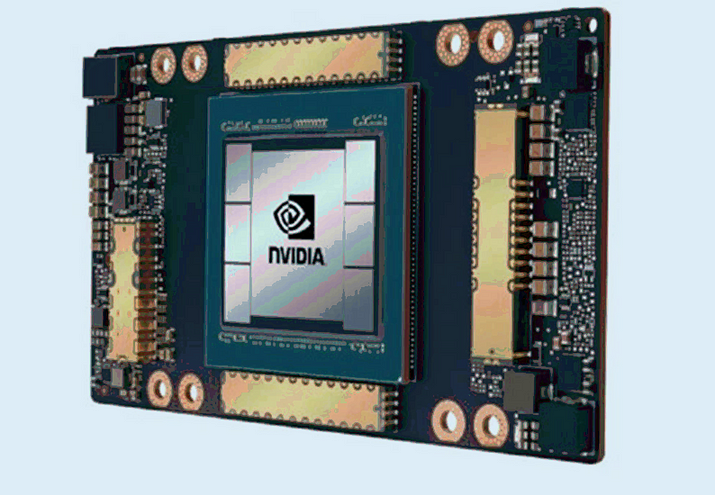

After a launch delayed by almost two months due to the pandemic, Nvidia released its 54-billion-transistor monster chip. A single DGX A100 system built on the chip packs 5 petaFLOPS of AI performance, and Nvidia says the A100 delivers up to 20 times the performance of its previous-generation chip, Volta. The chips, and the DGX A100 systems that use them, are now available and shipping.

“You get all of the overhead of additional memory, CPUs, and power supplies of 56 servers… collapsed into one. The economic value proposition is really off the charts, and that’s the thing that is really exciting.”

~ CEO Jensen Huang, Nvidia

The third generation of Nvidia’s DGX AI platform, the DGX A100 essentially packs the computing power of an entire data center into a single rack. According to Nvidia, a typical customer handling AI training tasks today needs 600 central processing unit (CPU) systems costing $11 million, which occupy 25 racks of servers and consume 630 kilowatts of power. Nvidia’s DGX A100 systems provide the same processing power for $1 million, in a single server rack consuming 28 kilowatts.
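Taking the figures in the paragraph above at face value (they are Nvidia’s own comparison, not independent benchmarks), a quick calculation shows how much smaller the DGX A100 setup is on each axis. This is only an arithmetic sketch; the dictionary names and keys are illustrative, not part of any Nvidia tool.

```python
# Figures as quoted in the article (Nvidia's comparison, not independent measurements).
cpu_cluster = {"cost_usd": 11_000_000, "racks": 25, "power_kw": 630}
dgx_a100_rack = {"cost_usd": 1_000_000, "racks": 1, "power_kw": 28}


def reduction_factors(before: dict, after: dict) -> dict:
    """Return how many times smaller each metric in `after` is than in `before`."""
    return {key: before[key] / after[key] for key in before}


factors = reduction_factors(cpu_cluster, dgx_a100_rack)
for metric, factor in factors.items():
    print(f"{metric}: {factor:.1f}x lower")
```

On these numbers, the cost drops about 11x, the rack count 25x and the power draw 22.5x, which is the “economic value proposition” Huang refers to.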

It also lets you split a job into smaller, isolated workloads: using the A100’s Multi-Instance GPU (MIG) feature, each system can be partitioned into as many as 56 instances (eight A100 GPUs per system, each divisible into up to seven instances). Nvidia has already received orders from some of the biggest organizations around the world. Here are a few of the notable ones:

The U.S. Department of Energy’s (DOE) Argonne National Laboratory was the first to receive the system, which it is using to better understand and fight COVID-19.

The University of Florida will be the first U.S. institution of higher learning to deploy DGX A100 systems, as part of an effort to integrate AI across its entire curriculum.

Other early adopters include Biomedical AI at the University Medical Center Hamburg-Eppendorf, Germany, which is using the system to advance clinical decision support and process optimization.

On top of this, the thousands of customers running previous-generation DGX systems around the world are now Nvidia’s prospective customers. Nvidia’s attempt to build a single GPU microarchitecture for both commercial AI and consumer graphics, by switching different elements of the chip on or off for each use, might give it an edge in the long run.

Other announcements at the launch included Nvidia’s next-generation DGX SuperPod, a cluster of 140 DGX A100 systems capable of 700 petaFLOPS of AI computing power. Chip design finally seems to be catching up with the computing needs of the future.
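The SuperPod figure follows directly from the per-system numbers quoted earlier: 140 systems at 5 petaFLOPS each gives 700 petaFLOPS. The sketch below makes that arithmetic explicit. It assumes Nvidia’s published specs of eight A100 GPUs per DGX A100 and up to seven MIG instances per GPU (which reproduces the 56-instance figure), and it assumes naive linear scaling of peak compute; real workloads rarely scale perfectly across a cluster. The constant and function names are hypothetical.

```python
# Quoted in the article: 5 petaFLOPS of AI performance per DGX A100 system.
PFLOPS_PER_SYSTEM = 5
# Assumptions (Nvidia's published specs): 8 GPUs per system, up to 7 MIG instances per GPU.
GPUS_PER_SYSTEM = 8
MIG_INSTANCES_PER_GPU = 7


def cluster_totals(num_systems: int) -> dict:
    """Aggregate peak AI compute, GPU count and maximum MIG instances,
    assuming peak figures simply add up across systems."""
    return {
        "petaflops": num_systems * PFLOPS_PER_SYSTEM,
        "gpus": num_systems * GPUS_PER_SYSTEM,
        "max_mig_instances": num_systems * GPUS_PER_SYSTEM * MIG_INSTANCES_PER_GPU,
    }


print(cluster_totals(1))    # a single DGX A100
print(cluster_totals(140))  # the DGX SuperPod
```

A single system comes out at 5 petaFLOPS and 56 instances, matching the article, while the 140-system SuperPod totals 700 petaFLOPS across 1,120 GPUs.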
