SYDNEY J. FREEDBERG JR.

WASHINGTON — Imagine a militarized version of ChatGPT, trained on secret intelligence. Instead of painstakingly piecing together scattered database entries, intercepted transmissions and news reports, an analyst types in a quick query in plain English and gets back, in seconds, a concise summary — a prediction of hostile action, for example, or a profile of a terrorist.

But is that output true? With today’s technology, you can’t count on it, at all.

That’s the potential and the peril of “generative” AI, which can create entirely new text, code or images rather than just categorizing and highlighting existing ones. Agencies like the CIA and State Department have already expressed interest. But for now, at least, generative AI has a fatal flaw: It makes stuff up.

“I’m excited about the potential,” said Lt. Gen. (ret.) Jack Shanahan, founding director of the Pentagon’s Joint Artificial Intelligence Center (JAIC) from 2018 to 2020. “I play around with Bing AI, I use ChatGPT pretty regularly — [but] there is no intelligence analyst right now that would use these systems in any way other than with a hefty grain of salt.”

“This idea of hallucinations is a major problem,” he told Breaking Defense, using the term of art for AI answers with no foundation in reality. “It is a showstopper for intelligence.”

Shanahan’s successor at JAIC, recently retired Marine Corps Lt. Gen. Michael Groen, agreed. “We can experiment with it, [but] practically it’s still years away,” he said.

Instead, Shanahan and Groen told Breaking Defense that, at this point, the Pentagon should swiftly start experimenting with generative AI — with abundant caution and careful training for would-be users — with an eye to seriously using the systems when and if the hallucination problem can be fixed.

Who Am I?

To see the current issues with popular generative AI models, just ask the AI about Shanahan and Groen themselves, public figures mentioned in Wikipedia and many news reports.

Then-Lt. Gen. John “Jack” Shanahan

On its first attempt, ChatGPT 3.5 correctly identified Shanahan as a retired Air Force officer, AI expert, and former director of both the groundbreaking Project Maven and the JAIC. But it also said he was a graduate of the Air Force Academy, a fighter pilot, and an advisor to AI startup DeepMind — none of which is true. And almost every date in the AI-generated bio was off, some by nearly a decade.

“Wow,” Shanahan said of the bot’s output. “Many things are not only wrong, but seemingly out of left field… I’ve never had any association with DeepMind.”

What about the upgraded ChatGPT 4.0? “Almost as bad!” he said. This time, in addition to a different set of wrong jobs and fake dates, he was given two children that did not exist. Weirder yet, when you enter the same question into either version a second time, it generates a new and different set of errors.

Nor is the problem unique to ChatGPT. Google’s Bard AI, built on a different AI engine, did somewhat better, but it still said Shanahan was a pilot and made up assignments he never had.

“The irony is that it could pull my official AF bio and get everything right the first time,” Shanahan said.

And Groen? “The answers about my views on AI are pretty good,” the retired Marine told Breaking Defense after reviewing ChatGPT 3.5’s first effort, which emphasized his enthusiasm for military AI tempered by stringent concern for ethics. “I suspect that is because there are not too many people with my name that have publicly articulated their thoughts on this topic.”

On the other hand, Groen went on, “many of the facts of my biography are incorrect, [e.g.] it got place of birth, college attended, year entered service wrong. It also struggled with units I have commanded or was a part of.”

How could generative AI get the big picture right but mess up so many details? It’s not that the data isn’t out there: As with Shanahan, Groen’s official military bio is online.

But so is a lot of other information, he pointed out. “I suspect that there are so many ‘Michaels’ and ‘Marine Michaels’ on the global internet that the ‘pattern’ that emerged contains elements that are credible, but mostly incorrect,” Groen said.

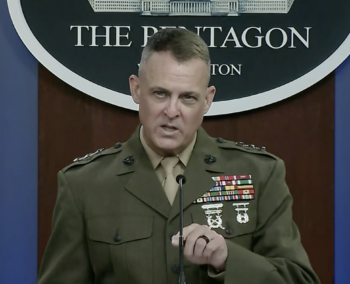

Then-Lt. Gen. Michael Groen, director of the Joint AI Center, briefs reporters in 2020. (screenshot of DoD video)

This tendency to conflate general patterns and specific facts might explain the AIs’ insistence that Shanahan is an Air Force Academy grad and fighter pilot. Neither of those things is true of him as an individual, but they are commonly mentioned attributes of Air Force officers as a group. It’s not true, but it’s plausible — and this kind of AI doesn’t remember a database of specific facts, only a set of statistical correlations between different words.

“The system does not know facts,” Shanahan said. “It really is a sophisticated word predictor.”
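A toy illustration of how a pure word predictor can override a specific fact with a general pattern. This is a minimal sketch, not how ChatGPT or Bard actually work: it is a simple bigram counter, far cruder than a transformer, and the four-line corpus with placeholder names (`alpha`, `bravo`, `charlie`, `delta`) is invented for the example.

```python
from collections import Counter, defaultdict

# Invented mini-corpus with placeholder names; these are not real biographies.
corpus = [
    "alpha trained as pilot",
    "bravo trained as pilot",
    "charlie trained as pilot",
    "delta trained as navigator",
]

def train_bigrams(sentences):
    """Count, for each word, which words follow it across the corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows, prompt):
    """Return the most frequent follower of the prompt's last word, or None."""
    last = prompt.split()[-1]
    counts = follows.get(last)
    if not counts:
        return None  # the model has never seen this word
    return counts.most_common(1)[0][0]

model = train_bigrams(corpus)
# The corpus says delta is a navigator, but the model only stores word
# correlations, so the common pattern wins.
print(predict_next(model, "delta trained as"))  # pilot
```

The toy model has the right fact in its training text and still answers with the majority pattern, because it keeps counts of which words follow which, not a record of who did what.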

The companies behind the tech each acknowledge and warn that their AI apps may produce incorrect information and urge user caution. The missteps in AI chatbots are not dissimilar to those of generative AI artists, like Stable Diffusion, which produced the image of a mutant Abrams below.

Output from a generative AI, Stable Diffusion, when prompted by Breaking Defense with “M1 Abrams tank.”

What Goes Wrong

In the cutting-edge Large Language Models that drive ChatGPT, Bard, and their ilk, “each time it generates a new word, it is assigning a sort of likelihood score to every single word that it knows,” explained Micah Musser, a research analyst at Georgetown University’s Center for Security and Emerging Technology. “Then, from that probability distribution, it will select — somewhat at random – one of the more likely words.”

That’s why asking the same question more than once gets subtly or even starkly different answers every time. And while training the AI on larger datasets can help, Musser told Breaking Defense, “even if it does have sufficient data, it does have sufficient context, if you ask a hyper-specific question and it hasn’t memorized the [specific] example, it may just make something up.” Hence the plausible but invented dates of birth, graduation, and so on for Shanahan and Groen.
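The selection step Musser describes can be sketched in a few lines. This is an illustration under stated assumptions, not the code of any real model: the scores in `toy_scores` are invented, the function names are hypothetical, and the `temperature` knob is a common way to control randomness that the article itself does not mention.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw likelihood scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(scores_by_word, temperature=1.0, rng=random):
    """Pick one word at random, weighted by its probability."""
    words = list(scores_by_word)
    probs = softmax([scores_by_word[w] for w in words], temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Invented scores for the word after "He graduated in"; not from a real model.
toy_scores = {"1984": 2.0, "1979": 1.8, "1991": 1.5, "banana": -3.0}

# Asking the same "question" five times can give different answers.
answers = [sample_next_word(toy_scores) for _ in range(5)]
print(answers)  # varies from run to run
```

Every plausible year has a real chance of being chosen, so the output can change between runs while always looking reasonable. Lowering `temperature` toward zero makes the top-scoring word win almost every time, which makes the answer more repeatable but no more likely to be true.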

Now, it’s understandable when human brains misremember facts and even invent details that don’t exist. But surely a machine can record the data perfectly?

Not these machines, said Dimitry Fisher, head of data science and applied research at AI company Aicadium. “They don’t have memory in the same sense that we do,” he told Breaking Defense. “They cannot quote sources… They cannot show what their output has been inspired by.”

Ironically, Fisher told Breaking Defense, earlier attempts to teach AIs natural language did have distinct mechanisms for memorizing specific facts and inferring general patterns, much like the human brains that inspired them. But such software ran too slowly on any practical hardware to be of much use, he said. So instead the industry shifted to a type of AI called a transformer — that’s the “T” in “ChatGPT” — which only encodes the probable correlations between words or other data points.

“It just predicts the most likely next word, one word at a time,” Fisher said. “You can’t have language generation on the industrial scale without having to take this architectural shortcut, but this is where it comes back and bites you.”

These issues should be fixable, Fisher said. “There are many good ideas of how to try and solve them – but that’ll take probably a few years.”

Shanahan, likewise, was guardedly optimistic, if only because of the financial incentives to get it right.

“These flaws are so serious that the big companies are going to spend a lot of money and a lot of time trying to fix things,” he said. “How well those fixes will work remains the unanswered question.”

The Combined Air Operations Center (CAOC) at Al Udeid Air Base, Qatar. (US Air Force photo by Staff Sgt. Alexander W. Riedel)

If It Works…

If generative AI can be made reliable — and that’s a significant if — the applications for the Pentagon, as for the private sector, are extensive, Groen and Shanahan agreed.

“Probably the places that make the most sense in the near term… are those back-office business [functions] from personnel management to budgeting to logistics,” Shanahan said. But in the longer term, “there is an imperative to use them to help deal with … the entire intelligence cycle.”

So while the hallucinations have to be fixed, Shanahan said, “what I’m more worried about, in the immediate term, is just the fact that I don’t see a whole lot of action in the government about using these systems.” (He did note that the Pentagon Chief Digital & AI Office, which absorbed the JAIC, has announced an upcoming conference on generative AI: “That’s good.”) Instead of waiting for others to perfect the algorithms, he said, “I’d rather get them in the hands of users, put some boundaries in place about how they can be used… and then focus really heavy on the education, the training, and the feedback” from users on how they can be improved.

Groen was likewise skeptical about the near term. “We don’t want to be in the cutting edge here in generative AI,” he said, saying near-term implementation should focus on tech “that we know and that we trust and that we understand.” But he was even more enthused about the long term than Shanahan.

“What’s different with ChatGPT, suddenly you have this interface, [where] using the English language, you can ask it questions,” Groen said. “It democratizes AI [for] large communities of people.”

That’s transformative for the vast majority of users who lack the technical training to translate their queries into specialized search terms. What’s more, because the AI can suck up written information about any subject, it can make connections across different disciplines in a way a more specialized AI cannot.

“What makes generative AI special is it can understand multiple narrow spaces and start to make integrative conclusions across those,” Groen said. “It’s… able to bridge.”

But first, Groen said, you want the strong foundations to build the bridge across, like pilings in the river. So while experimenting with ChatGPT and co., Groen said, he would put the near-term emphasis on training and perfecting traditional, specialist AIs, then layer generative AI over top of them when it’s ready.

“There are so many places today in the department where it’s screaming for narrow AI solutions to logistics inventories or distribution optimizers, or threat identification … like office automation, not like killer robots,” Groen said. “Getting all these narrow AIs in place and building these data environments actually really prepares us for — at some point — integrating generative AI.”
