by Christopher Ankersen

“Imperfection, ambiguity, opacity, disorder, and the opportunity to err, to sin, to do the wrong thing: all of these are constitutive of human freedom, and any attempt to root them out will root out that freedom as well.”
Evgeny Morozov1

“Technology is making gestures precise and brutal, and with them men.”
Theodor W. Adorno

Humans now share their world with artificial intelligence (AI). AI does most tasks much better than we do. It can sift through data much more quickly than humans; it is adept at pattern matching; it is not prone to fatigue, stress, or self-doubt. For these reasons, it is the subject of immense investment. Last year alone, over £1 billion was raised for research and development in AI in the UK.2 And for good reason: the global economic gains from AI are predicted to top £12 trillion by 2030.3

But the commercial world is not the only one to take notice. Just as the Wright Brothers’ invention went from skimming along the sands of North Carolina in 1903 to observing, bombing, and dogfighting above the Western Front in 1914, AI has swiftly made the leap from civilian to military application. Since Alan Turing’s bombe literally ‘cracked the code’ at Bletchley Park all those years ago, we have come to rely on the power of computation to achieve superhuman results. In our visions of the future (whether of war or peace), AI is intimately implicated in defence as a means of overcoming the sluggish, fickle, and error-prone nature of human decision-making. If the wars of the 21st Century have imparted a single lesson, it is this: having our troops mired in ambiguous situations, unable to see into the next compound, and incapable of loitering without exposing themselves to mortal risk is increasingly unacceptable to the generals, politicians, and citizens who, ultimately, put them there.

Enter technology: uninhabited, quasi-autonomous weapons systems designed to undertake prolonged and dangerous missions are perfect solutions for the kinds of stability operations in which we repeatedly and incessantly find ourselves. Algorithms already create search patterns, power facial and signature recognition systems, and remain at their posts indefinitely.

What is more, even if those nuisance wars of choice are coming to an end (at least for us in the West), they are to be replaced by the even more frightening prospect of a return to great power competition. The US and its allies are increasingly challenged by Russia and China, and this challenge takes many forms, from influence operations to conventional confrontations, perhaps even to nuclear warfighting. In this arena, AI may prove even more valuable: the telemetry of incoming missiles, the ability to control massive swarms of sensors, the exquisite timing of the perfect counter-measure…all are beyond the cognition of mere humans. Our survival could very well depend on the kind of split-second decision-making only AI can deliver. If smart bombs were good, then brilliant bombs will be better.

Can it be that easy? Not that developing and deploying AI is child’s play; far from it. The intellectual and financial investments involved are indeed mind-boggling. And despite all the sophistication that goes into the algorithms that power machine learning and the AI process, we have rediscovered that ‘garbage in, garbage out’ still reigns, meaning that the AI product, whatever form it eventually takes, will be far from perfect.4

All that aside, there now appears to be more than a simple fascination with harnessing AI and exploiting it in the military realm. That fascination has become a compulsion: AI is a necessity, without which we risk losing our edge.5 This impulse is entirely human and consists of twin desires: to avoid misery and not to be left behind. While these desires are neither new nor contemptible, they do mean that we are heading into dangerous territory.

Outsourcing Drudgery and Pain

“Beware; for I am fearless, and therefore powerful.”
Mary Shelley, Frankenstein

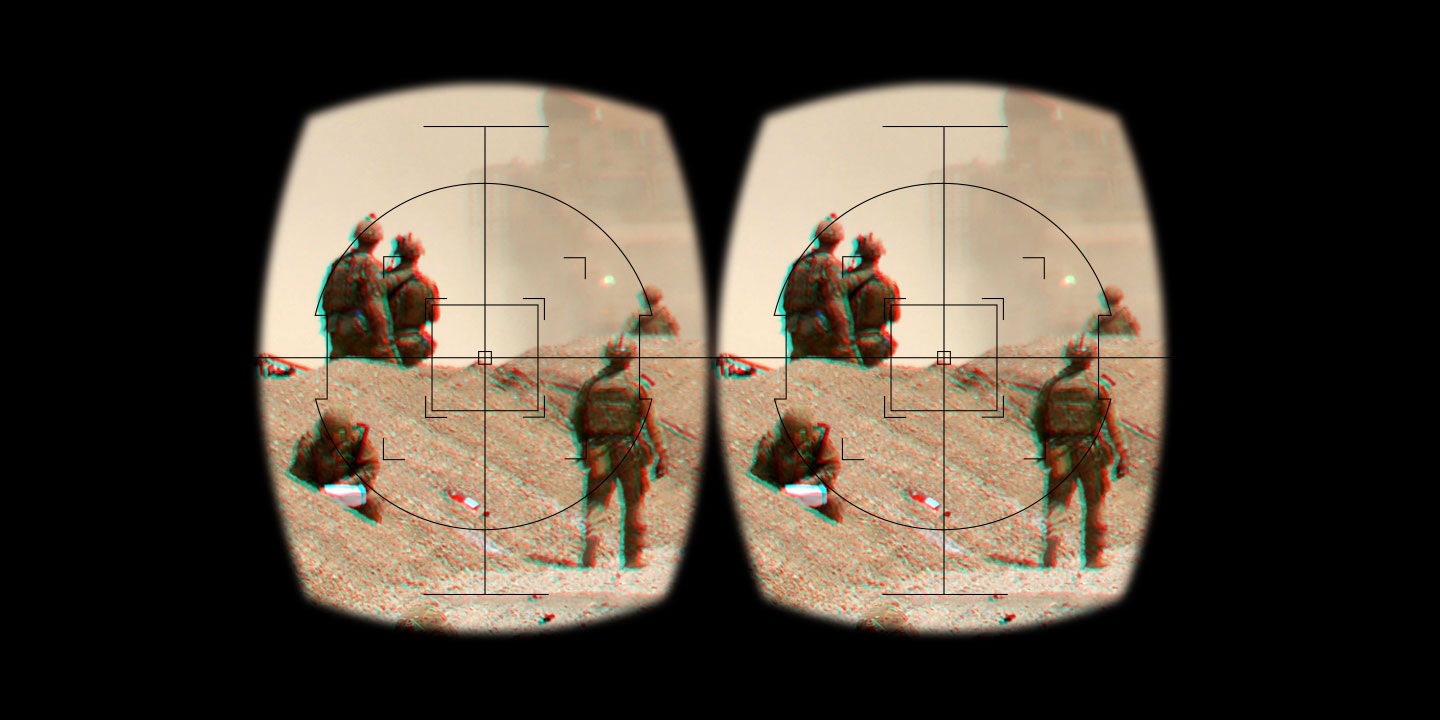

This obsession with technology is an extension of two impulses that have long driven military development: to overcome human weaknesses and to outsource as many of the brutal aspects of war as possible to others, whether human, beast, or machine. These have often been twinned: donkeys can carry more ammunition than people can, and moving the weight off the backs of soldiers and onto beasts improved the lot of the biped. This was repeated again and again, with rail and truck picking up where the horse left off. Similarly, as human vision lacks range and must ‘close down’ every so often if it is to remain effective, tired, myopic eyes were replaced by the ceaseless gaze of cameras, some even orbiting in space. And it is not just lack of capacity that machines can overcome; they can also address the woeful fragility of human fighters. Tanks, for instance, besides being mobile guns, are metallic shields, providing a modicum of protection from the lethality of the battlefield. We constantly strive for “the externalization of the burden of warfare”, whereby we seek out “technological and human surrogates [that] enable the state to manage the risks of post-modern conflict remotely.”6

We are aware now that those risks are not just corporeal; contemporary warfare does more than end lives and remove limbs. Combat has the power to wound mentally those who take part in it, causing critical stress that can lead to secondary damage in the form of haunting nightmares, chemical dependency, anti-social behaviour, and, all too often, suicide. Even drone pilots, often thousands of kilometers away from their targets, are not wholly liberated from this trauma.7 In that way, AI represents nothing more than the latest attempt to put distance, literal and figurative, between humans and the injury, both physical and moral, caused by war.8 However, AI does promise something revolutionary in this regard: it would not just displace humans from the battlefield, it could replace them altogether.

That’s Just Loopy

There is debate about whether replacement represents a step too far. For some, wholly autonomous AI is a manifestation of the dystopic idea of ‘killer robots.’9 We feel more comfortable with a human ‘in the loop’ making the final decision about when or if to pull the trigger. AI might be able to do the sums rapidly, but do we trust it with matters of life or death? The kind of future we want “should be a society where people feel empowered, not threatened by AI.”10 That just makes sense…right?

But Everyone is Doing it…Faster

To the mix of wanting to avoid pain and retain control, we need to add another age-old compulsion: that of keeping up with others. Arms races, whether in the form of dreadnoughts, strategic bombers, or intercontinental ballistic missiles, occur because we cannot stand by and let our opponents gain an advantage. Russian and especially Chinese militaries are working hard on AI. Because we do not know just what they are up to, we cannot be sure that we are not already falling behind, and therefore we must work to catch up.11 What we do know about Chinese commercial capability in the field of high technology and advanced computing leads some to believe that the West is already victim to an ‘AI gap’.12 If our social and political instincts to avoid putting our own people at risk do not push us to develop AI, then surely the immutable drive for survival will.

Moreover, we need to worry not only about the amount of AI being developed by our rivals, but about how they might use it. Our consciences may insist on some form of human/loop relationship, but can we count on our adversaries’ consciences doing the same? If they flip the switch to fully automatic, would that make them even faster, thereby defeating our more human system? Just as uncertainty will create pressure to build more AI in order to keep up with our rivals, so too will it create pressure to build faster AI. It is clear that the slowest portion of the AI/human loop will forever be the human. There will be little sense in building AI that can perform 99% of the find, fix, fire cycle in a fraction of a second, only to wait for a ponderous human to give the thumbs up. This has already led to a retreat from a posture of positive control (human in the loop) to one of monitoring (human on the loop), whereby a human keeps watch on what is going on, ready to intervene at the first sign of trouble.

The reality is, though, that this position is untenable and illusory. Human monitors will be overwhelmed by the speed, scale, and scope of the systems they are charged with; indeed, they may wish to create AI systems solely to aid the monitoring process, which of course would lead to a cycle of infinite recursion. Even if rapidity and size could be tamed, our human monitors would still be faced with a deeper problem: “No one really knows how the most advanced algorithms do what they do.”13 We have created tools that we do not fully understand, and may not be able to control.

Escalation Frustration

So what of it, you might well ask. Where can we turn to understand the impact of AI on war? Should we look to 21st Century tech gurus for guidance? Or the oracles of yesterday, in their guise as 20th Century authors of science fiction? While these may be of some assistance, the best place to look is a 19th Century philosopher of war. Carl von Clausewitz believes that the nature of war is to trend towards the absolute; that is, war without limits, where the escalation of violence goes on, on both sides of a conflict, until both are spent and one is victorious. Absolute war leads to absolute annihilation, the Prussian warns. Luckily, though, such annihilation is more theoretical than real. Absolute war is rare in our actual world. What makes this so, says Clausewitz, are the impediments of fog, friction, and politics. Because we are unsure of what lies behind the next hill, because tires run flat, and because governments often vacillate, the world has been spared from absolute war, and therefore, from total destruction. Clausewitz explains it thus:

The barrier [that prevents war from becoming absolute] is the vast array of factors, forces and conditions in national affairs that are affected by war. No logical sequence could progress through their innumerable twists and turns as though it were a simple thread that linked two deductions. Logic comes to a stop in this labyrinth; and those…who habitually act, both in great and minor affairs, on particular dominating impressions or feelings rather than according to strict logic, are hardly aware of the confused, inconsistent, and ambiguous situation in which they find themselves.
Carl von Clausewitz, On War

At its heart, as a means of improving upon warfare and reducing immediate human suffering, AI is an attempt to cut through the fog of war, to eliminate friction, and ultimately to de-politicize war, turning it from a phenomenon marked by an amalgam of rationality, irrationality, and non-rationality,14 into one of hyper-rationality.

In a hyper-rational world where AI were master of the loop, war would be waged strictly according to logic and reason, freed from fear, insecurity, and quavering. Mistakes could be reduced or even eliminated. Extraneous considerations, those that keep human commanders up at night, like the scale of killing, concern for morale, the welfare of the troops, or even one’s reputation in posterity, could be properly ignored. Surely that would be an improvement?

Definitely not. Consider Clausewitz’s clues in the passage above: war, as we know it, cannot be viewed as strictly logical; there are too many ambiguities (what rational actor theorists such as economists might call ‘externalities’). If war is disembedded from its surrounding social and political context (its human context15), what is to prevent it from reaching the end point of absolute war? War’s nature, as we know it now, is founded on human particularities and bounded by our failings. If those limits were to be removed through the application of artificial intelligence, then the nature of war, long held to be unchanging, would melt away, transformed into something non-human, potentially inhuman, probably inhumane. When waging war, authentic humanity is our safety net; alarmingly, artificial intelligence has already begun to pick at its all-too-gossamer threads.

What is to be done?

“Forgetting extermination is part of extermination.”
Jean Baudrillard

We will not get the AI genie back into the bottle. It will coexist with so many of its siblings (carbon-based combustion, the secrets of nuclear power), spilled forever from Pandora’s amphora. What must be done, though, is to match the efforts at weaponizing artificial intelligence with a commitment to maintaining our authentic humanity. We need doubt, pity, and hesitation if we are to survive.

If we don’t know how to turn it off, should we even turn it on?

The first step is to proceed with caution. As the name suggests, machine learning means that the machines we are creating are capable of their own development. While many insist that fears of today’s AI gaining consciousness are unfounded, the reality is we just don’t know what the future holds. Even when handicapped by flaws in its origin scripts, AI, still in its relative infancy, has moved beyond ‘pattern matching’ and ‘option cycling’. It has re-created ancient games and begun communicating with itself in ways we do not fully understand.16 It can team up and beat humans in games of head-to-head simulated combat.17 So far this has been in vitro, largely confined to the test-tube world of the laboratory. But what would we (what could we) do if this proto-singularity happens ‘in the wild’, where systems are connected not to game boards but to lethal effects? Proponents of AI are quick to dismiss the spectres of Skynet, The Terminator, WarGames, and HAL as the stuff of fantasy, but the reality is we have already seen the first green shoots of a form of para-consciousness. We just don’t know where they will lead. We need to build in mechanisms that let us pull the plug if we need to.

Focus on inclusive development, thinking out loud, and learning to code.

To paraphrase the Washington Post’s tagline (“Democracy Dies in Darkness”): humanity dies in darkness. Concerns over commercial and national security secrets will push AI development into closed labs. Algorithms will be written by small groups of coders, who are in turn given instructions by even smaller groups of decision-makers. This tendency must be resisted at all costs, as it will increase both the ‘black box effect’ and the in-built biases that already plague the AI universe. A world populated by competing ‘Manhattan Projects’ will end up creating destabilizing weapons with minds of their own. The ‘quick win’ of solving some technical puzzle may be offset by the eventual consequences.

Everyone involved in the human/machine interface, from politicians to commanders to watchkeepers, needs to become literate in what AI is, what it does, and what it does not do. The more it resembles incomprehensible magic, the less likely it is to be deployed correctly. AI literacy will need to be added to the professional and personal development requirements of serving officers (and not just those in the Signals Corps) if they are to remain effective managers of violence.18

As ever, the most worrying source of uncertainty will not be technical. Rather, it will be the intentions of the adversaries building and deploying these systems. The nascent international fora and mechanisms for dialogue and for developing norms on the use of cyber and AI in defence should be wholeheartedly supported.19 Where possible, transparency and confidence-building measures should be developed and highlighted, much as was done to mitigate the risk of accidental nuclear war in an earlier age.

‘Melancholic and Fascinated’

War is a human endeavor. Humans must not be seen as a flaw in the system; in truth, they are the only feature worth keeping. Otherwise, we may well end up liberated from the immediate effects of war, only to sit on the sidelines, melancholic and fascinated, hostages to something else’s decisions. Pain and sacrifice are not unnecessary byproducts of war; they are constituent safeguards of it. Hesitation due to compassion for one’s fellow traveler to the grave has saved more than it has imperiled. Could AI have come up with a preposterous back channel gambit and a quid pro quo elimination of nuclear weapons from both Turkey and Cuba as a means of avoiding World War III? Would AI have sweated it out at 3 AM, willing to believe, against all good reason, that the positive signals were not incoming missiles requiring immediate retaliation?20 No. Only slow, irrational, unpredictable humans can do that.
