John Marke

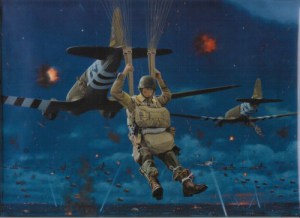

On this, the 71st anniversary of the World War II D-Day invasion, it is only fitting to remind ourselves that things rarely go as planned in battle.

The 19th-century Prussian military theorist Carl von Clausewitz called it the “fog of war.” It must have been pretty foggy on the night of June 5th and the morning of June 6th, 1944, off the coast of Normandy. In the predawn hours, airborne troopers were dropped all over the battlefield, few of them hitting their planned “drop zones”…

The Rule of LGOPs

“After the demise of the best Airborne plan, a most terrifying effect occurs on the battlefield. This effect is known as the Rule of LGOPs. This is, in its purest form, small groups of 19-year-old American Paratroopers. They are well-trained, armed to the teeth and lack serious adult supervision. They collectively remember the Commander’s intent as ‘March to the sound of the guns and kill anyone who is not dressed like you…’ …or something like that. Happily, they go about the day’s work…”

The Rule of LGOPs (Little Groups of Paratroopers) is instructive:

– They shared a common vision

– The vision was simple, easy to understand, and unambiguous

– They were trained to improvise and take the initiative

– They needed only to be told what to do, not how to do it.

To be sure, I am not denigrating planning, whether that structured thought effort is military, homeland security, or risk assessment (which I include as a type of planning). But anticipation must go hand in glove with adaptability.

The Rule of LGOPs is, of course, a metaphor for resilience. All armies, by the way, believe their soldiers are the best, the bravest, the most noble. But not all are the most resilient or adaptable. That was the case with the German Army in Normandy, which, ironically, was commanded by Field Marshal Erwin Rommel. Rommel earned the nickname “The Desert Fox” for being a master of maneuver warfare. The irony, of course, is that “The Fox” was now charged with defending the European coastline from Spain’s northern border to Norway. Rommel, the master of the blitz maneuver, was locked into a static, defensive position.

Rommel faced a terrible quandary: how do you defend more than 5,300 kilometres of coastline? Homeland Security faces a similar quandary: how do you defend against an almost infinite number of potential targets? Think about this in terms of risk and investment. What is the threat, where and how am I vulnerable, and what is the consequence of an attack launched at a particular place in this landscape?
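Those three questions map onto the way risk is conventionally framed in homeland-security analysis: as a function of threat (T), vulnerability (V), and consequence (C), often simply multiplied together. The worked example below is a minimal sketch under that assumption; the numbers are hypothetical, chosen only to show the arithmetic, and are not estimates for any real target.

$$
\begin{aligned}
R &= T \times V \times C \\
  &= 0.1 \times 0.5 \times 1000 = 50
\end{aligned}
$$

Here T is the (hypothetical) probability that an attack is attempted against a given target, V the probability that it succeeds if attempted, and C its consequence in some unit of loss, so R reads as an expected loss. Each of the three terms has to be estimated for every potential target, which is why the assumptions behind those estimates matter so much.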

Let’s stretch a bit and compare two theories of risk: Auditor v. LGOP

Both the Auditor and the LGOP are creatures of their respective environments and assumptions, i.e. their “world views.” While I appreciate that most points of view are “relative,” for the sake of argument let me give the following biased impressions:

The Auditor, for the most part, deals with an orderly and predictable world: rules, regulations, processes, protocols, and procedures. They deal primarily with man-made systems. The title “Father of Accounting” is generally accorded to the Franciscan friar Luca Pacioli (pronounced pot-CHEE-oh-lee), who, in 1494, codified the methods used by Venetian merchants in a book that served as the world’s only accounting textbook until well into the 16th century. Remarkably sophisticated, if not a tad compulsive, he described the accounting cycle we know today and also suggested that a person should not go to sleep at night until the debits equalled the credits.

Now, accounting is not the same as auditing, although the two are inextricably bound in mainstream commerce and governance, e.g. in determining the financial health of a publicly traded company or the efficient use of resources in government.

The paradigm shared by today’s Enterprise Risk Management (ERM), the GAO, and auditing in general (and its governing board, the Public Company Accounting Oversight Board, or PCAOB) is a product of university-level professional education, shared accounting standards, certifications, governing bodies, and so on. Technically there is a difference between ERM and audit. But let me put it this way: they share so much professional DNA that, were they actual families, their offspring would not be allowed to marry. And this is important: they share the same epistemic outlook.

The LGOP, on the other hand, is a first responder: Paratrooper, Marine, Ranger, SEAL, Air Commando, Special Forces, Firefighter, Paramedic, Police Officer, ICE, Coast Guard. I probably missed your group, but you know who you are. They are also the steelworkers who showed up at the World Trade Center and started rescue and recovery operations. Nobody told them to do that.

Today, more than ever before, LGOPs operate in the fog of battle, and they also know a guy named Mr. Murphy, whose law holds that if something can go wrong, it will.

The LGOP will (usually, but not always) do what you tell them.

More importantly, they will do what you haven’t even thought of, and do it well. They know the world is uncertain and life is a crapshoot. They improvise, take the initiative, and, like the paratroopers riding the horses pictured below, “they didn’t get the memo.”

I would never talk to paratroopers about “epistemology,” but I sure would ask their bosses about it. If you commit people to war, to danger, to risk… then your “world view” and all your underlying assumptions better hold water. This, by the way, is the whole point of this paper, and I will return to this theme a bit later.

OKAY, what’s YOUR epistemology?

Now, I didn’t take a poll of auditors, but actions speak louder than words, and judging by their actions, auditors are solidly grounded in the epistemology of Newtonian physics. This is a mechanical view of the world: think “clock.” The clock is the sum of its parts. It can be reduced to its components (called reductionism), and its operation is both knowable and “determined.” In this view the world is largely linear, i.e. it moves from point “A” to point “B” and 2+2 = 4. What you get out is proportional to what you put in. This is the world of the engineer, the auditor, the accountant, and the time-and-motion expert (we once called them efficiency experts): the world of Frederick Taylor and of Frank and Lillian Gilbreth.

A competing view to the Newtonian one could be termed “quantum.” Two tenets associated with this view deal with the indeterminacy in nature (see Heisenberg’s Uncertainty Principle) and the inability to prove everything about a system while operating within that system (see Gödel’s Incompleteness Theorems). It gets back to what we know for sure, and how we can measure it: the theory of knowledge, or epistemology.

From a systems perspective, the revolution in physics and epistemology of the early 20th century leads us to the concept that the whole is greater than the sum of its parts, and sometimes it is also different from the sum of its parts. Ultimately, the science of complexity and complex adaptive systems (CAS) finds its intellectual roots in the theories of Heisenberg, Einstein, Schrödinger (and his quantum cat), and Gödel.

This is a whole different way of thinking, a different paradigm, with different assumptions about what is knowable and how it is knowable. The world we live in is, more often than not, a non-linear world. Very simply, 2+2 may be greater than 4.

Think of Per Bak’s sandpile experiments in “How Nature Works” (Springer, New York, 1996). When is criticality reached? Once the pile is at its critical state, the last grain of sand can create a non-linear reaction, i.e. a single tiny grain causes the whole pile to collapse or to “flip” from one stable state to another. Think about the straw that broke the camel’s back. Ecologists such as C.S. Holling think about resilience in terms of criticality, i.e. you reach the tipping point when the ecosystem “flips” into another state.
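Bak’s sandpile is usually formalized as the Bak-Tang-Wiesenfeld model. The following is a minimal sketch assuming the textbook rules: a cell holding four or more grains topples, passing one grain to each of its four neighbours, and grains pushed off the edge of the grid are lost. The grid size, number of grains, random seed, and the helper names `drop_grain` and `simulate` are arbitrary illustrative choices, not anything taken from Bak’s book. Every grain is the same tiny input, yet the number of topplings it triggers varies enormously.

```python
import random

TOPPLE_AT = 4  # a cell holding 4 or more grains is unstable (textbook BTW rule)


def drop_grain(grid, row, col):
    """Add one grain at (row, col), relax the pile, and return the avalanche size.

    Avalanche size is counted here as the number of topplings the grain triggers.
    """
    n = len(grid)
    grid[row][col] += 1
    pending = [(row, col)]
    topplings = 0
    while pending:
        i, j = pending.pop()
        if grid[i][j] < TOPPLE_AT:
            continue  # already relaxed by an earlier toppling
        grid[i][j] -= 4  # give one grain to each of the four neighbours
        topplings += 1
        if grid[i][j] >= TOPPLE_AT:
            pending.append((i, j))  # still unstable, topple again later
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ni, nj = i + di, j + dj
            if 0 <= ni < n and 0 <= nj < n:  # grains pushed off the edge are lost
                grid[ni][nj] += 1
                if grid[ni][nj] >= TOPPLE_AT:
                    pending.append((ni, nj))
    return topplings


def simulate(n=15, grains=8000, seed=1):
    """Drop grains on random cells of an n x n pile; return (grid, avalanche sizes)."""
    rng = random.Random(seed)
    grid = [[0] * n for _ in range(n)]
    sizes = [drop_grain(grid, rng.randrange(n), rng.randrange(n)) for _ in range(grains)]
    return grid, sizes


grid, sizes = simulate()
steady = sizes[4000:]  # ignore the early build-up while the pile is still filling
print("grains that moved nothing:", sum(1 for s in steady if s == 0))
print("largest single avalanche (topplings):", max(steady))
```

Try it with a few different seeds: most grains disturb nothing, while an occasional grain of exactly the same size sets off an avalanche that sweeps across much of the grid. That is the sense in which the output is not proportional to the input.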

Some theorists have gone so far as to say that the closer you get to measuring a phenomenon, the more error your presence induces, i.e. Heisenberg’s Uncertainty Principle. The social scientist Karl Weick does a beautiful job of describing this in his seminal work The Social Psychology of Organizing (Weick, 1997). And, returning to Kurt Gödel: “Can we ever formulate a mathematical system that could contain the proofs of all its own truths?” With all apologies to Gödel, the truth of a system must come from outside the system. Very simplified, it’s like a wife asking her husband, “Are you having an affair?”

The physics of Newton is not wrong; we need it and use it every day. The intellectual tenets of auditors are not wrong either. We need their methodologies to carry out even the most basic commerce. However, neither is appropriate for problems characterized by high levels of complexity and uncertainty. Our responsibility is to make sure the wrong paradigm doesn’t dominate and do more harm than good. For example…

Robert McNamara was Secretary of Defense under Presidents Kennedy and Johnson during the Vietnam War. Before that, he had been the first person outside the Ford family to run the Ford Motor Company. As the press was fond of saying, he was one of the “Whiz Kids” of the Kennedy Administration.

He was what we would today call a “quant,” or numbers man. He introduced an efficiency-based brand of “systems analysis” called the Planning, Programming, and Budgeting System (PPBS). His staff focused on attributes that could be quantified. He demanded cost justification as part of the Defense Department budget process. Money is power in Washington, and McNamara secured his power as an administrator under this rubric.

According to McNamara, quantification lifts up and preserves those aspects of a phenomenon which can most easily be controlled and communicated to other specialists. Further, it imposes order on hazy thinking by banishing unique attributes from consideration and re-configuring what is difficult or obscure such that it fits the standardized model.

In short, anything other than measurable, quantifiable outcomes was irrelevant. Further, he assumed an economically rational linkage between the inputs of war (e.g. soldiers, weapons systems) and its outputs (e.g. dead VC). McNamara thought he could predict the probability and timing of victory based on the number of US troops in Vietnam. He was wrong.

| Year | Success | Inconclusive | Collapse |
|------|---------|--------------|----------|
| 1966 | .2 | .7 | .1 |
| 1967 | .4 | .45 | .15 |
| 1968 | .5 | .3 | .2 |

McNamara even went so far as to develop models predicting the probability of various outcomes by year, i.e. success, inconclusive, and collapse (here meaning the collapse of the South Vietnamese government). The truth was equated with that which could be counted. As a result, the options most reducible to quantification received the most attention in Vietnam.

Is there an institutional memory for foul-ups? Perhaps not. Much of Secretary of Defense Donald Rumsfeld’s perspective (at times called a fetish for numbers) can be traced back to McNamara. Obviously, this perspective found much favour with auditors, who also live and breathe by the numbers. It has also become entrenched in the current risk management philosophy of the GAO, or Government Accountability Office.

The GAO (originally the General Accounting Office) was established in 1921 under President Warren G. Harding. According to its history, “…the GAO was created because federal financial management was in disarray after World War I. Wartime spending had driven up the national debt, and Congress saw that it needed more information and better control over expenditures. The GAO is independent of the executive branch and has a broad mandate to investigate how federal dollars are spent.”

The GAO is staffed by honest, patriotic, hardworking people. The “whiz kids” were also honest, patriotic, and hardworking people. But remember what I said earlier: If you commit people to war, to danger, to risk… then your “world view” and all your underlying assumptions better hold water.

Using the calculus of cost-benefit in war (McNamara) or demanding probabilistic risk assessment in homeland security (GAO) is an invitation to disaster.

There are alternatives that better deal with the complexities of war and terrorism. We need to move those to the forefront of our risk management efforts. We owe it to the future and to the past…
