SYDNEY J. FREEDBERG JR.

The Army wants AI to detect hidden targets, like this M109A6 Paladin armored howitzer camouflaged during wargames in Germany.

AUSA: The Army has developed AI to spot hidden targets in reconnaissance photos and will field-test it in next year’s massive Defender 20 wargames in Europe, the head of the service’s Artificial Intelligence Task Force said here.

It’s just one of a host of combat applications for AI that the Army is exploring, Brig. Gen. Matt Easley said. Uses to be tested include shooting down drones, aiming tank guns, coordinating resupply and maintenance, planning artillery barrages, stitching different sensor feeds together into a single coherent picture, and analyzing how terrain blocks units’ fields of fire so commanders can be warned of blind spots in their defenses.

The most high-profile example of AI on the battlefield to date, the controversial Project Maven, used machine learning algorithms to sift hours of full-motion video looking for suspected terrorists and insurgents. By contrast, Easley said, the new application looks for tanks and other targets of interest in a major-power war, in keeping with the Pentagon’s increasing focus on Russia and China.

To date, the AI Task Force has mostly focused on non-combat applications like mapping natural disasters in real time for the National Guard, predicting Special Operations helicopter breakdowns before they happen, and improving the Army’s personnel system. But, Easley told an audience of contractors, soldiers, and journalists on the show floor of the Association of the US Army conference last week, “our final thrust this year is what we call intelligence support to operations, where we’re using offboard data” – that is, data gathered from a wide range of sensors, not just those onboard one aircraft or vehicle – “to look for peer competitor type targets.”

For example, Easley elaborated to reporters at a follow-on roundtable, you would feed the AI surveillance imagery of “a forested area” and ask it, “show me every tank you see in the image.” The AI would rapidly highlight the targets – far faster than a human imagery analyst could go through the same volume of raw intelligence – and pass them along to humans to take action.

Note that nobody’s talking about letting the computer pull the trigger to kill people. Although systems like the Army’s Patriot and the Navy’s Aegis have been allowed to automatically fire on unmanned targets like drones and missiles for decades, Pentagon policy and military culture make it hard – albeit not impossible – for humans to delegate the decision to use deadly force to a machine. And since AI can make absurd mistakes, and since an entire science of adversarial AI is emerging to find subtle ways to trick the algorithms into such mistakes, it’s probably best to have a human double-check before anybody starts shooting.

Machine learning can be deceived by subtle changes in the data: While the AI in this experiment recognized the left-hand image as a panda, distorting it by less than 1 percent, in ways imperceptible to the human eye, makes it register as an ape.

All those caveats aside, the target-detecting AI is already good enough that an Army acquisition program office has decided to try it, Easley said. It was the first such “transition” of a technology developed by the year-old task force into the Army’s formal procurement system. It will be “demonstrated” – Pentagonese for a relatively low-stakes test – during next year’s Defender 20 wargames involving 20,000 US troops and 17,000 NATO allies.

Created last fall, the task force is now nearly at full strength and can take on more projects, Easley said, such as a cybersecurity AI next year. Military networks have so many thousands of users and devices that just figuring out what is connected at any given time is a challenge, he said, let alone sifting through the vast amounts of network traffic to spot an ongoing intrusion. For all its faults, AI is much better than humans at quickly sorting through such masses of data.

AI can also quickly calculate potential lines of sight between different points of the battlefield. That’s why the AI Task Force is working on a “fields of fire” AI that uses the new IVAS targeting goggles to determine what area each soldier can cover with their weapons. The software would compile that data for the whole squad, platoon, company, or even battalion, giving commanders a map of exactly which approaches are defended and where any potential blind spots lie. (Another potential application of this same technology would be to analyze potential fields of fire from suspected or confirmed enemy positions to identify the safest routes past them.)
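
To make the line-of-sight idea concrete, here is a minimal sketch in Python. It is an illustration only, not the Army's software: the grid heightmap, the `eye_height` parameter, and the function names `has_line_of_sight`, `covered_cells`, and `blind_spots` are all assumptions invented for this example. It samples the terrain along a straight line between two cells, then merges what each position can see to find cells nobody covers.

```python
def has_line_of_sight(heights, a, b, eye_height=2.0):
    """Return True if cell b is visible from cell a over a grid heightmap.

    heights: 2D list of terrain elevations, indexed [row][col].
    a, b: (row, col) tuples.
    eye_height: hypothetical height of observer and target above ground.
    """
    (r0, c0), (r1, c1) = a, b
    z0 = heights[r0][c0] + eye_height
    z1 = heights[r1][c1] + eye_height
    steps = max(abs(r1 - r0), abs(c1 - c0))
    # Walk the cells strictly between a and b and compare terrain height
    # against the height of the straight sight line at that point.
    for i in range(1, steps):
        t = i / steps
        r = round(r0 + t * (r1 - r0))
        c = round(c0 + t * (c1 - c0))
        sight_z = z0 + t * (z1 - z0)
        if heights[r][c] > sight_z:
            return False  # terrain rises above the sight line
    return True


def covered_cells(heights, positions):
    """Union of all cells visible from any of the given positions."""
    rows, cols = len(heights), len(heights[0])
    covered = set()
    for pos in positions:
        for r in range(rows):
            for c in range(cols):
                if has_line_of_sight(heights, pos, (r, c)):
                    covered.add((r, c))
    return covered


def blind_spots(heights, positions):
    """Cells that no position can see."""
    rows, cols = len(heights), len(heights[0])
    every_cell = {(r, c) for r in range(rows) for c in range(cols)}
    return every_cell - covered_cells(heights, positions)


# A single ridge in the middle of a flat strip hides the far side
# from an observer at the left edge.
terrain = [[0, 0, 10, 0, 0]]
hidden = blind_spots(terrain, [(0, 0)])
```

A real system would also have to account for weapon range, vegetation, and each soldier's actual facing, none of which this sketch models.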

Priority projects for the Army’s AI Task Force.

All told, there is a tremendous number of potential military applications for AI, from the battlefield to the back office. The trick is not overwhelming the users with too many different specialized apps to figure out and not overwhelming the network with too much data moving back and forth.

After six months talking to all of the Army’s high-priority modernization task forces – the eight Cross Functional Teams – “we’ve got hundreds of potential areas where we can use artificial intelligence,” Easley said on the floor at AUSA. “We obviously can’t do hundreds of projects.” So rather than develop a bespoke solution for each request, he said, the AI Task Force is combining related requests and figuring out AI applications that can meet multiple modernization teams’ needs.

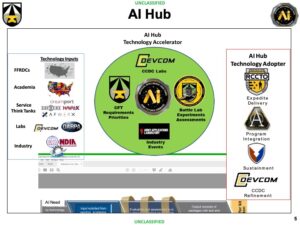

Partner organizations for the Army’s Artificial Intelligence Task Force.

“We can’t have 50 AI solutions across the Army,” Easley continued. “We need to make it easier for our soldiers to do AI regardless of what [role] they’re in and this is why we’re developing an AI ecosystem for use within the Army.” The idea is to pull data from a host of sources; curate it by putting it into standard formats, throwing out bad data, and so on; and create a central repository — not only for the data but for a family of AI models trained on that data. Users across the Army could download those proven algorithms and apply them to their own purposes.

Now, Easley acknowledged, this means a lot of data moving back and forth over the Army network. Modernizing that network is a major Army priority – No. 4 of its “Big Six” – and the Network modernization team plans major increases in satellite bandwidth for its 2023 update, Capability Set 23. But the AI Task Force is also looking to reduce those data demands wherever possible.

Consider Uber as an everyday example, Easley said. The company as a whole has a fairly sophisticated AI matching drivers with customers, but each individual user isn’t actually sending or receiving all that data. It boils down to “here I am, I want a ride to there” or “here I am, I’m willing to give someone a ride this far.” Likewise, a combat vehicle could transmit “here I am, I have this much ammunition, I have this much fuel. Please send supplies ASAP.”

Soldiers train with the IVAS augmented-reality headset at Fort Pickett.

You can extend this principle to even more complex functions like spotting targets or predicting breakdowns. If you put enough processing power and software on the frontline platforms themselves – a concept called “edge computing” – they can analyze complicated input from their own sensors and only send a summary report back over the network, rather than having to transmit raw data to some central supercomputer for analysis. A vehicle could send, “I need X maintenance in Y hours,” for example, rather than every bit of data collected by the diagnostic sensors on its engine. Or a drone could send “I see tanks here, here, and here, and a missile launcher there” rather than transmit full-motion video of everything in view.
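
The bandwidth saving from analyzing data at the edge can be sketched in a few lines of Python. Everything here is hypothetical: the reading format, the `temp_limit_c` threshold, and the `summarize_engine` function are assumptions made up for illustration, not a description of any fielded diagnostic system. The point is only that a short summary report is far smaller than the raw sensor log it was computed from.

```python
import json


def summarize_engine(readings, temp_limit_c=110.0):
    """Reduce raw engine readings to a short report.

    readings: list of dicts with a "temp_c" field (hypothetical format).
    temp_limit_c: hypothetical overheating threshold in degrees Celsius.
    """
    temps = [r["temp_c"] for r in readings]
    over = sum(1 for t in temps if t > temp_limit_c)
    return {
        "samples": len(temps),
        "max_temp_c": max(temps),
        "over_limit": over,
        "needs_maintenance": over > 0,
    }


# Simulated raw log: 1,000 temperature samples from one engine sensor.
raw = [{"t": i, "temp_c": 90.0 + (i % 50) * 0.5} for i in range(1000)]
summary = summarize_engine(raw)

# Size of each payload if serialized as JSON and sent over the network.
raw_bytes = len(json.dumps(raw).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))
```

Comparing `raw_bytes` with `summary_bytes` shows the trade-off: the platform spends its own processing power so the network carries only the conclusion.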

Now, not every present-day weapons system is smart enough to do the analysis by itself. Many systems don’t even have sensors to collect data, and installing them would be prohibitively expensive. “You’re not getting any data off my 20-year-old Honda Civic, [and] we can’t afford to retrofit all our Humvees from 30 years ago,” Easley said.

So one of the task force’s priorities will be to ensure that all the Army’s new weapons systems, from long-range missile launchers to targeting goggles, can collect and transmit the right kind of data on their own performance. That means embedding enough computing power to perform a lot of analysis at the edge, rather than having to transmit masses of data to a central location.

Big data gets a lot of attention and can put a lot of strain on networks. But, for many applications, Easley said, “we can just start little.”
